Firefox 16 will be the first version to support incremental garbage collection. This is a major feature, over a year in the making, that makes Firefox smoother and less laggy. With incremental GC, Firefox responds more quickly to mouse clicks and key presses. Animations and games will also draw more smoothly.

The basic purpose of the garbage collector is to collect memory that JavaScript programs are no longer using. The space that is reclaimed can then be reused for new JavaScript objects. Garbage collections usually happen every five seconds or so. Prior to incremental GC landing, Firefox was unable to do anything else during a collection: it couldn’t respond to mouse clicks or draw animations or run JavaScript code. Most collections were quick, but some took hundreds of milliseconds. This downtime can cause a jerky, frustrating user experience. (On Macs, it causes the dreaded spinning beachball.)

Incremental garbage collection fixes the problem by dividing the work of a GC into smaller pieces. Rather than do a 500 millisecond garbage collection, an incremental collector might divide the work into fifty slices, each taking 10ms to complete. In between the slices, Firefox is free to respond to mouse clicks and draw animations.
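To make the idea of slicing concrete, here is a minimal JavaScript sketch of a mark phase that does a bounded amount of work per slice and then returns control to the caller. It is not Firefox's implementation: the names `createMarker` and `markSlice` are hypothetical, the budget is counted in objects rather than milliseconds to keep the example deterministic, and the sketch assumes the object graph does not change between slices (a real incremental collector needs extra machinery, such as write barriers, to cope with that).

```javascript
// Hypothetical sketch: mark reachable objects a few at a time.
// Each "object" has the shape { id, refs: [...] }.
function createMarker(roots) {
  return { worklist: [...roots], marked: new Set() };
}

// Mark at most `budget` objects, then return so the caller can do other work.
// Returns true once there is nothing left to mark.
function markSlice(state, budget) {
  let processed = 0;
  while (state.worklist.length > 0 && processed < budget) {
    const obj = state.worklist.pop();
    if (state.marked.has(obj)) continue;
    state.marked.add(obj);
    for (const ref of obj.refs) {
      if (!state.marked.has(ref)) state.worklist.push(ref);
    }
    processed++;
  }
  return state.worklist.length === 0;
}

// Example graph: a -> b -> c, a -> d -> e; f is unreachable from the root.
const e = { id: "e", refs: [] };
const d = { id: "d", refs: [e] };
const c = { id: "c", refs: [] };
const b = { id: "b", refs: [c] };
const a = { id: "a", refs: [b, d] };
const f = { id: "f", refs: [a] };

const state = createMarker([a]);
let slices = 0;
let done = false;
while (!done) {
  done = markSlice(state, 2);
  slices++;
  // Between slices, a browser would be free to handle input and draw frames.
}
console.log(slices, state.marked.size); // 3 5
```

With a budget of two objects per slice, the five reachable objects are marked over three slices, and `f` is never marked because nothing reachable points to it.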

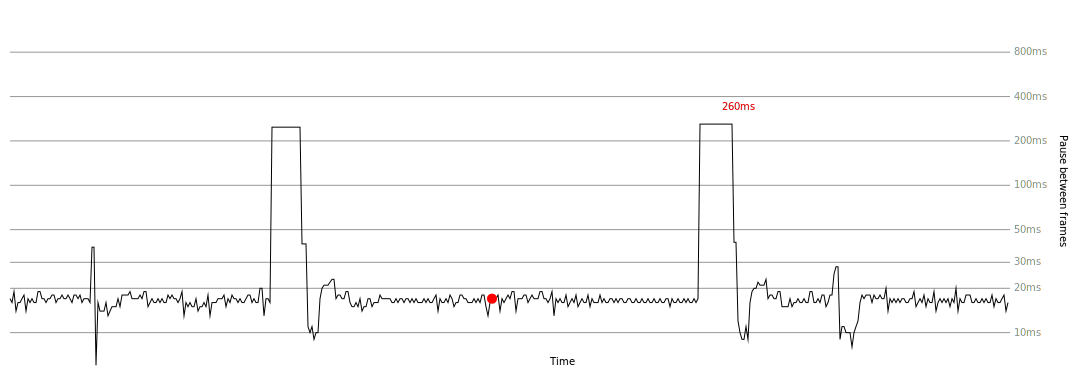

I’ve created a demo to show the difference made by incremental GC. If you’re running a Firefox 16 beta, you can try it out here. (If you don’t have Firefox 16, the demo will still work, although it won’t perform as well.) The demo shows GC performance as an animated chart. To make clear the difference between incremental and non-incremental GC, I’ll show two screenshots from the demo. The first one was taken with incremental GC disabled. Later I’ll show a chart with incremental collections enabled. Here is the non-incremental chart:

Time is on the horizontal axis; the red dot moves to the right and shows the current time. The vertical axis, drawn with a log scale, shows the time it takes to draw each frame of the demo. This number is the inverse of frame rate. Ideally, we would like to draw the animation at 60 frames per second, so the time between frames should be 1000ms / 60 = 16.667ms. However, if the browser needs to do a garbage collection or some other task, then there will be a longer pause between frames.
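As a rough illustration of the arithmetic, the sketch below computes the time between frames from a list of frame timestamps and picks out the worst one. It is an assumption about how such a chart could be driven, not the demo's actual code: `frameDurations` and the sample timestamps are hypothetical. In a page, the timestamps could come from the value passed to a `requestAnimationFrame` callback.

```javascript
// Ideal time between frames at 60 frames per second.
const TARGET_FRAME_MS = 1000 / 60; // about 16.667ms

// Given the times (in ms) at which frames were drawn, return the gaps between them.
function frameDurations(timestamps) {
  const durations = [];
  for (let i = 1; i < timestamps.length; i++) {
    durations.push(timestamps[i] - timestamps[i - 1]);
  }
  return durations;
}

// Hypothetical sample: steady frames, then one long pause.
const sampleTimestamps = [0, 17, 33, 50, 310, 327];
const durations = frameDurations(sampleTimestamps);
const worstFrameMs = Math.max(...durations);

console.log(durations);    // [ 17, 16, 17, 260, 17 ]
console.log(worstFrameMs); // 260
```

Any gap well above `TARGET_FRAME_MS` shows up as a bump on the chart.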

The two big bumps in the graph are where non-incremental garbage collections occurred. The number in red shows the time of the worst bump: in this case, 260ms. This means that the browser was frozen for a quarter second, which is very noticeable. (Note: garbage collections often don’t take this long. This demo deliberately allocates a lot of memory so that collections take longer, which makes the benefits of incremental GC easier to see.)

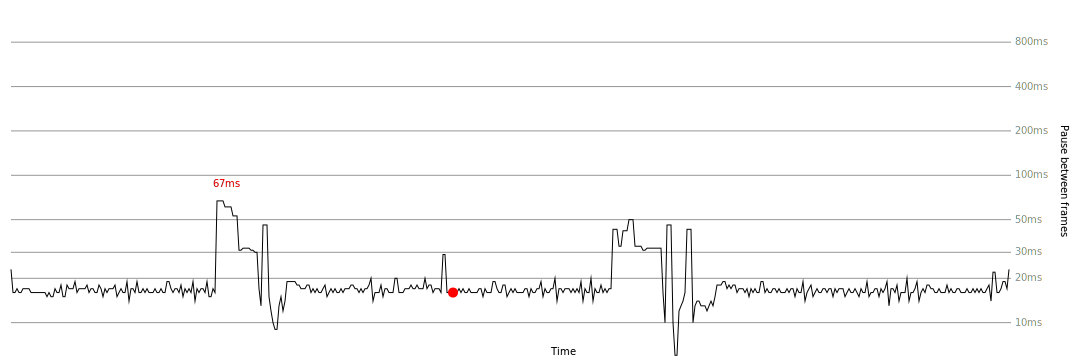

To generate the chart above, I disabled incremental GC by visiting about:config in the URL bar and setting the javascript.options.mem.gc_incremental preference to false. (Don’t forget to turn it on again if you try this yourself!) If I enable incremental GC, the chart looks like this:

This chart also shows two collections. However, the longest pause here is only 67ms. This pause is small enough that it is unlikely to be discernible. Notice, though, that the collections here are more spread out. In the top image, the 260ms pause is about 30 pixels wide. In the bottom image, the GCs are about 60 pixels wide. That’s because the incremental collections in the bottom chart are split into slices; in between the slices, Firefox is drawing frames and responding to input. So the total duration of the garbage collection is about twice as long. But it is much less likely that anyone will be affected by these shorter collection pauses.
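The trade-off between a shorter worst pause and a longer overall collection can be seen in a toy model. The sketch below is a simplification under stated assumptions, not a measurement of Firefox: `simulateCollection` is a hypothetical helper, it assumes exactly one frame is drawn between consecutive slices, and the 16ms frame cost is made up. The 500ms of work and 10ms slices are the figures used earlier in this post.

```javascript
// Hypothetical model: GC work is split into slices, and one frame is drawn
// between consecutive slices. All times are in milliseconds.
function simulateCollection(gcWorkMs, sliceMs, frameMs) {
  const sliceCount = Math.ceil(gcWorkMs / sliceMs);
  return {
    sliceCount,
    // Longest stretch during which the browser cannot draw or respond.
    worstPauseMs: Math.min(sliceMs, gcWorkMs),
    // The GC work itself plus the frames drawn in between slices.
    totalElapsedMs: gcWorkMs + (sliceCount - 1) * frameMs,
  };
}

const nonIncremental = simulateCollection(500, 500, 16);
const incremental = simulateCollection(500, 10, 16);

console.log(nonIncremental); // { sliceCount: 1, worstPauseMs: 500, totalElapsedMs: 500 }
console.log(incremental);    // { sliceCount: 50, worstPauseMs: 10, totalElapsedMs: 1284 }
```

In this model the incremental collection takes longer from start to finish, but no single pause exceeds one slice.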

At this point, we’re still working heavily on incremental collection. There are still some phases of collection that have not been incrementalized. Most of the time, these phases don’t take very long. But users with many tabs open may still see unacceptable pauses. Firefox 17 and 18 will have additional improvements that will decrease pause times even more.

If you want to explore further, you can install MemChaser, an addon for Firefox that shows garbage collection pauses as they happen. For each collection, the worst pause is displayed in the addon bar at the bottom of the window. It’s important to realize that not all pauses in Firefox are caused by garbage collection. You can use MemChaser to correlate the bumps in the chart with garbage collections reported by MemChaser.

If there is a bump when no garbage collection happened, then something else must have caused the pause. The Snappy project is a larger effort aimed at reducing pauses in Firefox. They have developed tools to figure out the sources of pauses (often called “jank”) in the browser. Probably the most important tool is the SPS profiler. If you can reliably reproduce a pause, then you can profile it and figure out what Firefox code was running that made us slow. Then file a bug!
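If you note down when bumps and collections happen, the correlation step can be done mechanically. The sketch below is only an illustration: the data shapes, the `classifyBumps` helper, and the 50ms tolerance are all hypothetical, and it does not use any MemChaser API or export format.

```javascript
// Hypothetical data shapes: a bump is { timeMs, durationMs };
// a GC event is { startMs, endMs }. Times share one clock, in milliseconds.
function classifyBumps(bumps, gcEvents, toleranceMs = 50) {
  return bumps.map((bump) => {
    const bumpStart = bump.timeMs;
    const bumpEnd = bump.timeMs + bump.durationMs;
    // A bump is attributed to GC if it overlaps a collection, give or take the tolerance.
    const overlapsGc = gcEvents.some(
      (gc) => bumpStart <= gc.endMs + toleranceMs && bumpEnd >= gc.startMs - toleranceMs
    );
    return { ...bump, likelyCause: overlapsGc ? "gc" : "other" };
  });
}

const bumps = [
  { timeMs: 1000, durationMs: 67 },
  { timeMs: 4200, durationMs: 120 },
];
const gcEvents = [{ startMs: 980, endMs: 1100 }];

const classified = classifyBumps(bumps, gcEvents);
console.log(classified.map((bump) => bump.likelyCause)); // [ 'gc', 'other' ]
```

Bumps labelled "other" are the ones worth profiling, since no collection was running when they happened.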
