There wasn’t much MemShrink activity this week in terms of bugs fixed, just bug 718100 and bug 720359. So I’m going to take the opportunity this week to talk about the bigger picture.

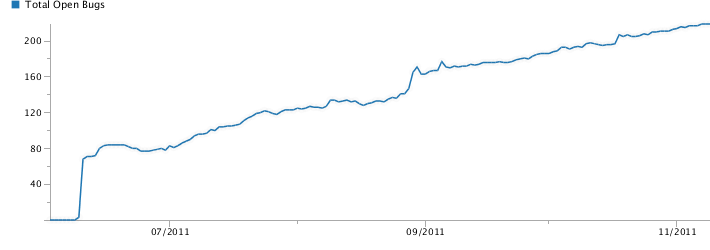

Bug Counts

As a prelude, here are this week’s bug counts.

- P1: 20 (-4/+0)

- P2: 131 (-3/+3)

- P3: 74 (-2/+7)

- Unprioritized: 4 (-3/+4)

The drop in P1s was just due to bug re-classification; in particular, three bugs relating to long cycle collector pauses were un-MemShrink’d because they are more about responsiveness, and they are being tracked by Project Snappy.

The Big Ticket Items

David Mandelin asked me today what the big-ticket items for MemShrink were. I’d been looking a lot at the MemShrink:P1 list recently (which is why some were re-classified) and so I was able to break it down into six main areas that cover most of the P1s and various P2s. I’ll list these from what I think is least important to most important.

#6: Better Script Handling

Internally, a JSScript represents (more or less) the code of a JS function, including things like the internal bytecode that SpiderMonkey generates for it. The memory used by JSScripts is measured by the “gc-heap/scripts” and “script-data” entries in about:memory.

Luke Wagner did some measurements that showed that most (70–80%) JSScripts created in the browser are never run. In hindsight, this isn’t so surprising — many websites load libraries like jQuery but only use a fraction of the functions in those libraries. If SpiderMonkey could be changed to generate bytecode for scripts lazily, it could reduce “script-data” memory usage by 60–70%. This would also allow the decompiler to be removed, which would be great.
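To make the lazy idea concrete, here is a minimal sketch. It is not how SpiderMonkey works internally; `LazyScript` and `compile_to_bytecode` are hypothetical names, and the "compiler" is just a stand-in. The point is only that bytecode is produced on the first call, so functions that are never run never pay for it.

```python
from dataclasses import dataclass, field
from typing import Optional


def compile_to_bytecode(source: str) -> bytes:
    """Stand-in for a real compiler (hypothetical): just encodes the source."""
    return source.encode("utf-8")


@dataclass
class LazyScript:
    """Holds only the source text until the function is first run."""
    source: str
    _bytecode: Optional[bytes] = field(default=None, repr=False)

    @property
    def compiled(self) -> bool:
        return self._bytecode is not None

    def run(self) -> bytes:
        # Compile on the first call only; later calls reuse the cached result.
        if self._bytecode is None:
            self._bytecode = compile_to_bytecode(self.source)
        return self._bytecode


# A page loads a library of 10 functions but only ever calls 3 of them.
scripts = [LazyScript(f"function f{i}() {{ return {i}; }}") for i in range(10)]
for script in scripts[:3]:
    script.run()

compiled_count = sum(script.compiled for script in scripts)
print(f"{compiled_count} of {len(scripts)} scripts compiled")
```

The other seven scripts keep only their source text, which is where the memory saving would come from.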

Luke also proposed sharing immutable parts of scripts between web pages. This would avoid a lot of duplication in the case where you have many tabs open with pages from a single site.

Both of these changes could also make the browser faster, because SpiderMonkey will spend less time compiling JavaScript source code to bytecode.

No one is assigned to work on these bugs. The lazy script creation can be done entirely within the JS engine; the script sharing requires assistance from Necko. Luke is currently busy with some other righteous refactorings, but I’m quietly hoping once they’re done he might find time for one or both of these bugs.

#5: Better Memory Reporting

Before you can reduce memory consumption you have to measure it. about:memory is the critical tool that has facilitated much of MemShrink’s work. (For example, we never would have known about zombie compartments without it.) It’s in pretty good shape now but there are two major improvements that can be made.

First, the “heap-unclassified” number (a.k.a. “dark matter”) is still typically around 20–25%. My goal is to reduce that to 10%. This won’t require any great new insights, because we already have the tools and data required. Rather, it’s just a matter of grinding through the list of memory reporters that need to be added and improved.

Second, the resources used by each browser tab are reported in an unwieldy fashion: JS memory on a per-compartment basis; layout memory on a per-docshell basis; DOM memory on a per-window basis. Only a few internal architectural changes stand in the way of uniting these to provide the oft-requested feature of per-tab memory reporting. This will be great for users, because if Firefox is using more memory than they’d like, it tells them which tabs they should close in order to free up memory.
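Once every measurement can be attributed to a tab, the roll-up itself is simple. The sketch below assumes that attribution already exists, which is exactly the architectural work that is still missing; the tab ids, reporter names and byte counts are made up for illustration.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# (tab id, kind of reporter, bytes). All values here are hypothetical.
Report = Tuple[str, str, int]


def per_tab_totals(reports: List[Report]) -> Dict[str, int]:
    """Sum JS, layout and DOM measurements into one number per tab."""
    totals: Dict[str, int] = defaultdict(int)
    for tab_id, _kind, size in reports:
        totals[tab_id] += size
    return dict(totals)


reports = [
    ("tab-1", "js-compartment", 40_000_000),
    ("tab-1", "layout-docshell", 8_000_000),
    ("tab-1", "dom-window", 2_000_000),
    ("tab-2", "js-compartment", 5_000_000),
    ("tab-2", "layout-docshell", 1_000_000),
]

totals = per_tab_totals(reports)
heaviest = max(totals, key=totals.get)
print(totals)
print(f"Close {heaviest} first to free the most memory")
```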

I am actively working on both these improvements, and I’m hoping that within a couple of months they’ll be mostly done.

#4: Better Memory Consumption Tracking

One thing we haven’t done well in MemShrink is to improve the state of tracking Firefox’s memory consumption. We have plenty of anecdotes but not much hard data about the improvements we’ve made, and we don’t have good ways to detect any regressions. A couple of ideas haven’t gone very far, but some good news is that John Schoenick is making great progress on a proper areweslimyet.com implementation. John has demonstrated preliminary versions of the site at two MemShrink meetings and it’s looking very promising. It uses the endurance test framework to make the measurements, and opens lots of pages from the Talos tp5 pageset.

We also hope to use telemetry data to analyze how the memory consumption of each released version of Firefox stacks up. That analysis would come with a significant delay — weeks or months after each release — but it would be much more comprehensive than any oft-run benchmark, coming from the real-world usage patterns of thousands of users.

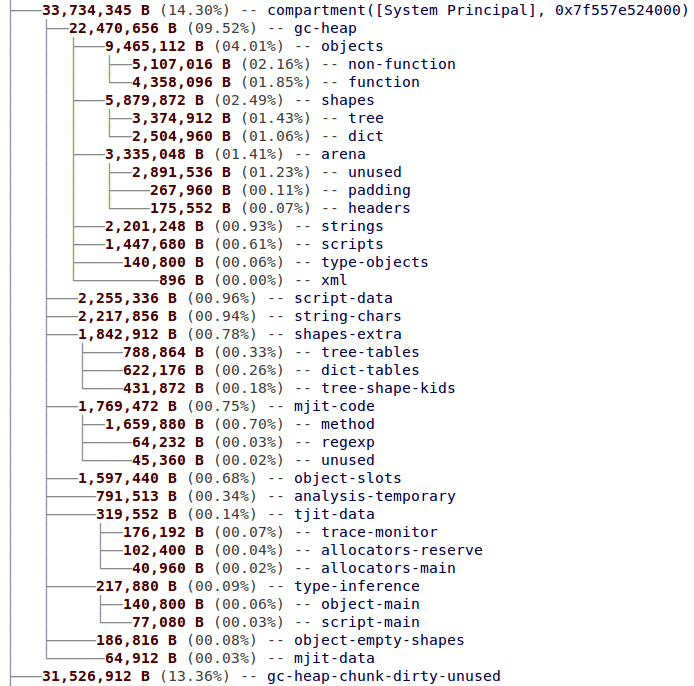

#3: Compacting Generational GC

If you look in about:memory, JavaScript memory usage usually dominates. In particular, the “js-gc-heap” is usually large. There’s also the “js-gc-heap-unused-fraction” number, often 30% or higher, which tells you how much of that space is unused because of fragmentation. That percentage overstates things somewhat, because often a good proportion of that unused space (see “js-gc-heap-decommitted”) is decommitted, which means that it’s costing nothing but address space… but that is cold comfort if you’re suffering out-of-memory aborts on Windows due to virtual memory exhaustion.
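To see how the three numbers relate, here is a small worked example. The figures are invented, and the function simply follows the description above (decommitted space is treated as a subset of the unused space); it is not a description of how about:memory computes anything.

```python
MIB = 1024 * 1024


def unused_breakdown(heap_bytes: int, unused_percent: int, decommitted_bytes: int):
    """Split the unused part of the GC heap into decommitted and still-committed."""
    unused = heap_bytes * unused_percent // 100
    if decommitted_bytes > unused:
        raise ValueError("decommitted space cannot exceed unused space")
    committed_unused = unused - decommitted_bytes
    return unused, committed_unused


# Hypothetical readings: a 200 MiB heap, 30% unused, 20 MiB decommitted.
unused, committed_unused = unused_breakdown(200 * MIB, 30, 20 * MIB)
print(f"unused: {unused // MIB} MiB")
print(f"still costing physical memory: {committed_unused // MIB} MiB")
print(f"costing address space only: {(unused - committed_unused) // MIB} MiB")
```

With these made-up readings, 60 MiB is unused but only 40 MiB of that is really costing memory; the other 20 MiB costs address space alone.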

A compacting garbage collector is one that can move objects around the heap, filling up all those little gaps that constitute fragmentation. The JS team (especially Bill McCloskey and Terrence Cole) is implementing a compacting generational garbage collector, which is a particular kind that tends to have good performance. In particular, many objects die young and generational collectors find these quickly, which means that the heap will grow at a significantly slower rate than it currently does. I could be wrong, but I’m convinced this will be a big win for both memory consumption and speed.
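The compacting half of that idea can be shown with a toy heap. This sketch models the heap as a list of slots with `None` for free ones and slides live objects to the front; it ignores the generational part entirely and bears no resemblance to the real implementation. The forwarding table is what a real collector would use to fix up pointers to moved objects.

```python
from typing import Dict, List, Optional


def compact(heap: List[Optional[str]]) -> Dict[int, int]:
    """Slide live objects to the front of the heap, in place.

    Returns a forwarding table mapping each object's old slot to its new slot.
    """
    forwarding: Dict[int, int] = {}
    next_free = 0
    for old_index, obj in enumerate(heap):
        if obj is None:
            continue  # free slot: nothing to move
        forwarding[old_index] = next_free
        heap[next_free] = obj
        if next_free != old_index:
            heap[old_index] = None  # the old slot becomes free
        next_free += 1
    return forwarding


# A fragmented heap: four live objects scattered across eight slots.
heap = ["a", None, "b", None, None, "c", None, "d"]
forwarding = compact(heap)
print(heap)
print(forwarding)
```

After compaction the free space is one contiguous run at the end, which is far easier to hand back to the operating system than scattered gaps.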

#2: Better Foreground Tab Image Handling

Images are stored in a compressed format (e.g. JPEG, PNG, GIF) on disk. In order to display them, a browser must decompress (a.k.a decode) the compressed form into a raw pixel form that can easily be ten times larger. This decoded form can be discarded and regenerated as necessary, and there are trade-offs to be made — for example, if you are too aggressive in discarding decoded images, you might have to decode them again, which will take CPU cycles and the user might see flickering if the decoding occurs in the visible part of the page.

However, Firefox goes way too far in the other direction. If you open a page in the foreground tab, every single image in that page will be immediately decoded, and none of the decoded data will be discarded unless you switch away to another tab. For pages that contain many images, this is a recipe for horrific memory consumption, and Firefox does much worse than all the other browsers. So this is a problem that doesn’t rear its head for all users, but it’s terrible for those that are affected.
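Some back-of-the-envelope arithmetic shows how quickly this adds up. The sketch assumes 4 bytes per decoded pixel (32-bit RGBA) and a hypothetical page of 50 images at 1600×1200; neither number comes from a real measurement.

```python
BYTES_PER_PIXEL = 4  # assumption: decoded images are stored as 32-bit RGBA


def decoded_size(width: int, height: int) -> int:
    """Bytes needed to hold one fully decoded image."""
    return width * height * BYTES_PER_PIXEL


# Hypothetical image-heavy page: 50 photos, each 1600x1200 pixels.
image_dimensions = [(1600, 1200)] * 50
total_decoded = sum(decoded_size(w, h) for w, h in image_dimensions)

print(f"one image: {decoded_size(1600, 1200) / 1_000_000:.2f} MB")
print(f"whole page: {total_decoded / 1_000_000:.0f} MB")
```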

There are three MemShrink:P1 bugs relating to this: one about not decoding all images immediately, one about discarding non-visible decoded images after some time, and one about some infrastructure work that is required for the first two. As far as I know, no progress has been made on these three bugs, and although two of them are assigned they are not being actively worked on.

(See this discussion on the dev-platform mailing list for more details about this topic.)
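The first two of those bugs boil down to "decode only when visible" and "discard decoded data that hasn't been visible for a while". Here is a minimal sketch of that policy. The class and method names, the timeout, and the fake `decode` function are all assumptions for illustration; this is not Firefox's image code.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


def decode(compressed: bytes) -> bytes:
    """Stand-in decoder (hypothetical): pretends the output is 10x larger."""
    return compressed * 10


@dataclass
class Image:
    compressed: bytes
    decoded: Optional[bytes] = None
    last_visible: float = 0.0


class DecodedImageCache:
    def __init__(self, discard_after_seconds: float) -> None:
        self.discard_after = discard_after_seconds
        self.images: Dict[str, Image] = {}

    def add(self, name: str, compressed: bytes) -> None:
        # Adding an image does not decode it.
        self.images[name] = Image(compressed)

    def mark_visible(self, name: str, now: float) -> None:
        # Decode lazily, the first time the image scrolls into view.
        image = self.images[name]
        if image.decoded is None:
            image.decoded = decode(image.compressed)
        image.last_visible = now

    def discard_stale(self, now: float) -> List[str]:
        # Drop decoded data for images that have been out of view too long.
        discarded = []
        for name, image in self.images.items():
            if image.decoded is not None and now - image.last_visible > self.discard_after:
                image.decoded = None
                discarded.append(name)
        return discarded


cache = DecodedImageCache(discard_after_seconds=10.0)
cache.add("hero", b"\x00" * 100)
cache.add("footer", b"\x00" * 100)
cache.add("offscreen", b"\x00" * 100)   # never scrolled into view
cache.mark_visible("hero", now=0.0)
cache.mark_visible("footer", now=0.0)
cache.mark_visible("hero", now=20.0)    # user scrolls back to the top
discarded = cache.discard_stale(now=25.0)
print(discarded)
```

Timestamps are passed in explicitly so the example is deterministic; a real implementation would choose the timeout by balancing memory against the re-decoding cost and flicker mentioned above.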

#1: Better Detection and Notification of Leaky Add-ons

It’s been the case for several months that when a user complains about Firefox consuming an excessive amount of memory, it’s usually because of one or more add-ons, and the “can you try that again in safe mode?” / “oh yeah, that fixes it” dance is getting tiresome.

Many add-ons leak. Even popular, well-written ones: in the past few months leaks have been found in Adblock Plus, Video DownloadHelper, Greasemonkey and Firebug. That’s four of the top five add-ons on AMO! We’re now getting several reports a week about leaky add-ons; in this week’s MemShrink meeting there were four: TorButton, NoSquint, Customize Your Web, and 1Password. I strongly suspect the leaks we know about are just the tip of the iceberg.

Although leaks in add-ons are not Mozilla’s fault, they are Mozilla’s problem: Firefox gets blamed for the sins of its add-ons. And it’s not just memory consumption; the story is the same for performance in general. Here’s the quote of the week, from a user of 1Password:

I only use a handful of extensions and honestly never suspected 1P, however after disabling it I noticed my FireFox performance increased very noticibly. I’ve been running for 48 hours now without the 1P extension in Firefox and wow what a difference. Browsing is faster, switching is faster, memory usage is way down.

I’ve lost count of the number of stories like this that I’ve heard. How many users have we lost to Chrome because of these issues, I wonder?

(And it’s not just leaks. See this analysis of 16 add-ons and their effect on memory consumption when Firefox starts.)

One small step towards improving this situation was made this week: Jorge Villalobos and Andrew Williamson added a “check for memory leaks” item to the AMO review checklist (under “Memory leaks from content”). And Kris Maglione added some support for this checking in his Extension Test add-on. This means that add-ons with obvious memory leaks (and many of them are obvious if you are actively looking for them) will not be accepted by AMO.

So that will prevent leaks in some new add-ons and new versions of established add-ons. What about existing add-ons? One idea is that AMO could also have a flag that indicates add-ons that have known memory problems (and other performance problems). (This flag wouldn’t be an automatic thing; it would only be set once a leak has been confirmed, and after giving the author notification and some time to fix the problem.) So that would also improve things a bit.

But lots of add-ons aren’t hosted on AMO. Another idea is to have a stronger mechanism, one that informs the user if they have any add-ons installed that are known to cause high memory consumption (or other bad performance problems). There is an existing mechanism for blocking add-ons that are known to be malware or exceptionally crashy, so hopefully the warnings could piggy-back on top of that.

Then, we need a better way to detect leaky add-ons. Currently this is done entirely by hand (and a couple of excellent contributors have found leaks in multiple add-ons), but I’m hoping that it’ll be possible to do a much more thorough job by analyzing telemetry data to find out which add-ons are correlated with high memory consumption. That information could be used to trigger manual checking.
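One very simple form that telemetry analysis could take is comparing median memory usage between sessions with and without each add-on. The sketch below uses invented samples and placeholder add-on names, and the real telemetry data may look nothing like this. A large gap is only a correlation, so it would be a prompt for manual checking rather than proof of a leak.

```python
from statistics import median
from typing import List, Set, Tuple

# (set of installed add-ons, memory usage in MB). All data is hypothetical.
Sample = Tuple[Set[str], int]


def median_memory_gap(samples: List[Sample], addon: str) -> float:
    """Median memory with the add-on minus median memory without it."""
    with_addon = [mb for addons, mb in samples if addon in addons]
    without = [mb for addons, mb in samples if addon not in addons]
    if not with_addon or not without:
        raise ValueError(f"need samples both with and without {addon}")
    return median(with_addon) - median(without)


def rank_addons(samples: List[Sample]) -> List[Tuple[str, float]]:
    """Order add-ons by how strongly they go with high memory usage."""
    addons = sorted(set().union(*(installed for installed, _ in samples)))
    gaps = [(addon, median_memory_gap(samples, addon)) for addon in addons]
    return sorted(gaps, key=lambda pair: pair[1], reverse=True)


samples = [
    ({"addon-a", "addon-b"}, 900),
    ({"addon-a"}, 850),
    ({"addon-b"}, 400),
    (set(), 380),
    ({"addon-b", "addon-c"}, 420),
    ({"addon-a", "addon-c"}, 950),
]

ranked = rank_addons(samples)
for addon, gap in ranked:
    print(f"{addon}: {gap:+.0f} MB")
```

In this made-up data, sessions with `addon-a` use far more memory than those without it, so it would be the first candidate for a manual leak check.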

Finally, once you know an add-on leaks, it’s not always easy to work out why. Tools could help a lot here, if they can be made to work well.

Conclusion

I listed six big areas for improvement. If we fixed all of these I think we’d be in a fantastic position.

Three of them (#5 better memory reporting, #4 better memory consumption tracking, #3 compacting generational GC) have people working on them and are in a good state.

Three of them (#6 better script handling, #2 better foreground tab image handling, #1 better detection and notification of leaky add-ons) don’t have people working on them, as far as I know. If you are willing and have the skills to contribute to any of these areas, please contact me!

And if you think I’ve overestimated or underestimated the importance of any issue, I’d love to hear about it. Thanks!