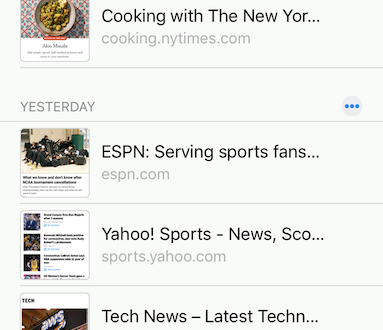

Ordering Browser Tabs Chronologically to Support Task Continuity

Product teams working on Firefox at Mozilla have long been interested in helping people get things done, whether that’s completing homework for school, shopping for a pair of shoes, or …