In part II, we looked at the problem of Web authentication and covered the twin problems of phishing and password database compromise. In this post, I’ll be covering some of the technologies that have been developed to address these issues.

This is mostly a story of failure, though with a sort of hopeful note at the end. The ironic thing here is that we’ve known for decades how to build authentication technologies which are much more secure than the kind of passwords we use on the Web. In fact, we use one of these technologies (public key authentication via digital certificates) to authenticate the server side of every HTTPS transaction before you send your password over. HTTPS supports certificate-based client authentication as well, and while public key client authentication is commonly used in other settings, such as SSH, it’s rarely used on the Web. Even if we restrict ourselves to passwords, we have long had technologies for password authentication which completely resist phishing, but they are not integrated into the Web technology stack at all. The problem, unfortunately, is less about cryptography than about deployability, as we’ll see below.

Two Factor Authentication and One-Time Passwords

The most widely deployed technology for improving password security goes by the name one-time passwords (OTP) or (more recently) two-factor authentication (2FA). OTP actually goes back to well before the widespread use of encrypted communications, or even the Web, to the days when people would log in to servers in the clear using Telnet. It was of course well known that Telnet was insecure and that anyone who shared the network with you could just sniff your password off the wire[1] and then log in with it. [Technical note: this is called a replay attack.] One partial fix for this attack was to supplement the user password with another secret which wasn’t static but rather changed every time you logged in (hence a “one-time” password).

OTP systems came in a variety of forms but the most common was a token about the size of a car key fob but with an LCD display, like this:

The token would produce a new pseudorandom numeric code every 30 seconds or so, and when you went to log in to the server you would provide both your password and the current code. That way, even if the attacker got the code they still couldn’t log in as you for more than a brief period[2] unless they also stole your token. If all of this looks familiar, it’s because this is more or less the same as modern OTP systems such as Google Authenticator, except that instead of a hardware token, these systems tend to use an app on your phone and have you log into some Web form rather than over Telnet. The reason this is called “two-factor authentication” is that authenticating requires both something you know (the password) and something you have (the device). Some other systems use a code that is sent over SMS but the basic idea is the same.
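To make the "new code every 30 seconds" idea concrete, here is a minimal sketch of the time-based scheme in RFC 6238, which is what the footnote on server-side compromise refers to as truncated HMAC(Secret, time). The function name `totp` and the example secret are illustrative assumptions, not any particular app’s API; real deployments use a random per-user secret shared between the server and the device.

```python
import hashlib
import hmac
import struct
import time


def totp(secret: bytes, now: float, step: int = 30, digits: int = 6) -> str:
    """Return the RFC 6238 (HMAC-SHA1) code for the time window containing `now`."""
    counter = int(now) // step  # index of the current 30-second window
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation, as defined in RFC 4226
    value = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(value % 10 ** digits).zfill(digits)


if __name__ == "__main__":
    # Illustrative secret only (it is the one used in the RFC test vectors).
    shared_secret = b"12345678901234567890"
    print(totp(shared_secret, time.time()))
```

With the RFC’s test secret, `totp(b"12345678901234567890", 59)` returns `"287082"`, which matches the last six digits of the published test vector for that time. Any two timestamps in the same 30-second window give the same code, which is why a captured code stays usable for a brief period.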

OTP systems don’t provide perfect security, but they do significantly improve the security of a password-only system in two respects:

- They guarantee a strong, non-reused secret. Even if you reuse passwords and your password on site A is compromised, the attacker still won’t have the right code for site B.[3]

- They mitigate the effect of phishing. If you are successfully phished the attacker will get the current code for the site and can log in as you, but they won’t be able to log in in the future because knowing the current code doesn’t let you predict a future code. This isn’t great but it’s better than nothing.
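The phishing point can be sketched from the server’s side. The example below is an assumption-laden illustration, not any site’s actual implementation: `verify` and its `drift` parameter are hypothetical names, and the small RFC 6238-style generator is restated so the block runs on its own. It shows that a captured code is accepted only while its time window (plus whatever clock drift the server tolerates) is current.

```python
import hashlib
import hmac
import struct


def totp(secret: bytes, now: float, step: int = 30, digits: int = 6) -> str:
    """RFC 6238-style code (HMAC-SHA1) for the window containing `now`."""
    counter = int(now) // step
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F
    value = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(value % 10 ** digits).zfill(digits)


def verify(secret: bytes, submitted: str, now: float,
           step: int = 30, drift: int = 1) -> bool:
    """Accept a code from the current window or up to `drift` windows either side."""
    for k in range(-drift, drift + 1):
        t = now + k * step
        if t < 0:
            continue  # no window exists before time zero
        if hmac.compare_digest(totp(secret, t, step), submitted):
            return True
    return False


if __name__ == "__main__":
    secret = b"12345678901234567890"  # illustrative secret only
    phished = totp(secret, 59)        # code the attacker captured
    print(verify(secret, phished, 59, drift=0))          # still inside the window
    print(verify(secret, phished, 1111111109, drift=0))  # long after the window
```

A larger `drift` is friendlier to devices with inaccurate clocks, but it also lengthens the period during which a phished code remains useful.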

The nice thing about a 2FA system is that it’s comparatively easy to deploy: it’s a phone app you download plus another code that the site prompts you for. As a result, phone-based 2FA systems are very popular (and if that’s all you have, I advise you to use it, but really you want WebAuthn, which I’ll be describing in my next post).

Password Authenticated Key Agreement

One of the nice properties of 2FA systems is that they do not require modifying the client at all, which is obviously convenient for deployment. That way you don’t care if users are running Firefox or Safari or Chrome, you just tell them to get the second factor app and you’re good to go. However, if you can modify the client you can protect your password rather than just limiting the impact of having it stolen. The technology to do this is called a Password Authenticated Key Agreement (PAKE) protocol.

The way a PAKE would work on the Web is that it would be integrated into the TLS connection that already secures your data on its way to the Web server. On the client side when you enter your password the browser feeds it into TLS and on the other side, the server feeds in a verifier (effectively a password hash). If the password matches the verifier, then the connection succeeds, otherwise it fails. PAKEs aren’t easy to design — the tricky part is ensuring that the attacker has to reconnect to the server for each guess at the password — but it’s a reasonably well understood problem at this point and there are several PAKEs which can be integrated with TLS.
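To give a feel for the password-in, verifier-in shape of a PAKE, here is a toy sketch modeled on SRP (one particular PAKE, specified for TLS in RFC 5054). Everything about it is simplified and assumed for illustration: the group is far too small to be secure, the hash encoding is ad hoc rather than the RFC’s, both sides run in one function, and the validity checks and key-confirmation messages a real protocol needs are omitted. The only point is that both sides derive the same key exactly when the typed password matches the stored verifier, and the password itself is never sent.

```python
import hashlib
import secrets

# Toy group: 2**127 - 1 is prime but much too small for real use.
# Real deployments use the large groups listed in RFC 5054.
N = 2**127 - 1
g = 3


def H(*parts) -> int:
    """Hash ints/bytes to an integer (ad hoc encoding, not RFC 5054's)."""
    h = hashlib.sha256()
    for p in parts:
        data = p if isinstance(p, bytes) else str(p).encode()
        h.update(len(data).to_bytes(4, "big") + data)
    return int.from_bytes(h.digest(), "big")


k = H(N, g)  # multiplier that ties B to the verifier


def make_verifier(password: str, salt: bytes) -> int:
    """What the server stores instead of the password."""
    return pow(g, H(salt, password.encode()), N)


def run_handshake(typed_password: str, salt: bytes, verifier: int):
    """Simulate client and server together; return (client_key, server_key)."""
    a = secrets.randbelow(N - 2) + 1       # client ephemeral secret
    b = secrets.randbelow(N - 2) + 1       # server ephemeral secret
    A = pow(g, a, N)                       # sent client -> server
    B = (k * verifier + pow(g, b, N)) % N  # sent server -> client
    u = H(A, B)
    x = H(salt, typed_password.encode())   # computed only on the client
    client_s = pow((B - k * pow(g, x, N)) % N, a + u * x, N)
    server_s = pow((A * pow(verifier, u, N)) % N, b, N)
    return H(client_s), H(server_s)


if __name__ == "__main__":
    salt = secrets.token_bytes(16)
    v = make_verifier("correct horse", salt)
    ck, sk = run_handshake("correct horse", salt, v)
    print("keys match with right password:", ck == sk)
    ck, sk = run_handshake("wrong guess", salt, v)
    print("keys match with wrong password:", ck == sk)
```

In a TLS integration, the derived key would feed into the handshake, so a mismatch simply shows up as a failed connection.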

What a PAKE gets you is security against phishing: even if you connect to the wrong server, it doesn’t learn anything about your password that it doesn’t already know because you just get a cryptographic failure. PAKEs don’t help against password file compromise because the server still has to store the verifier, so the attacker can perform a password cracking attack on the verifier just as they would on the password hash. But phishing is a big deal, so why doesn’t everyone use PAKEs? The answer here seems to be surprisingly mundane but also critically important: user interface.

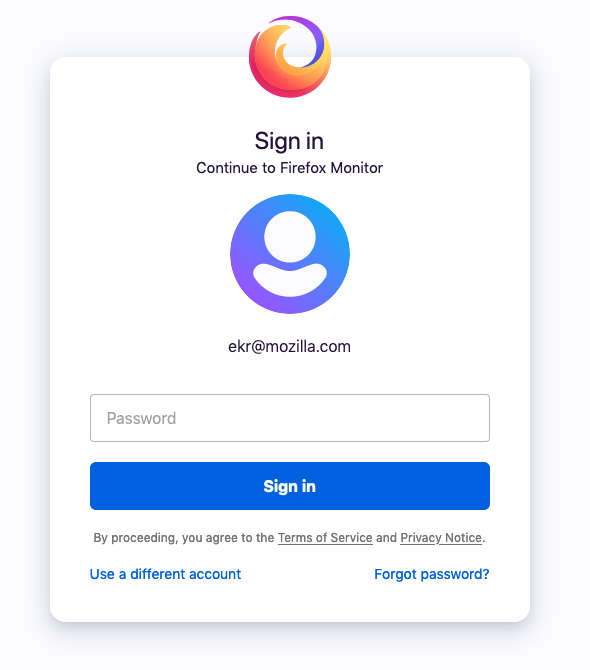

The way that most Web sites authenticate is by showing you a Web page with a field where you can enter your password, as shown below:

When you click the “Sign In” button, your password gets sent to the server which checks it against the hash as described in part I. The browser doesn’t have to do anything special here (though often the password field will be specially labelled so that the browser can automatically mask out your password when you type); it just sends the contents of the field to the server.
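For contrast with a PAKE, the server side of this ordinary form login can be sketched as follows. This assumes a salted PBKDF2 hash with an arbitrarily chosen iteration count; the scheme described in part I or used by any given site may differ, and the function names are hypothetical. The thing to notice is that `check_login` receives the password itself, which is exactly what a phishing page takes advantage of.

```python
import hashlib
import hmac
import os

ITERATIONS = 200_000  # assumed work factor, chosen for illustration


def hash_password(password: str, salt: bytes) -> bytes:
    """Derive a slow, salted hash of the password."""
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)


def register(password: str):
    """Return the (salt, hash) pair the server would store for a new user."""
    salt = os.urandom(16)
    return salt, hash_password(password, salt)


def check_login(submitted: str, salt: bytes, stored_hash: bytes) -> bool:
    """Check the password that arrived in the form field against the stored hash."""
    return hmac.compare_digest(hash_password(submitted, salt), stored_hash)


if __name__ == "__main__":
    salt, stored = register("hunter2")
    print(check_login("hunter2", salt, stored))
    print(check_login("hunter3", salt, stored))
```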

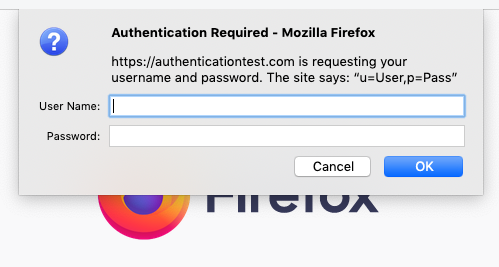

In order to use a PAKE, you would need to replace this with a mechanism where you gave the browser your password directly. Browsers actually have something for this, dating back to the earliest days of the Web. On Firefox it looks like this:

Hideous, right? And I haven’t even mentioned the part where it’s a modal dialog that takes over your experience. In principle, of course, this might be fixable, but it would take a lot of work and would still leave the site with a lot less control over their login experience than they have now; understandably they’re not that excited about that. Additionally, while a PAKE is secure from phishing if you use it, it’s not secure if you don’t, and nothing stops the phishing site from skipping the PAKE step and just giving you an ordinary login page, hoping you’ll type in your password as usual.

None of this is to say that PAKEs aren’t cool tech, and they make a lot of sense in systems that have less flexible authentication experiences; for instance, your email client probably already requires you to enter your authentication credentials into a dialog box, and so that could use a PAKE. They’re also useful for things like device pairing or account access where you want to start with a small secret and bootstrap into a secure connection. Apple is known to use SRP, a particular PAKE, for exactly this reason. But because the Web already offers a flexible experience, it’s hard to ask sites to take a step backwards and PAKEs have never really taken off for the Web.

Public Key Authentication

From a security perspective, the strongest thing would be to have the user authenticate with a public/private key pair, just like the Web server does. As I said above, this is a feature of TLS that browsers actually have supported (sort of) for a really long time but the user experience is even more appalling than for built-in passwords.[4] In principle, some of these technical issues could have been fixed, but even if the interface had been better, many sites would probably still have wanted to control the experience themselves. In any case, public key authentication saw very little usage.

It’s worth mentioning that public key authentication actually is reasonably common in dedicated applications, especially in software development settings. For instance, the popular SSH remote login tool (replacing the unencrypted Telnet) is commonly used with public key authentication. In the consumer setting, Apple AirDrop uses iCloud-issued certificates with TLS to authenticate your contacts.

Up Next: FIDO/WebAuthn

This was the situation for about 20 years: in theory public key authentication was great, but in practice it was nearly unusable on the Web. Everyone used passwords, some with 2FA and some without, and nobody was really happy. There had been a few attempts to try to fix things but nothing really stuck. However, in the past few years a new technology called WebAuthn has been developed. At heart, WebAuthn is just public key authentication but it’s integrated into the Web in a novel way which seems to be a lot more deployable than what has come before. I’ll be covering WebAuthn in the next post.

[1] And by “wire” I mean a literal wire, though such sniffing attacks are also prevalent in wireless networks such as those protected by WPA2.

[2] Note that to really make this work well, you also need to require a new code in order to change your password, otherwise the attacker can change your password for you in that window.

[3] Interestingly, OTP systems are still subject to server-side compromise attacks. The way that most of the common systems work is to have a per-user secret which is then used to generate a series of codes, e.g., truncated HMAC(Secret, time) (see RFC 6238). If an attacker compromises the secret, then they can generate the codes themselves. One might ask whether it’s possible to design a system which doesn’t store a secret on the server but rather some public verifier (e.g., a public key), but this does not appear to be secure if you also want to have short (e.g., six-digit) codes. The reason is that if the information that is used to verify is public, the attacker can just iterate through every possible six-digit code and try to verify it themselves. This is easily possible during the 30 second or so lifetime of the codes. Thanks to Dan Boneh for this insight.

[4] The details are kind of complicated here, but here are just some of the problems: (1) TLS client authentication is mostly tied to certificates, and the process of getting a certificate into the browser was just terrible; (2) the certificate selection interface is clunky; (3) until TLS 1.3, the certificate was actually sent in the clear unless you did TLS renegotiation, which had its own problems, particularly around privacy.

Update: 2020-07-21: Fixed up a sentence.