Here’s a quick quiz to start this discussion:

As a marketer, if you’re willing to pay (or bid) $0.20 per click through your paid search marketing programs, and you’re currently paying an average CPC (cost per click) of $0.10, what is the most you’re paying for a single click?

Hint #1: the answer is not $0.20 (or anything less than that). Hint #2: Please keep in mind our marginal cost discussion from last time. Our answer will be described near the conclusion of this post.

We previously looked at an experiment with our online marketing programs in three earlier posts. The results revolved around the interaction of regular (organic) search with paid search advertisements, and how understanding this interaction helps us better account for our true marketing costs.

More recently, we conducted an experiment to isolate the effect of a single variable, keyword bidding, within the paid search process. In other words, if we adjust our bidding on certain keywords, how does this one factor affect our overall marketing budget and, ultimately, the number of downloads and active usage of Firefox?

Here is our methodology blended with a hypothetical situation based on our experiment’s actual findings (I’m keeping things hypothetical to focus on the business question and not the data itself):

- We compared three different seven-day periods in January on a major search engine

- We then assigned a single bidding amount to our top-performing keywords in each period: we left bidding unchanged during the first period (we’ll call this our “status quo bidding”), adjusted it to $x in the second period (test week 1), and adjusted it to $y in the third period (test week 2)

- Turning to the results, we’ll want to see how the total clicks (or downloads) and cost of these keywords vary from week to week

- At this point, we’re cognizant of a couple of flaws in our methodology and we want to highlight these for full disclosure: (1) comparing three different time periods doesn’t give us a perfect apples-to-apples comparison, and (2) we’re primarily relying on click data and largely ignoring download or usage data

- Let’s assume that given your conversion rate (i.e., whatever you use to measure success in terms of clicks to purchase, clicks to revenue, clicks to download, etc.), you’ve previously determined that your maximum willingness to pay (or bid) is $0.20 per click.

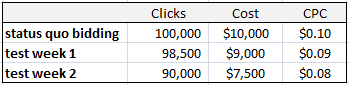

- Looking at total clicks and total cost for each of the three weeks, you arrive at the following findings:

You’ll notice that your CPC didn’t vary much across the three weeks. Given our quiz at the beginning of this post, you might assume that if you’re operating under the status quo bidding strategy, you’re in okay shape. However, if you take test week 2 as the base case (instead of the status quo), you might ask yourself a different set of questions. For example, moving from our bidding strategy in test week 2 to our bidding strategy in test week 1, we see that we gain 8,500 clicks at a cost of $1,500. That works out to $0.18 for each of those additional clicks — still below our $0.20 max bid.

But what happens when we then move from our test week 1 bidding strategy to our status quo bidding strategy? We see a gain of 1,500 clicks at an additional cost of $1,000, or $0.67 per additional click!
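The arithmetic behind those two comparisons can be sketched in a few lines of Python. This is only an illustration using the hypothetical deltas quoted above; the function name `marginal_cpc` and the variable names are my own labels, not part of any ad platform's tooling.

```python
def marginal_cpc(extra_cost, extra_clicks):
    """Cost of each additional click gained by moving to a higher bid."""
    if extra_clicks <= 0:
        raise ValueError("extra_clicks must be positive")
    return extra_cost / extra_clicks


MAX_BID = 0.20  # maximum willingness to pay per click, from the example

# (label, additional cost in dollars, additional clicks), as quoted in the text
steps = [
    ("test week 2 -> test week 1", 1500.0, 8500),
    ("test week 1 -> status quo", 1000.0, 1500),
]

results = {}
for label, extra_cost, extra_clicks in steps:
    cost_per_extra_click = marginal_cpc(extra_cost, extra_clicks)
    results[label] = cost_per_extra_click
    verdict = "within" if cost_per_extra_click <= MAX_BID else "above"
    print(f"{label}: ${cost_per_extra_click:.2f} per additional click "
          f"({verdict} the ${MAX_BID:.2f} max bid)")
```

The point of comparing each step against the max bid, rather than comparing the average CPC, is that the average can sit comfortably below $0.20 while the last batch of clicks costs far more.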

At this point, you may be thinking that this analysis seems confusing or counterintuitive. So here’s another way to look at it: when you move from test week 1 to status quo bidding, you have to spend an extra penny on each of the first 98,500 clicks (your average CPC is now $0.10 rather than $0.09). Those pennies add up to nearly an extra $1,000 that you’re spending for the exact same clicks… that’s wasted money that you then have to factor into your true cost of gaining those additional 1,500 clicks.
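Here is the same "extra penny" view as a rough calculation. It uses only the figures from the paragraph above; since both CPCs are rounded averages, treat the result as an approximation rather than a reconciliation with the $1,000 total. The variable names are illustrative.

```python
# Figures quoted in the text; both CPC values are rounded averages.
clicks_at_lower_bid = 98_500
cpc_lower = 0.09
cpc_higher = 0.10
additional_clicks = 1_500

# Extra spend on clicks we were already getting at the lower bid.
repricing_cost = clicks_at_lower_bid * (cpc_higher - cpc_lower)

# Spread that extra spend over the clicks the higher bid actually added.
repricing_per_new_click = repricing_cost / additional_clicks

print(f"Extra spend on existing clicks: ${repricing_cost:,.0f}")
print(f"Spread over the additional clicks: ${repricing_per_new_click:.2f} each")
```

Nearly all of the cost of the additional clicks comes from paying more for clicks we already had, which is why the marginal figure is so much higher than the average CPC.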

So, what do these findings say about Mozilla’s online marketing efforts and how will they change our business decisions? First, we’ve seen that experiments are a good thing and we’ll continue to do more of them. Second, those pennies and that marginal cost ($0.67 in the example) add up to significant amounts, both over time and when you consider the scale of Mozilla’s acquisition marketing efforts. Any time we can allocate our resources more efficiently, it’s a win both for our operations and for the Firefox community.
