Web Workers are additional threads that a website can create. Using workers, a website can utilize multiple CPU cores to speed itself up, or move heavy single-core processing to a background thread to keep the main (UI) thread as responsive as possible.

A problem, however, is that many APIs exist only on the main thread, for example WebGL. WebGL is a natural candidate for running in a worker, as it is often used by things like 3D games, simulations, etc., which run large amounts of JavaScript that can stall the main thread. Therefore there have been discussions about supporting WebGL in workers (for example, work is ongoing in Firefox), and hopefully this will be widely supported eventually. But that will take some time, so perhaps we can polyfill this in the meantime? That is what this blog post is about: WebGLWorker is a new open source project that makes the WebGL API available in workers. It does so by transparently proxying the necessary commands to the main thread, where WebGL is then rendered.

Quick overview of the rest of this post:

- We’ll describe WebGLWorker’s design, and how it allows running WebGL-using code in workers, without any modifications to that code.

- While the proxying approach has some inherent limitations which prevent us from implementing 100% of the WebGL API, the part that we can implement turns out to be sufficient for several real-world projects.

- We’ll see performance numbers on the proxying approach used in WebGLWorker, showing that it is quite efficient, which is perhaps surprising since it sounds like it might be slow.

Examples

Before we get into technical details, here are two demos that show the project in action:

- PlayCanvas: PlayCanvas is an open source 3D game engine written in JavaScript. Here is the normal version of one of their examples, and here is the worker version.

- BananaBread: BananaBread is a port of the open source Cube 2/Sauerbraten first person shooter using Emscripten. Here is one of the levels running normally, and here it is in a worker. (Note that startup on the worker version may be sluggish; see the notes on “nested workers”, below, for why.)

In both cases the rendered output should look identical whether running on the main thread or in a worker. Note that neither of these two demos was modified to run in a worker: the exact same code, in both cases, runs either on the main thread or in a worker (see the WebGLWorker repo for details and examples).

How it works

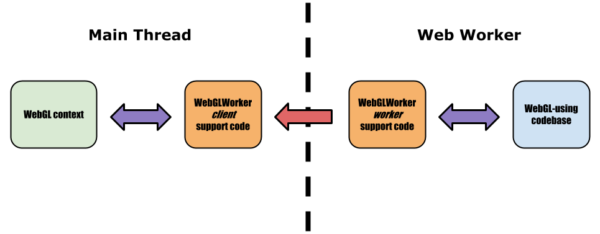

WebGLWorker has two parts: The worker and the client (= main thread). On the worker, we construct what looks like a normal WebGL context, so that a project using WebGL can use the WebGL API normally. When you call it, the WebGLWorker worker code queues rendering commands into buffers, then sends them over at the end of a frame using postMessage. The client code on the main thread receives such command buffers, and then executes them, command after command, in order. Here is what this architecture looks like:

Note that the WebGL-using codebase interacts with WebGLWorker’s worker code synchronously: it calls into it and receives responses back immediately. For example, createShader returns an object representing a shader. To do that, the worker code needs to parse shader source files and so forth. Basically, we end up implementing some of the “frontend” of WebGL ourselves, in JS. And, of course, on the client side the WebGLWorker client code interacts synchronously with the actual browser WebGL context, executing commands and saving the responses where relevant. However, the important thing to note is that the WebGLWorker worker code sends messages to the client code, using postMessage, but it cannot receive responses – the response would be asynchronous, but WebGL application code is written synchronously. So that arrow goes only in one direction.
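The one-way command flow described above can be sketched in a few lines. This is only an illustration of the idea, not WebGLWorker's actual code: the names `makeProxyContext`, `executeCommands` and `flushFrame`, the three-command table, and the buffer layout are all assumptions made for this example, and a logging object stands in for the real WebGL context so the sketch runs outside a browser.

```javascript
// Hypothetical command table: the index of a name is its opcode.
const COMMAND_NAMES = ["clearColor", "clear", "viewport"];

// Worker side: an object that looks like a context but only records calls.
function makeProxyContext(send) {
  let queue = [];
  const ctx = {};
  COMMAND_NAMES.forEach((name, opcode) => {
    ctx[name] = (...args) => {
      // Record the opcode, the argument count, then the arguments.
      queue.push(opcode, args.length, ...args);
    };
  });
  // Called once per frame: ship the whole buffer in a single message.
  ctx.flushFrame = () => {
    send(queue);
    queue = [];
  };
  return ctx;
}

// Client side: replay the commands, in order, against a context.
function executeCommands(gl, buffer) {
  let i = 0;
  while (i < buffer.length) {
    const name = COMMAND_NAMES[buffer[i++]];
    const argc = buffer[i++];
    gl[name](...buffer.slice(i, i + argc));
    i += argc;
  }
}

// Demo: a logging stand-in for WebGL, and a direct function call standing in
// for postMessage (in a real setup the buffer would cross threads here).
const callLog = [];
const fakeGL = {};
for (const name of COMMAND_NAMES) {
  fakeGL[name] = (...args) => callLog.push([name, ...args]);
}

const proxy = makeProxyContext((buffer) => executeCommands(fakeGL, buffer));
proxy.viewport(0, 0, 640, 480);
proxy.clearColor(0, 0, 0, 1);
proxy.clear(16384); // 16384 is the value of gl.COLOR_BUFFER_BIT
proxy.flushFrame();
console.log(callLog);
```

Nothing flows back from `executeCommands` to the proxy, which mirrors the single-direction arrow in the diagram.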

For that reason, a major limitation of this approach is synchronous operations that we cannot implement ourselves, like readPixels and getError. In both of those, we don’t know the answer ourselves: we need to get a response from the actual WebGL context on the client, but we can’t access it synchronously from a worker. As a consequence we do not support readPixels, and for getError, we return “no error” optimistically in the worker where getError is called, proxy the call, and if the client gets an error message back, we abort since it’s too late to tell the worker at that point. We are therefore limited in what we can accomplish with this approach. However, as the demos above show, two real-world projects work out of the box without issues.
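The optimistic handling of getError might look roughly like the following. The function names and the string used as a queue entry are made up for this sketch, and small stub objects stand in for the real WebGL context on the client.

```javascript
const NO_ERROR = 0; // same value as gl.NO_ERROR

// Worker side (hypothetical): answer immediately, and queue a request for the
// client to check the real error state later.
function makeWorkerGetError(queue) {
  return function getError() {
    queue.push("getError");
    return NO_ERROR; // optimistic: the real answer is not available here
  };
}

// Client side (hypothetical): run the real query. If it reports an error, the
// worker has already been given a wrong answer, so the only option is to stop.
function checkErrorOnClient(gl) {
  const err = gl.getError();
  if (err !== NO_ERROR) {
    throw new Error(
      "GL error 0x" + err.toString(16) + " after the worker was told NO_ERROR"
    );
  }
}

// Demo with stub contexts.
const errorQueue = [];
const getError = makeWorkerGetError(errorQueue);
console.log(getError()); // the worker side always returns 0

checkErrorOnClient({ getError: () => NO_ERROR }); // passes silently

const brokenGL = { getError: () => 0x0502 }; // value of gl.INVALID_OPERATION
try {
  checkErrorOnClient(brokenGL);
} catch (e) {
  console.log(e.message);
}
```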

Performance

I think most people’s intuition would be that this approach has to slow things down. After all, we are doing more work – queue commands in a buffer, serialize it, transfer it, deserialize it, and then execute the commands. All of that instead of just running each command as it is invoked! Now, on a single-core machine that would be correct, but on a multi-core machine there are reasons to suspect otherwise, because the worker thread often does other heavy JavaScript operations, so freeing it up quickly to do whatever else it needs can be beneficial. There are a few reasons why that might be possible:

- Some commands are proxied literally by just appending a few numbers to an array. That can be faster than calling into a DOM API which crosses into native C++ code.

- Some commands are handled locally in JavaScript, without proxying at all. Again, this can be faster than crossing the native code boundary.

- Calling from the browser’s native code into the graphics driver can cause delays, which proxying avoids.
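As a small illustration of the first two points, here is a sketch in which enable and disable are proxied by appending to an array, while isEnabled is answered locally from mirrored state. Whether WebGLWorker treats these particular calls this way is an assumption; `makeCapabilityTracker` is a name invented for this example, and the sketch ignores capabilities that WebGL enables by default.

```javascript
// Hypothetical worker-side wrapper: state changes are queued for the client
// and also mirrored locally, so queries never need a round trip.
function makeCapabilityTracker(queue) {
  const enabled = new Set();
  return {
    enable(cap) {
      enabled.add(cap);
      queue.push("enable", cap); // proxied: just two array entries
    },
    disable(cap) {
      enabled.delete(cap);
      queue.push("disable", cap);
    },
    isEnabled(cap) {
      return enabled.has(cap); // handled locally, nothing is queued
    },
  };
}

const DEPTH_TEST = 0x0b71; // same value as gl.DEPTH_TEST
const capQueue = [];
const caps = makeCapabilityTracker(capQueue);
caps.enable(DEPTH_TEST);
console.log(caps.isEnabled(DEPTH_TEST), capQueue.length);
```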

So on the one hand it seems obvious that this must be slower, but there are also some reasons to think it might not be. Let’s see some measurements!

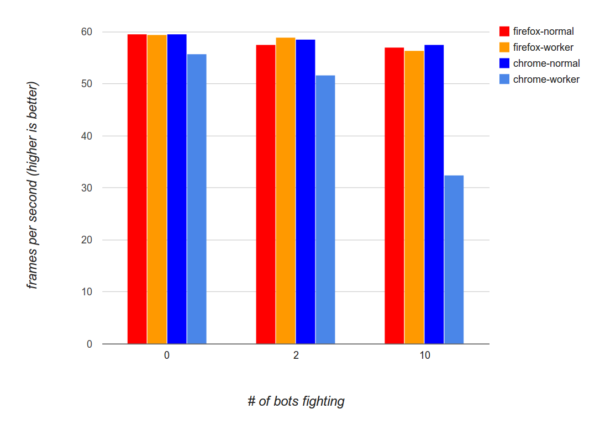

The chart shows frames per second (higher numbers are better) on the BananaBread demo, on Firefox and Chrome (latest developer versions, Firefox 33 and Chrome 37, on Linux; results on other OSes are overall similar), on a normal version running on the main thread, and a worker version using WebGLWorker. Frame rates are shown for different numbers of bots, with 2 being a “typical” workload for this game engine, 0 being a light workload and 10 being unrealistically large (note that “0 bots” does not mean “no work” – even with no bots, the game engine renders the world, the player’s model and weapon, HUD information, etc.). As expected, as we go from 0 bots to 2 and then 10, frame rates decrease a little, because the game does more work, both on the CPU (AI, physics, etc.) and on the GPU (render more characters, weapon effects, etc.). However, on the normal versions (not running in a worker), the decrease is fairly small, just a few frames per second under the optimal 60. On Firefox, we see similar results when running in a worker as well: even with 10 bots the worker version is about as fast as the normal version. On Chrome, we do see a slowdown on the worker version, of just a few frames per second for reasonable workloads, but a larger one for 10 bots.

Overall, then, WebGLWorker and the proxying approach do fairly well: While our intuition might be that this must be slow, in practice on reasonable workloads the results are reasonably fast, comparable to running on the main thread. And it can perform well even on large workloads, as can be seen by 10 bots on the worker version on Firefox (Chrome’s slowdown there appears to be due to the proxying overhead being more expensive for it – it shows up high on profiles).

Note, by the way, that WebGLWorker could probably be optimized a lot more – it doesn’t take advantage of typed array transfer yet (which could avoid much of the copying, but would make the protocol a little more complex), nor does it try to consolidate typed arrays in any way (hundreds of separate ones can be sent per frame), and it uses a normal JS array for the commands themselves.
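To make the typed array transfer idea concrete, here is a minimal sketch. The command layout is invented for the example. In a worker the call would be `postMessage(message, [commands.buffer])`; the sketch uses `structuredClone` with a transfer list instead, which applies the same transfer semantics but can be run synchronously in one thread (it needs a reasonably recent browser or Node.js).

```javascript
// One frame's commands packed into a single typed array (hypothetical layout:
// opcode, argument count, arguments, repeated).
const commands = new Float32Array([2, 4, 0, 0, 640, 480, 1, 1, 16384]);
const message = { frame: 1, commands };

// Transfer the underlying buffer instead of copying it.
const received = structuredClone(message, { transfer: [commands.buffer] });

// After a transfer the sender's view is detached and reports zero bytes,
// while the receiver owns the data.
console.log(commands.byteLength);
console.log(received.commands.length);
```

The extra protocol complexity mentioned above comes from exactly this detaching: once a buffer is transferred, the sender can no longer reuse it and has to allocate a new one or get the old one sent back.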

A final note on performance: All the measurements from before are for throughput, not latency. Running in a worker inherently adds some amount of latency, as we send user input to the worker and receive rendering back, so a frame or so of lag might occur. It’s encouraging that BananaBread, a first person shooter, feels responsive even with the extra latency – that type of game is typically very sensitive to lag.

“Real” WebGL in workers – that is, directly implemented at the browser level – is obviously still very important, even with the proxying polyfill. Aside from reducing latency, as just mentioned, real WebGL in workers also does not rely on the main thread to be free to execute GL commands, which the proxying approach does.

Other APIs

Proxying just WebGL isn’t enough for a typical WebGL-using application. There are some simple things like proxying keyboard and mouse events, but we also run into more serious issues, like the lack of HTML Image elements in workers. Both PlayCanvas and BananaBread use Image elements to load image assets and convert them to WebGL textures. WebGLWorker therefore includes code to proxy Image elements as well, using a similar approach: When you create an Image and set its src URL, we proxy that info and create an Image on the main thread. When we get a response, we fire the onload event, and so forth, after creating JS objects that look like what we have on the main thread.
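A rough sketch of what such an Image stand-in could look like on the worker side follows. The class name `ProxyImage`, the message shapes, and the `send` parameter are all assumptions for illustration, and the "main thread" in the demo is a stub that replies immediately rather than loading anything.

```javascript
// Hypothetical worker-side replacement for Image.
const pendingImages = new Map();
let nextImageId = 0;

class ProxyImage {
  constructor(send) {
    this.id = nextImageId++;
    this.width = 0;
    this.height = 0;
    this.onload = null;
    this.onerror = null;
    this._src = "";
    this._send = send; // stands in for postMessage to the main thread
  }
  get src() {
    return this._src;
  }
  set src(url) {
    this._src = url;
    pendingImages.set(this.id, this);
    // Ask the main thread to create a real Image and load this URL.
    this._send({ type: "loadImage", id: this.id, url });
  }
}

// Called when the main thread reports the outcome of a load.
function handleImageReply(reply) {
  const image = pendingImages.get(reply.id);
  if (!image) return;
  pendingImages.delete(reply.id);
  if (reply.ok) {
    image.width = reply.width;
    image.height = reply.height;
    if (image.onload) image.onload();
  } else if (image.onerror) {
    image.onerror();
  }
}

// Demo: a stub main thread that reports every URL as a loaded 64x64 image.
const fakeMainThread = (msg) =>
  handleImageReply({ id: msg.id, ok: true, width: 64, height: 64 });

const img = new ProxyImage(fakeMainThread);
let loaded = false;
img.onload = () => {
  loaded = true;
};
img.src = "texture.png";
console.log(loaded, img.width, img.height);
```

In a real setup the reply would arrive asynchronously and would also need to carry the pixel data, so that the image can later be turned into a texture on the main thread.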

Another missing API turns out to be Workers themselves! While the spec supports workers created in workers (“nested workers” or “subworkers”), and while an html5rocks article from 2010 mentions subworkers as being supported, they seem to only work in Firefox and Internet Explorer so far (Chrome bug, Safari bug). BananaBread uses workers for 2 things during startup: to decompress a gzipped asset file, and to decompress crunched textures, which greatly improves startup speed. To get the BananaBread demo to work in browsers without nested workers, it includes a partial polyfill for Workers in Workers (which is far from complete – just enough to run the 2 workers actually needed). (This incidentally is the reason why Chrome startup on the BananaBread worker demo is slower than on the non-worker demo – the polyfill uses just one core, instead of 3, and it has no choice but to use eval, which is generally not optimized very well.)

Finally, there are a variety of missing APIs, like requestAnimationFrame, that are straightforward to fill in directly without proxying (see proxyClient.js/proxyWorker.js in the WebGLWorker repo). These are typically not hard to implement, but the sheer number of APIs a website might use that are not available in workers means that hitting one of them is the most likely problem when porting an app to run in a worker.
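For instance, a requestAnimationFrame fill-in can be approximated with a timer. This is a guess at the general shape, not the code in proxyWorker.js: `installAnimationFrame` is a hypothetical name, and a fixed 60 Hz timer is a simplification of what a browser does.

```javascript
// Hypothetical fill-in: approximate requestAnimationFrame with a ~60 Hz timer.
function installAnimationFrame(scope) {
  const FRAME_MS = 1000 / 60;
  scope.requestAnimationFrame = (callback) =>
    setTimeout(() => callback(performance.now()), FRAME_MS);
  scope.cancelAnimationFrame = (handle) => clearTimeout(handle);
}

// In a worker, `scope` would be the worker's global object. A plain object is
// used here so the sketch does not modify any real global.
const workerScope = {};
installAnimationFrame(workerScope);

const frameHandle = workerScope.requestAnimationFrame((time) => {
  console.log("frame at", time);
});
workerScope.cancelAnimationFrame(frameHandle); // cancelled, so nothing is logged
```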

Summary

Based on what we’ve seen, it looks surprisingly practical to polyfill APIs via proxying in order to make them available in workers. And WebGL is one of the larger and higher-traffic APIs, so it is reasonable to expect that applying this approach to something like WebSockets or IndexedDB, for example, would be much more straightforward. (In fact, perhaps the web platform community could proxy an API first and use that to prioritize speccing and implementing it in workers?) Overall, it looks like proxying could enable more applications to run code in web workers, thus doing less on the main thread and maximizing responsiveness.
