I’m excited to announce a new Mozilla Research experiment: the Emterpreter, a pure-JavaScript interpreter that can start running large Emscripten-compiled apps faster than JavaScript engines can, giving developers control over the latency/throughput trade-off.

An app’s startup time is a precious resource. For small apps, minification and image compression are good enough to provide a smooth user onboarding experience. But when a codebase gets large enough, the JavaScript engine startup costs—in particular, parsing—can add up to noticeable startup delays.

What can we do to improve JavaScript parse time? The obvious steps are removing unneeded code and minifying, but those only get you so far. We wanted to try a more extreme experiment: what if we compressed asm.js into a bytecode format and shipped it along with a small interpreter? Read on for some interesting results!

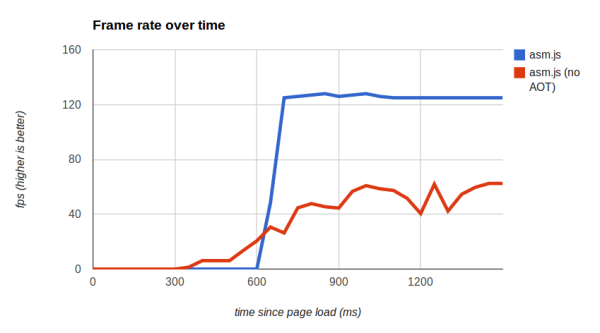

First let’s see how we can measure the problem:

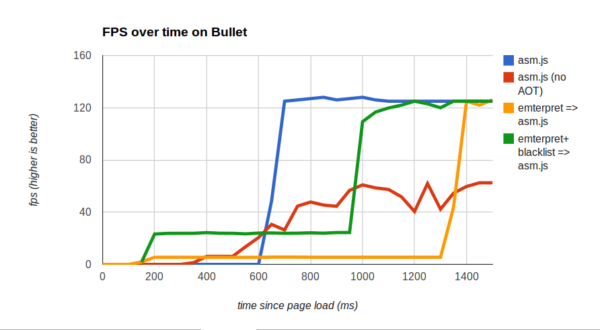

Here we ran the Bullet physics engine in Firefox with and without ahead-of-time (AOT) compilation. The numbers show a classic latency-vs-throughput trade-off: AOT compilation of asm.js maximizes our sustained speed, but at some cost in startup time.

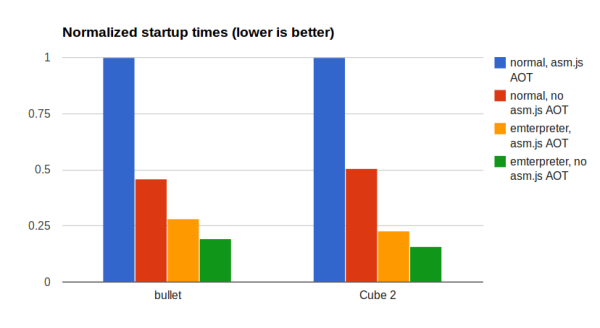

The Emterpreter is an experiment to address the latency side of this equation. We’ve added an experimental command-line flag to the Emscripten compiler to convert the generated asm.js code to bytecode format and emit a JavaScript interpreter for that bytecode. Let’s see the effect this has on startup times:

We ran the Bullet and Cube 2 benchmarks with asm.js and Emterpreter modes, running each with and without AOT. The Emterpreter starts up significantly faster – unsurprisingly, since loading unprocessed binary data (which is what the Emterpreter bytecode is) is faster than the JavaScript engine processing that code.
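To make the idea concrete, here is a minimal sketch of a bytecode interpreter in JavaScript. The opcodes, the four-byte instruction layout, and the names `interpret` and `program` are all made up for illustration; they are not the Emterpreter's real instruction set. The point is only that the program is plain bytes in a typed array, so the JavaScript engine has to parse the small interpreter loop but never the program itself.

```javascript
// Hypothetical opcodes, for illustration only (not the Emterpreter's real format).
const OP_CONST = 0; // regs[a] = b
const OP_ADD = 1;   // regs[a] = regs[b] + regs[c]
const OP_MUL = 2;   // regs[a] = regs[b] * regs[c]
const OP_RET = 3;   // return regs[a]

// Assumed layout: every instruction is 4 bytes (opcode, a, b, c).
function interpret(code, regs) {
  let pc = 0;
  while (true) {
    const op = code[pc];
    const a = code[pc + 1];
    const b = code[pc + 2];
    const c = code[pc + 3];
    pc += 4;
    switch (op) {
      case OP_CONST: regs[a] = b; break;
      case OP_ADD: regs[a] = (regs[b] + regs[c]) | 0; break;
      case OP_MUL: regs[a] = Math.imul(regs[b], regs[c]); break;
      case OP_RET: return regs[a];
      default: throw new Error("unknown opcode " + op);
    }
  }
}

// Bytecode for (2 + 3) * 4. To the JavaScript engine this is just data.
const program = new Uint8Array([
  OP_CONST, 0, 2, 0,
  OP_CONST, 1, 3, 0,
  OP_ADD,   2, 0, 1,
  OP_CONST, 3, 4, 0,
  OP_MUL,   2, 2, 3,
  OP_RET,   2, 0, 0,
]);

const result = interpret(program, new Int32Array(8));
console.log(result); // 20
```

In a real app the typed array would come from a downloaded binary file rather than a literal, which is where the startup win would come from.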

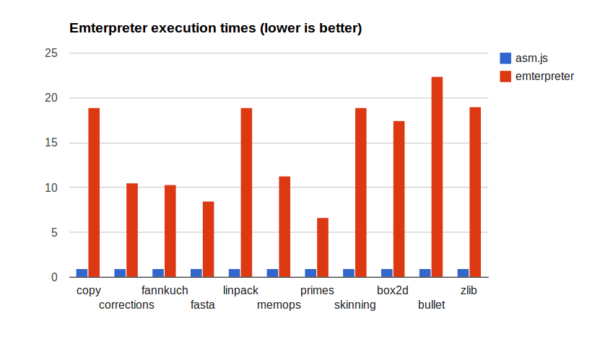

Naturally, while the startup times improved, the costs of running the code through an interpreter are substantial. Running the benchmarks from the Emscripten benchmark suite, we see slowdowns of anywhere from 6x to 22x compared to normal asm.js execution:

That’d certainly be disappointing if it were all we could do. But we designed the Emterpreter to allow mixed execution. Some functions are “emterpreted,” and others run normally as asm.js. This lets us run most code in bytecode format, but leave the performance-sensitive parts running at full asm.js speed – outside of the Emterpreter.
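One way to picture mixed execution is sketched below. Everything here is hypothetical (the toy `runBytecode` interpreter, the function names, and the `buildModule` helper are assumptions, not how Emscripten actually emits code): each function is either left as ordinary JavaScript or replaced by a small trampoline that hands its bytecode to the interpreter, and callers cannot tell the difference.

```javascript
// Toy interpreter (hypothetical): applies a list of [op, operand] steps to x.
function runBytecode(steps, x) {
  let acc = x;
  for (const [op, k] of steps) {
    if (op === "add") acc += k;
    else if (op === "mul") acc *= k;
    else throw new Error("unknown op " + op);
  }
  return acc;
}

// The same two functions in both forms, as a compiler might have them available.
const nativeBodies = {
  hotLoop: (x) => (x + 1) * 2,
  setup: (x) => x + 10,
};
const bytecodeBodies = {
  hotLoop: [["add", 1], ["mul", 2]],
  setup: [["add", 10]],
};

// Functions named in `keepNative` stay as normal code;
// all others become trampolines into the interpreter.
function buildModule(keepNative) {
  const mod = {};
  for (const name of Object.keys(nativeBodies)) {
    mod[name] = keepNative.has(name)
      ? nativeBodies[name]
      : (x) => runBytecode(bytecodeBodies[name], x);
  }
  return mod;
}

// Keep only the performance-sensitive function out of the interpreter.
const mixed = buildModule(new Set(["hotLoop"]));
console.log(mixed.hotLoop(3), mixed.setup(3)); // 8 13
```

The design choice worth noting is that both kinds of function share one calling convention, so moving a function between the two groups is a build-time decision rather than a code change.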

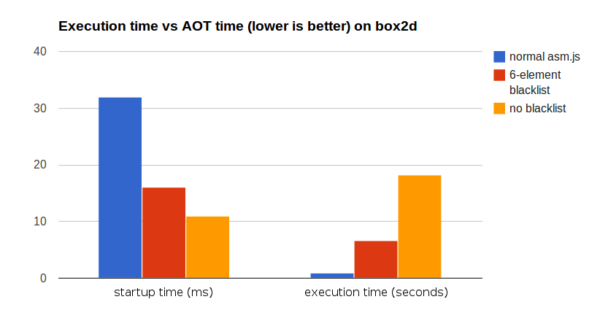

As an example, we can run the Box2D physics engine mostly in the Emterpreter but with a “blacklist” of 6 performance-sensitive functions that remain in asm.js:

On the left we can see the blacklist slows down startup only slightly, and on the right we can see that execution time takes a much smaller hit than running purely in the Emterpreter. This shows that there is promise to this approach to balancing startup time and speed: developers can selectively improve startup time without losing all the performance benefits of asm.js.

Finally, there’s one more optimization trick we can use. So far it has seemed we couldn’t significantly improve startup times without taking a hit in our peak performance. How can we avoid this compromise? The answer: start up quickly in emterpreted mode, but load the asm.js version in the background. Browsers can parse scripts loaded with `<script async>` tags on a background thread (as Firefox does). Since AOT compilation of asm.js is done at parse time, developers can force compilation onto a background thread. Apps can even get notified with a callback when the code is ready. While this can delay the point at which we reach peak performance, it also gives apps control over their user experience, such as showing a splash screen and staying responsive while code is still being compiled. Once the callback is called, the emterpreted code can be “hot swapped” with the optimized asm.js code (this is practical to do because asm.js code is in a very modular form), and the app will run at full speed.
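A rough sketch of the hot-swap idea follows. The names (`makeEmterpreted`, `makeAsm`, `onAsmReady`, `app`) and the file name in the comment are assumptions for illustration, not Emscripten's actual runtime API. Both implementations are built over the same heap, which is what lets the swap happen mid-run without losing state.

```javascript
// Shared state: both implementations read and write the same heap.
const heap = new Int32Array(4);

// Hypothetical stand-in for the slow-but-instantly-available emterpreted build.
function makeEmterpreted(h) {
  return { kind: "emterpreted", tick() { h[0] = h[0] + 1; return h[0]; } };
}

// Hypothetical stand-in for the optimized asm.js build that arrives later.
function makeAsm(h) {
  return { kind: "asm.js", tick() { h[0] = (h[0] + 1) | 0; return h[0]; } };
}

// The app calls through one level of indirection so the implementation can change.
const app = {
  impl: makeEmterpreted(heap),
  tick() { return this.impl.tick(); },
};

// Callback the page would invoke once the background-compiled code is ready.
function onAsmReady() {
  app.impl = makeAsm(heap); // the hot swap: same heap, faster code
}

// In a browser the callback might be wired up roughly like this
// ("app.asm.js" is a hypothetical file name):
//   const s = document.createElement("script");
//   s.async = true;
//   s.src = "app.asm.js";
//   s.onload = onAsmReady;
//   document.head.appendChild(s);

app.tick();   // runs in the interpreter stand-in
app.tick();
onAsmReady(); // simulate the async script finishing
console.log(app.impl.kind, app.tick()); // asm.js 3
```

Because the counter lives in the shared heap rather than in either implementation, the third call continues from where the first two left off.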

With all these tools in place, we can revisit our first graph. We ran the same benchmark on two new cases: where we hot-swap the Emterpreter with asm.js (yellow line), and where we both hot-swap and use a blacklist of performance-sensitive functions for the Emterpreter (green line).

Hot-swapping lets us reach full peak performance while getting much better startup times. And the blacklist lets us selectively improve speed in the interim, before the optimized code finishes compiling. Note also how the green line is strictly the best until around 600ms: all the others either have not started to execute yet, or are executing much more slowly. That shows the Emterpreter is capable of startup performance even better than the browser can achieve, whether the browser does AOT compilation or not. This is possible because asm.js code is very simple and low-level, and as a result easy to interpret efficiently. It also takes the browser much less time to parse and optimize an interpreter than to parse and optimize all of an application’s code.

These are preliminary results, and we intend to keep experimenting with the Emterpreter to see what we can do with it on real codebases. We encourage you to give it a try and tell us what you learn!
