It’s been a good month or more since we started the current round of chasing space problems in Firefox. Considerable effort has gone into identifying and fixing memory hogs. Although the individual fixes are often excellent, I haven’t had the big picture on how we’re doing.

So today I did some three-way profiling, comparing:

- mozilla-central of today, incorporating essentially all the space fixes to date
- mozilla-central of 1 Nov last year, before this really got going
- 1.9.2 of today, since that’s what we keep getting compared against

These are release builds on x86_64 Linux, using jemalloc, as that’s presumably the least fragmentation-prone allocator we have.

Each run loads 20 cad-comic.com tabs. I let the browser run through 60 billion machine instructions, then stopped it. By around 40 billion instructions it had loaded the tabs completely, and the last 20 billion are essentially idling, intended to give an identifiable steady-state plateau. That plateau ought to indicate the minimum achievable residency, after the cycle collector, the JS garbage collector, the method JIT code thrower-awayer, the image discarder, and any other such mechanisms have done their thing. I regard the plateau as more indicative than the peak of how the browser behaves during a long run.

I profiled using Valgrind’s Massif profiler with the `--pages-as-heap=yes` option. This measures all mapped pages in the process, and so includes the C++ heap, other mmap’d space, and the code, data and bss segments: everything.
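To pull a plateau figure out of such a profile, one can parse the `massif.out.<pid>` file that `valgrind --tool=massif --pages-as-heap=yes` writes and take the smallest total among the snapshots in the idle phase. The following is a minimal sketch, not the procedure actually used for the numbers here. It assumes Massif's plain-text output layout (a `snapshot=N` header per snapshot, followed by `time=`, `mem_heap_B=`, `mem_heap_extra_B=` and `mem_stacks_B=` lines), and the function names `parse_snapshots` and `plateau_minimum` are hypothetical.

```python
import re
from typing import Dict, List, Tuple

# Matches the per-snapshot numeric fields in a massif.out file. "time" is in
# instructions under Massif's default --time-unit=i. With --pages-as-heap=yes,
# mem_heap_B counts every mapped page in the process.
_FIELD = re.compile(r"^(time|mem_heap_B|mem_heap_extra_B|mem_stacks_B)=(\d+)$")
_MEM_FIELDS = ("mem_heap_B", "mem_heap_extra_B", "mem_stacks_B")


def _total(fields: Dict[str, int]) -> Tuple[int, int]:
    """Turn one snapshot's fields into (time, total bytes), summing all memory fields."""
    return fields["time"], sum(fields.get(k, 0) for k in _MEM_FIELDS)


def parse_snapshots(text: str) -> List[Tuple[int, int]]:
    """Return (time, total_bytes) for every snapshot in a massif.out file's text."""
    snapshots: List[Tuple[int, int]] = []
    current: Dict[str, int] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if line.startswith("snapshot="):
            # A new snapshot starts: flush the previous one, if it had a time.
            if "time" in current:
                snapshots.append(_total(current))
            current = {}
            continue
        m = _FIELD.match(line)
        if m:
            current[m.group(1)] = int(m.group(2))
    if "time" in current:
        snapshots.append(_total(current))
    return snapshots


def plateau_minimum(snapshots: List[Tuple[int, int]], start_time: int) -> int:
    """Lowest total among snapshots taken at or after start_time (the idle phase)."""
    idle = [total for t, total in snapshots if t >= start_time]
    if not idle:
        raise ValueError("no snapshots in the idle window")
    return min(idle)
```

For the runs described here, one would call `plateau_minimum(parse_snapshots(text), 40_000_000_000)`, on the assumption that the idle window starts at roughly 40 billion instructions. Massif's own `ms_print` tool remains the easier way to eyeball the graph itself.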

Consequently, a lot of the measured space is the constant overhead of the text, data and bss segments of the many shared objects involved. That cost is the same regardless of the browser’s workload. To quantify it, I did a fourth profile run, loading a single blank page. Subtracting that overhead from each total and dividing by the 20 tabs gives the incremental cost of each cad-comic.com tab.

The summary results of all this are (all numbers in MB):

- Constant overhead: 526
- Total costs: 1.9.2 907, MC-Nov10 1149, MC-now 1077
- Hence incremental per-tab costs are:
  - 1.9.2: 19.0
  - MC-Nov10: 31.1 (63% above 1.9.2)
  - MC-now: 27.5 (45% above 1.9.2)
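The per-tab figures follow from subtracting the blank-page overhead from each total and spreading the remainder over the 20 tabs. Here is a minimal Python sketch of that arithmetic, using only the numbers quoted above; the helper name `per_tab_cost` is hypothetical.

```python
# Figures from the summary above, in MB.
CONSTANT_OVERHEAD_MB = 526  # blank-page run: shared-object text/data/bss and so on
NUM_TABS = 20               # cad-comic.com tabs loaded per run
TOTALS_MB = {"1.9.2": 907, "MC-Nov10": 1149, "MC-now": 1077}


def per_tab_cost(total_mb, overhead_mb=CONSTANT_OVERHEAD_MB, tabs=NUM_TABS):
    """Incremental cost of one tab: remove the fixed overhead, divide the rest by the tab count."""
    return (total_mb - overhead_mb) / tabs


costs = {name: per_tab_cost(total) for name, total in TOTALS_MB.items()}
baseline = costs["1.9.2"]
for name, cost in costs.items():
    # Percentage by which this build's per-tab cost exceeds 1.9.2's.
    excess = 100.0 * (cost / baseline - 1.0)
    print(f"{name:9s} {cost:5.2f} MB/tab  ({excess:+.1f}% vs 1.9.2)")
```

This yields 19.05, 31.15 and 27.55 MB per tab, roughly 63.5% and 44.6% above 1.9.2, which agrees with the summary to within rounding.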

So we’ve made considerable improvements since November. But we’re still worse than 1.9.2. Nick Nethercote tells me that bug 623428 should bring further improvements when it lands.

Here are the top-level visualisations for the three profiles.

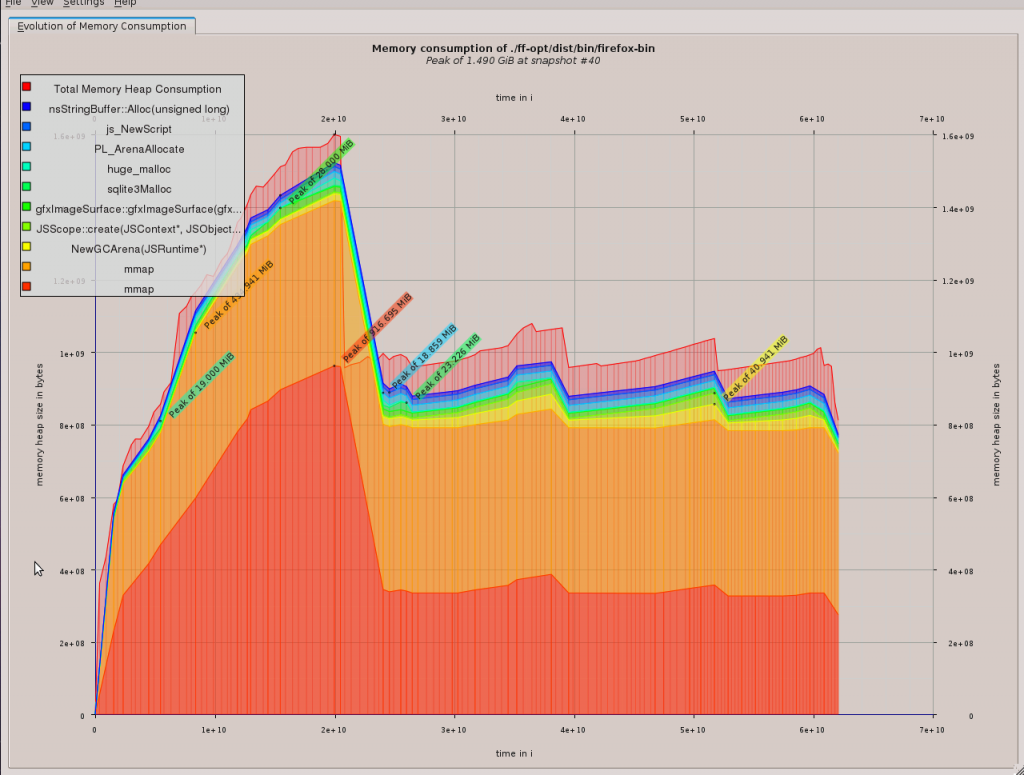

Firstly, 1.9.2 (picture below). What surprised me is the massive peak of around 1.6GB during page load. Once that’s done, it falls back to a series of modest trough-peak variations. I took the steady-state measurement above at the lowest trough, around 54 billion instructions on the horizontal axis.

Also interesting is that steady state is reached before 25 billion instructions. The M-C runs below took longer to get there.

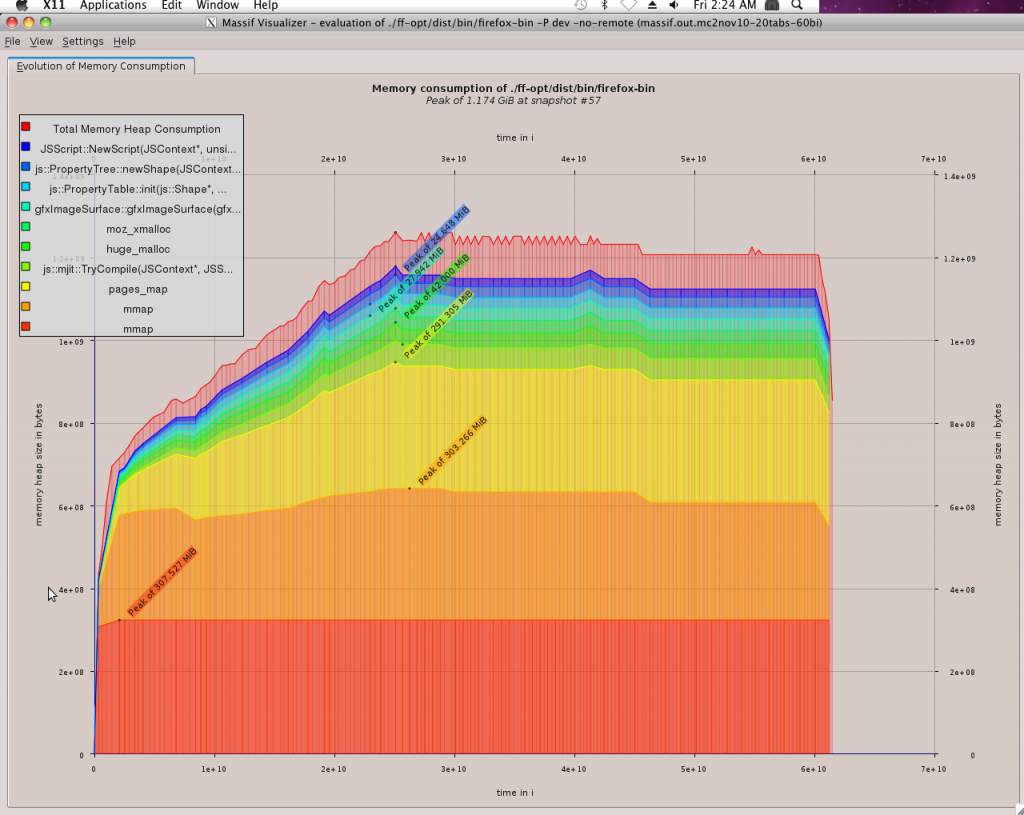

The M-C Nov10 picture (below) is less dramatic. It lacks the 1.6GB peak, instead climbing to pretty much the final level of around 1.2GB and staying there, with a slight decline into steady state at around 44 billion instructions.

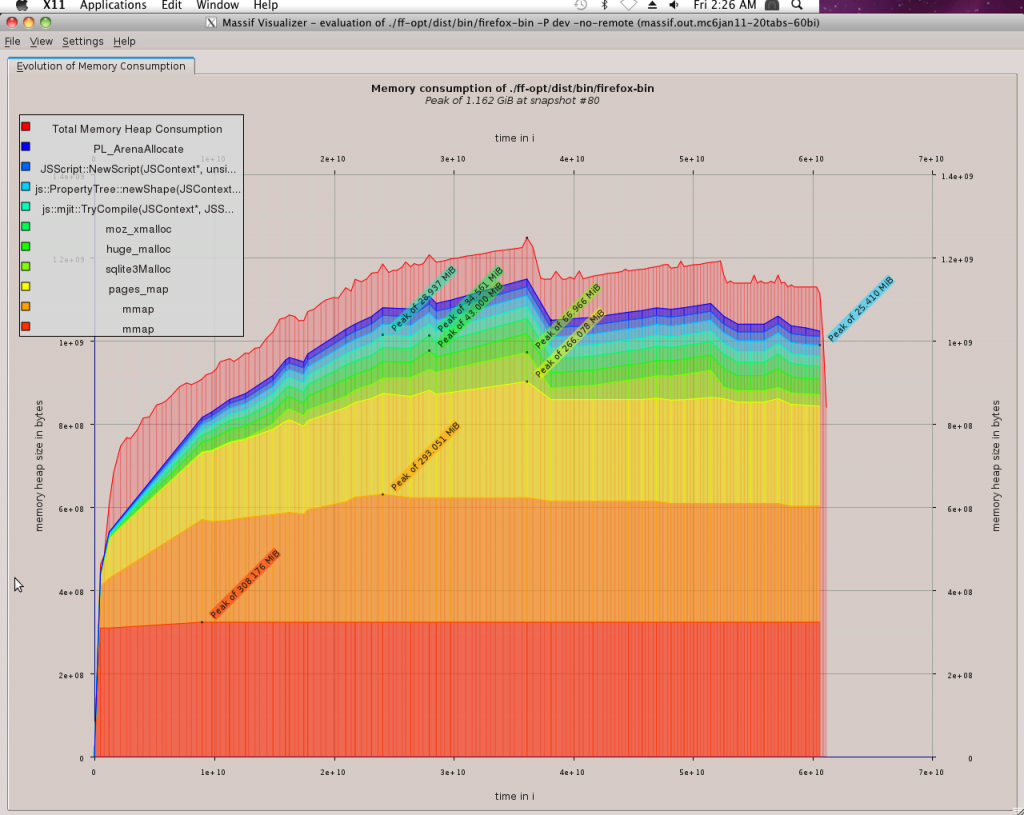

The M-C-of-now picture (below) is similar, although its steady state is less steady, and somewhat lower, reflecting the fixes of the past few weeks. Observe how the orange band steps down slightly in three stages after about 24 billion instructions. I believe that’s Brian Hackett’s code discard patch, bug 617656. Also note the gradual slope up from around 38 billion to 53 billion instructions. That might be the excessively infrequent GC problem investigated in bug 619822.

So what’s with the 1.6GB peak for 1.9.2? It has the interesting effect that, although M-C is worse than 1.9.2 in steady state, M-C has more modest peak requirements, at least for this test case.

On investigation, the spike in 1.9.2 seems to be caused by thread stacks. The implication is that it has more simultaneously live threads than M-C. Why this should be, I don’t know. I did, however, notice that 1.9.2 seems to load all 20 tabs at the same time, whereas M-C appears to pull them in in smaller groups. Related? I don’t know.