One thing I’ve learnt while working for Mozilla is that a web browser can be characterized as a JavaScript execution environment that happens to have some multimedia capabilities. In particular, if you look at Firefox’s about:memory page, the JS engine is very often the component responsible for consuming the most memory.

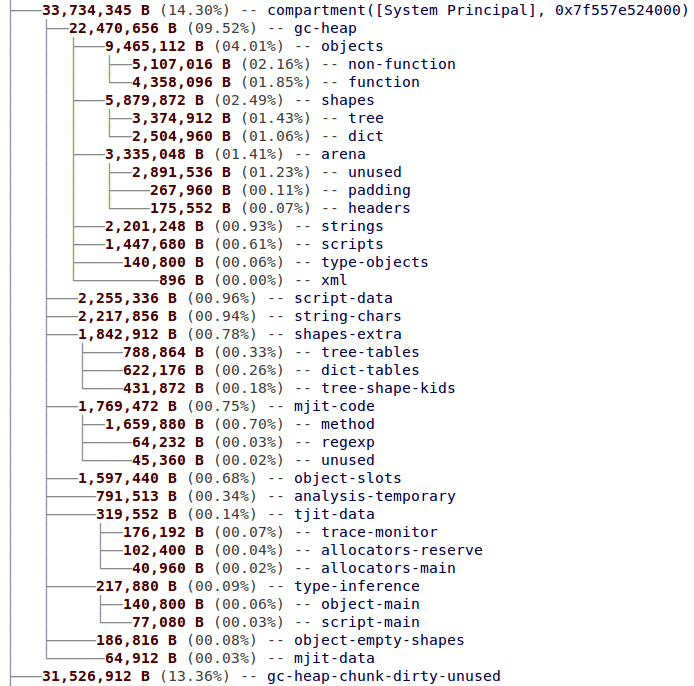

Consider the following snapshot from about:memory of the memory used by a single JavaScript compartment.

(For those of you who have looked at about:memory before, some of those entries may look unfamiliar, because I landed a patch to refine the JS memory reporters late last week.)

There is work underway to reduce many of the entries in that snapshot. SpiderMonkey is on a diet.

Objects

Objects are the primary data structure used in JS programs; after all, it is an object-oriented language. Inside SpiderMonkey, each object is represented by a JSObject, which holds basic information, and possibly a slots array, which holds the object’s properties. The memory consumption for all JSObjects is measured by the “gc-heap/objects/non-function” and “gc-heap/objects/function” entries in about:memory, and the slots arrays are measured by the “object-slots” entries.
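To make the split between these two kinds of entries concrete, here is a minimal C++ sketch. `ToyObject`, `Value` and `MemoryReport` are hypothetical names, not SpiderMonkey's real classes. The layout is only an assumption: each object has a fixed-size header, and its property values (if it has any) live in a separately allocated slots array, so the two are tallied separately.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <vector>

// Hypothetical stand-in for a 64-bit JS value; not SpiderMonkey's real type.
struct Value {
    unsigned long long bits = 0;
};

// A toy object: a fixed-size header, which is what "gc-heap/objects/*" would
// count, plus an optional separately allocated slots array ("object-slots").
struct ToyObject {
    const void* shape = nullptr;     // layout description (unused here)
    std::size_t numSlots = 0;        // how many property values we store
    std::unique_ptr<Value[]> slots;  // the slots array, or null if empty

    explicit ToyObject(std::size_t n)
        : numSlots(n),
          slots(n ? std::make_unique<Value[]>(n) : std::unique_ptr<Value[]>()) {}
};

// Report the two categories separately, mirroring about:memory.
struct MemoryReport {
    std::size_t objects = 0;      // bytes in object headers
    std::size_t objectSlots = 0;  // bytes in slots arrays
};

MemoryReport measure(const std::vector<ToyObject>& objs) {
    MemoryReport r;
    for (const ToyObject& o : objs) {
        assert(o.numSlots == 0 || o.slots != nullptr);
        r.objects += sizeof(ToyObject);
        r.objectSlots += o.numSlots * sizeof(Value);
    }
    return r;
}
```

With one empty object and one four-slot object, `measure` attributes two headers to the objects entry and four values' worth of bytes to the slots entry.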

The size of a non-function JSObject is currently 40 bytes on 32-bit platforms and 72 bytes on 64-bit platforms. Brian Hackett is working to reduce that to 16 bytes and 32 bytes respectively. Function JSObjects are a little larger, being (internally) a sub-class of JSObject called JSFunction. JSFunctions will therefore benefit from the shrinking of JSObject, and Brian is slimming down the function-specific parts as well. In fact, these changes are complete in the JaegerMonkey repository, and will likely be merged into mozilla-central early in the Firefox 11 development period.

As for the slots arrays, they are currently arrays of “fatvals”. A fatval is a 64-bit internal representation that can hold any JS value: number, object, string, whatever. (See here for details, scroll down to “Mozilla’s New JavaScript Value Representation”; the original blog entry is apparently no longer available.) Using 64 bits per entry is overkill if you know, for example, that you have an array consisting entirely of integers that could fit into 32 bits. Luke Wagner and Brian Hackett have been discussing a specialized representation to take advantage of such cases. Variations on this idea have been tried twice before and failed, but perhaps SpiderMonkey’s new type inference support will provide the right infrastructure for it to happen.
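Here is a minimal C++ sketch of why a specialized representation could help. As a simplification, it models a fatval as a 64-bit double; real fatvals also hold objects, strings and so on. The hypothetical `tryPackInt32` checks whether every element is an integer that fits in 32 bits. If so, it produces a packed copy at 4 bytes per element instead of 8. This illustrates the general idea only. It is not the design Luke and Brian have been discussing.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>
#include <limits>
#include <optional>
#include <vector>

// Simplification: a "fat" 64-bit value modelled as a double. A real fatval
// can also hold objects, strings, etc.; this sketch only handles numbers.
using FatVal = double;
static_assert(sizeof(FatVal) == 8, "fatvals are 64 bits");

// If every element is an integer that fits in 32 bits, return a packed copy
// using 4 bytes per element; otherwise return nothing (keep the fat array).
std::optional<std::vector<std::int32_t>> tryPackInt32(const std::vector<FatVal>& vals) {
    constexpr double kMin = std::numeric_limits<std::int32_t>::min();
    constexpr double kMax = std::numeric_limits<std::int32_t>::max();
    std::vector<std::int32_t> packed;
    packed.reserve(vals.size());
    for (FatVal v : vals) {
        if (!(v >= kMin && v <= kMax)) return std::nullopt;      // also rejects NaN
        std::int32_t i = static_cast<std::int32_t>(v);
        if (static_cast<FatVal>(i) != v) return std::nullopt;    // has a fraction
        if (i == 0 && std::signbit(v)) return std::nullopt;      // -0 is not an int32
        packed.push_back(i);
    }
    return packed;
}
```

A real implementation would presumably also need to fall back to fatvals as soon as a non-int32 value is stored into a packed array.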

Shapes

There are a number of data structures within SpiderMonkey dedicated to making object property accesses fast. The most important of these are Shapes. Each Shape corresponds to a particular property that is present in one or more JS objects. Furthermore, Shapes are linked into linear sequences called “shape lineages”, which describe object layouts. Some shape lineages are shared and live in “property trees”. Other shape lineages are unshared and belong to a single JS object; these are “in dictionary mode”.
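Here is a minimal C++ sketch of a shape lineage. The field names are hypothetical, and SpiderMonkey's real Shape has more fields. Each `Shape` records one property name and the slot that holds its value, and points back to the previous property's Shape. An object just points at the newest Shape in its lineage. Two objects whose properties were added in the same order can share one lineage, which is what a property tree makes possible.

```cpp
#include <cassert>
#include <optional>
#include <string>

// Hypothetical sketch of a shape lineage (field names are illustrative, not
// SpiderMonkey's): each Shape describes one property and points back to the
// Shape for the property added before it.
struct Shape {
    std::string name;       // property name
    unsigned slot;          // index into the object's slots array
    const Shape* parent;    // previous property in the lineage, or nullptr
};

// An object only needs a pointer to the newest Shape in its lineage.
// Finding a property means walking back toward the root.
std::optional<unsigned> lookupSlot(const Shape* last, const std::string& name) {
    for (const Shape* s = last; s != nullptr; s = s->parent)
        if (s->name == name) return s->slot;
    return std::nullopt;
}
```

In dictionary mode, an object would instead own a private lineage like this one, unshared with any other object.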

The “shapes/tree” and “shapes/dict” entries in about:memory measure the memory consumption for all Shapes. Shapes of both kinds are the same size; currently they are 40 bytes on 32-bit platforms and 64 bytes on 64-bit platforms. But Brian Hackett has also been taking a hatchet to Shape, reducing it to 24 bytes and 40 bytes respectively. This has required the creation of a new auxiliary BaseShape type, but there should be far fewer BaseShapes than there are Shapes. This change will also increase the number of Shapes, but it should result in a space saving overall.
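To see why adding a BaseShape can still save space, here is a C++ sketch with made-up fields. These are not SpiderMonkey's actual Shape or BaseShape layouts. Fields that are identical across many Shapes move out into a `BaseShape`, and each Shape keeps a single pointer to it. `FatShape`, `SlimShape`, `fatTotal` and `slimTotal` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical "before" layout with made-up fields: every Shape repeats
// fields that are identical across many Shapes.
struct FatShape {
    const void* clasp;        // shared by many Shapes
    const void* parentObj;    // shared by many Shapes
    std::uint32_t flags;      // shared by many Shapes
    std::uint32_t propId;     // specific to this property
    std::uint32_t slot;       // specific to this property
    const FatShape* parent;   // previous Shape in the lineage
};

// "After": the shared fields move into a BaseShape, referenced by pointer.
struct BaseShape {
    const void* clasp;
    const void* parentObj;
    std::uint32_t flags;
};

struct SlimShape {
    const BaseShape* base;    // one BaseShape can serve many SlimShapes
    std::uint32_t propId;
    std::uint32_t slot;
    const SlimShape* parent;
};

// Total bytes used by a population of Shapes under each layout.
std::size_t fatTotal(std::size_t numShapes) {
    return numShapes * sizeof(FatShape);
}

std::size_t slimTotal(std::size_t numShapes, std::size_t numBaseShapes) {
    return numShapes * sizeof(SlimShape) + numBaseShapes * sizeof(BaseShape);
}
```

With invented counts, `slimTotal(1100, 10)` still comes out smaller than `fatTotal(1000)` for these toy layouts on both 32-bit and 64-bit platforms, even though there are 10% more Shapes. The exact sizes depend on pointer width and padding.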

SpiderMonkey often has to search shape lineages, and for lineages that are hot it creates an auxiliary hash table, called a “property table”, that makes lookups faster. The “shapes-extra/tree-tables” and “shapes-extra/dict-tables” entries in about:memory measure these tables. Last Friday I landed a patch that avoids building these tables if they only have a few items in them; in that case a linear search is just as good. This reduced the amount of memory consumed by property tables by about 20%.
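Here is a minimal C++ sketch of that policy. It assumes a hypothetical `Lineage` class with made-up thresholds; SpiderMonkey's real thresholds and hotness heuristics differ. Lookups scan the lineage linearly, newest entry first. A hash table is built only once the lineage has enough entries and has been searched enough times.

```cpp
#include <cassert>
#include <cstddef>
#include <optional>
#include <string>
#include <unordered_map>
#include <utility>
#include <vector>

// Hypothetical sketch: a lineage that is searched linearly while short, and
// only gets an auxiliary hash table ("property table") once it is both long
// enough and hot. Both thresholds are made-up values for illustration.
class Lineage {
public:
    static constexpr std::size_t kMinEntriesForTable = 8;
    static constexpr unsigned kHotSearches = 4;

    void add(std::string name, unsigned slot) {
        entries_.emplace_back(std::move(name), slot);
        if (table_) (*table_)[entries_.back().first] = slot;  // keep table in sync
    }

    std::optional<unsigned> lookup(const std::string& name) {
        if (!table_ && entries_.size() >= kMinEntriesForTable &&
            ++searches_ >= kHotSearches)
            buildTable();
        if (table_) {
            auto it = table_->find(name);
            if (it == table_->end()) return std::nullopt;
            return it->second;
        }
        // Short or cold lineage: a linear scan, newest entry first.
        for (auto it = entries_.rbegin(); it != entries_.rend(); ++it)
            if (it->first == name) return it->second;
        return std::nullopt;
    }

    bool hasTable() const { return table_.has_value(); }

private:
    void buildTable() {
        table_.emplace();
        // Later entries overwrite earlier ones, so the newest entry wins,
        // matching the linear scan above.
        for (const auto& entry : entries_) (*table_)[entry.first] = entry.second;
    }

    std::vector<std::pair<std::string, unsigned>> entries_;
    std::optional<std::unordered_map<std::string, unsigned>> table_;
    unsigned searches_ = 0;
};
```

A short lineage never pays for a table at all, no matter how often it is searched. That is where the memory saving comes from.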

I mentioned that many Shapes are in property trees. These are N-ary trees, but most Shapes in them have zero or one child; only a small fraction have more than that, although the maximum N can be hundreds or even thousands. So there’s a long-standing space optimization where each Shape contains (via a union) a single Shape pointer, which is used if it has zero or one child. If the number of children increases to 2 or more, this is changed into a pointer to a hash table, which contains pointers to the N children. Until recently, if a Shape had a child deleted and that reduced the number of children from 2 to 1, it wouldn’t be converted from the hash form back to the single-pointer form. I changed this last Friday. I also reduced the minimum size of these hash tables from 16 to 4, which saves a lot of space because most of them only have 2 or 3 entries. Together, these two changes reduced the size of the “shapes-extra/tree-shape-kids” entry in about:memory by roughly 30–50%.
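The kids optimization can be sketched in C++ with a `std::variant` standing in for the union. `Kids`, `Shape` and `kMinTableSize` are hypothetical, and `std::unordered_set` is only an analogue of SpiderMonkey's own hash table. The sketch shows three transitions:

- Going from zero to one child stores a plain pointer.
- A second child switches to a small hash set.
- Dropping back to one child converts to the single pointer again and frees the set.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <unordered_set>
#include <variant>

// Minimal placeholder for a property-tree node; only its address matters here.
struct Shape {
    int id;
};

// Hypothetical sketch of a Shape's "kids" field: one child pointer in the
// common case, switching to a hash set once a second child appears, and
// switching back when the count drops to one again.
class Kids {
public:
    using Set = std::unordered_set<Shape*>;

    void insert(Shape* child) {
        if (auto* one = std::get_if<Shape*>(&kids_)) {
            if (*one == nullptr || *one == child) {  // 0 -> 1 child
                *one = child;
                return;
            }
            auto set = std::make_unique<Set>();      // 1 -> 2 children
            set->reserve(kMinTableSize);             // start small
            set->insert(*one);
            set->insert(child);
            kids_ = std::move(set);
            return;
        }
        std::get<std::unique_ptr<Set>>(kids_)->insert(child);
    }

    void remove(Shape* child) {
        if (auto* one = std::get_if<Shape*>(&kids_)) {
            if (*one == child) *one = nullptr;
            return;
        }
        Set& set = *std::get<std::unique_ptr<Set>>(kids_);
        set.erase(child);
        if (set.size() == 1) {                       // 2 -> 1: shrink back
            Shape* last = *set.begin();
            kids_ = last;                            // frees the hash set
        }
    }

    std::size_t count() const {
        if (auto* one = std::get_if<Shape*>(&kids_)) return *one ? 1 : 0;
        return std::get<std::unique_ptr<Set>>(kids_)->size();
    }

    bool isHash() const { return std::holds_alternative<std::unique_ptr<Set>>(kids_); }

private:
    static constexpr std::size_t kMinTableSize = 4;    // illustrative minimum
    std::variant<Shape*, std::unique_ptr<Set>> kids_;  // starts as nullptr
};
```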

Scripts

Internally, a JSScript represents (more or less) the code of a JS function, including things like the internal bytecode that SpiderMonkey generates for it. The memory used by JSScripts is measured by the “gc-heap/scripts” and “script-data” entries in about:memory.

Luke Wagner did some measurements recently that showed that most (70–80%) JSScripts created in the browser are never run. In hindsight, this isn’t so surprising: many websites load libraries like jQuery but only use a fraction of the functions in those libraries. It wouldn’t be easy, but if SpiderMonkey could be changed to generate bytecode for scripts lazily, it could reduce “script-data” memory usage by 60–70%, as well as shaving non-trivial amounts of time off page rendering.
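Lazy bytecode generation could look roughly like the following C++ sketch. It assumes a hypothetical `LazyScript` class and uses a trivial placeholder instead of a real compiler. The script keeps only its source text until the first time it runs, so scripts that never run never pay for bytecode.

```cpp
#include <cassert>
#include <optional>
#include <string>
#include <utility>
#include <vector>

// Hypothetical sketch of lazy bytecode generation: a script keeps only its
// source text until it first runs, then generates its bytecode on demand.
class LazyScript {
public:
    explicit LazyScript(std::string source) : source_(std::move(source)) {}

    // Returns the bytecode, compiling on first use only.
    const std::vector<unsigned char>& bytecode() {
        if (!bytecode_) bytecode_ = compile(source_);
        return *bytecode_;
    }

    bool isCompiled() const { return bytecode_.has_value(); }

private:
    // Placeholder for a real bytecode compiler: one "instruction" per char.
    static std::vector<unsigned char> compile(const std::string& src) {
        return std::vector<unsigned char>(src.begin(), src.end());
    }

    std::string source_;
    std::optional<std::vector<unsigned char>> bytecode_;
};
```

In this sketch the source text is kept around so that the bytecode can be generated later.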

Trace JIT

TraceMonkey is SpiderMonkey’s original JIT compiler, which was introduced in Firefox 3.5. Its memory consumption is measured by the “tjit-*” entries in about:memory.

With the improvements that type inference made to JaegerMonkey, TraceMonkey simply isn’t needed any more. Furthermore, it’s a big hairball that few if any JS team members will be sad to say goodbye to. (js/src/jstracer.cpp alone is over 17,000 lines and over half a megabyte of code!)

TraceMonkey was turned off for web content JS code when type inference landed. And then it was turned off for chrome code. And now it is not even built by default. (The about:memory snapshot above was from a build just before it was turned off.) And it will be removed entirely early in the Firefox 11 development period.

As well as saving memory for trace JIT code and data (including the wasteful ballast hack required to avoid OOM crashes in Nanojit, ugh), removing all that code will significantly shrink the size of Firefox’s code. David Anderson told me the binary of the standalone JS shell is about 0.5MB smaller with the trace JIT removed.

Method JIT

JaegerMonkey is SpiderMonkey’s second JIT compiler, which was introduced in Firefox 4.0. Its memory consumption is measured by the “mjit-code/*” and “mjit-data” entries in about:memory.

JaegerMonkey generates a lot of code. This situation will hopefully improve with the introduction of IonMonkey, which is SpiderMonkey’s third JIT compiler. IonMonkey is still in early development and won’t be integrated for some time, but it should generate code that is not only much faster, but also much smaller.

GC heap

There is a great deal of work being done on the JS garbage collector, by Bill McCloskey, Chris Leary, Terrence Cole, and others. I’ll just point out two long-term goals that should reduce memory consumption significantly.

First, the JS heap currently has a great deal of wasted space due to fragmentation, i.e. intermingling of used and unused memory. Once moving GC — i.e. the ability to move things on the heap — is implemented, it will pave the way for a compacting GC, which is one that can move live things that are intermingled with unused memory into contiguous chunks of memory. This is a challenging goal, especially given Firefox’s high level of interaction between JS and C++ code (because moving C++ objects is not feasible), but one that could result in very large savings, greatly reducing the “gc-heap/arena/unused” and “gc-heap-chunk-*-unused” measurements in about:memory.
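The core of a compacting collector can be sketched in C++ on a toy heap. Everything here is hypothetical and much simplified:

- The heap is a `std::vector<Cell>`.
- Each live cell holds at most one reference, stored as an index.
- There are no roots and no cells that must stay put.

`compact` slides live cells to the front of the heap and records each cell's new address in a forwarding table. It then rewrites references through that table.

```cpp
#include <cassert>
#include <cstddef>
#include <optional>
#include <vector>

// Hypothetical toy heap: each cell is either free or holds a live object
// whose only field is a reference (an index) to another live cell, or none.
struct Cell {
    bool live = false;
    std::optional<std::size_t> ref;   // index of another live cell
};

// Sliding compaction: move live cells to the front of the heap in order,
// rewriting every reference through a forwarding table.
// Returns the number of live cells (the new used prefix of the heap).
std::size_t compact(std::vector<Cell>& heap) {
    std::vector<std::size_t> forward(heap.size());
    std::size_t next = 0;
    for (std::size_t i = 0; i < heap.size(); ++i)
        if (heap[i].live) forward[i] = next++;           // compute new addresses

    for (std::size_t i = 0; i < heap.size(); ++i) {
        if (!heap[i].live) continue;
        Cell moved = heap[i];
        if (moved.ref) moved.ref = forward[*moved.ref];  // fix up the reference
        heap[forward[i]] = moved;   // forward[i] <= i, so no unmoved cell is clobbered
    }
    for (std::size_t i = next; i < heap.size(); ++i) heap[i] = Cell{};
    return next;
}
```

After compaction, all the free space is one contiguous run at the end of the heap instead of being scattered between live cells.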

Second, a moving GC is a prerequisite for a generational GC, which allocates new things in a small chunk of memory called a “nursery”. The nursery is garbage-collected frequently (this is cheap because it’s small), and objects in the nursery that survive a collection are promoted to a “tenured generation”. Generational GC is a win because in practice the majority of things allocated die quickly and are not promoted to the tenured generation. This means the heap will grow more slowly.
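Here is a toy C++ sketch of the nursery idea. It assumes a hypothetical `GenerationalHeap` where objects are plain integer ids, and the caller supplies the set of reachable ids; a real collector would find these by tracing from roots. A minor collection examines only the small nursery, and survivors are copied into the tenured generation. That copying is why a moving GC has to come first.

```cpp
#include <cassert>
#include <cstddef>
#include <unordered_set>
#include <vector>

// Hypothetical toy generational heap. Objects are just integer ids; the
// caller says which nursery objects are still reachable at collection time.
class GenerationalHeap {
public:
    explicit GenerationalHeap(std::size_t nurseryCapacity)
        : capacity_(nurseryCapacity) {}

    // Allocate in the nursery, running a minor GC first if it is full.
    void allocate(int id, const std::unordered_set<int>& reachable) {
        if (nursery_.size() == capacity_) minorGC(reachable);
        nursery_.push_back(id);
    }

    // Minor GC: only the small nursery is scanned. Survivors are promoted
    // to the tenured generation; everything else is discarded.
    void minorGC(const std::unordered_set<int>& reachable) {
        for (int id : nursery_)
            if (reachable.count(id)) tenured_.push_back(id);
        nursery_.clear();
    }

    std::size_t nurserySize() const { return nursery_.size(); }
    std::size_t tenuredSize() const { return tenured_.size(); }

private:
    std::size_t capacity_;
    std::vector<int> nursery_;
    std::vector<int> tenured_;
};
```

Suppose eight objects are allocated with a nursery of four, and only one of them stays reachable. Then just that one object ends up in the tenured generation. The other short-lived objects die without the tenured heap growing.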

Is that all?

It’s all I can think of right now. If I’ve missed anything, please add details in the comments.

There’s an incredible amount of work being done on SpiderMonkey at the moment, and a lot of it will help reduce Firefox’s memory consumption. I can’t wait to see what SpiderMonkey looks like in 6 months!

20 replies on “SpiderMonkey is on a diet”

Great job! Looking forward to more achievements by MemShrink efforts.

This is, without doubt, sensational news.

To all those involved, please accept my thanks for the work completed thus far and immense encouragement to continue the hard work through the next 6 months. For once (and I am incredibly reluctant to say this, given the past), it does seem that the massive effort required from Mozilla to get its memory performance under control and optimized is really happening.

I was reading Webkit’s new dev changes blog and they are moving FAST!

One of their fixes seemed similar to Object Shrinking, but I am not sure if they are the same. Might be worth a look.

https://bugs.webkit.org/show_bug.cgi?id=66161#c0

Peter posts all the changes in WebKit every week, much like the MemShrink report. It may be worth checking whether any of those ideas are possible for Firefox.

http://peter.sh/

Nice to see all the improvements coming along, and lots of big wins. From reading this, it looks like it was the trace JIT that caused all the trouble. I was reading the bug; why is TM disabled and not built in 10, but only removed in 11?

No mention of incremental GC? According to its bug report it is done, but there is still no landing date. And are moving GC and generational GC still far off?

As for IonMonkey, unless the bug reports are out of date, IM hasn’t even passed the design phase yet. Hopefully time is spent on thoughtful design and testing before implementation.

Would JSObject shrink and Shape shrink land in parts or as a whole? Are we expecting Firefox 10 or 11 for that?

Good news. When are IonMonkey results expected to appear on AWFY?

Probably shortly after it’s reached the point where it can run all of Sunspider/V8/Kraken. It’s still got a long way to go before it gets that far.

I forgot to ask: what percentage reduction should we expect once everything is done?

Objects and Shapes account for about 40% of JS memory, so a rough average reduction of 50% there would mean a 20% reduction in JS memory overall.

Moving GC and GGC should save around 15% of memory.

IonMonkey might produce code that is 30% more efficient (just guessing; hopefully IonMonkey can be designed with memory efficiency VERY much in mind and do better than that 😀).

While these numbers don’t simply add up, we could expect a 50% or greater reduction in JS memory usage. And that is without generating bytecode for scripts lazily; with that too, we could see a HUGE reduction in JS memory usage.

Thorough, yet highly readable as always – nice post! 🙂

I just have to say, I really LOVE blog posts like this one. I hope you enjoy writing them and keep doing so, because I really enjoy reading them.

Thanks!

Between 1999 and 2000 the HotSpot Java Virtual Machine did exactly that: generational garbage collection and a dynamic optimizing compiler.

We used to run Pentium III’s with 128 MB of RAM then.

This article prompted me to check what about:memory looks like on my 8.x beta. Funny thing: the tab that uses the most memory is the one I closed before going to work, over 8 hours ago. Pressing the GC/CC/MinMemory buttons doesn’t remove it.

I think before you guys get to the high level re-engineering tasks, maybe the tab closing mechanic needs to get some love 🙂

Sounds like a zombie compartment: please read http://blog.mozilla.org/nnethercote/2011/07/20/zombie-compartments-recognize-and-report-them-stop-the-screaming/ and report it in Bugzilla! Or at the very least, if you can isolate it to a single website please tell me here what that website is.

The AdBlock+ add-on is often the culprit, due to this bug: https://bugzilla.mozilla.org/show_bug.cgi?id=672111 That’s why you can see some pages staying alive even though they were closed ages ago.

Alright, so it looks like both the zombie and AdBlock cases are already covered in Bugzilla, and since they can happen on any page, it doesn’t matter which page it happened on for me (especially since it was one of the stackoverflow.com questions, which is as good as any other, and ads are rotated, so AdBlock behavior won’t be consistent).

Oh well, I got some RAM to spare. And if it means proper AdBlock, and not the laughable imitation Chrome and IE get, I say let it eat its fill.

Nick, dmandelin’s blog post today seemed to indicate that the jm work won’t be landing on m-c until incremental GC is also ready. Seems unlikely for early in the Fx11 cycle?

I just asked about this on IRC, sounds like dmandelin wasn’t quite right — Bill said that he wants GC write barriers, which are close to finished, to land before objshrink, but incremental GC can land afterwards. Bill also said that if GC write barriers take more than 2 weeks he’ll let objshrink land first. So objshrink is still on track to make Fx11, IIUC.

Has Mozilla investigated reordering classes’ member variables to avoid unnecessary struct padding (as reported by gcc -Wpadded compiler warnings)? Or replacing member variables of type size_t (which may be 64 bits on some architectures) with a smaller type like uint32_t?

Somebody wrote a static analysis to find sub-optimal ordering a while back. It worked, but it spat out hundreds of “struct X wasting N bytes” reports, and it couldn’t tell you which of those were actually important, i.e. for which cases many such structs are live at once.

I’ve done some packing by hand for cases that profiling has shown to be important, and others probably have too.

Would it be possible to follow up with a static tool to reorder all of the structs automatically; or would a human still be needed in the loop to update other bits of code to work with the changed structs?

I always wondered why unused JavaScript memory does not go to 0 after garbage collection. You clarified it: GC does not move objects in the heap. Thanks.

Seeing TraceMonkey disappear is sad when you’ve followed all the development of the technology and all the excitement there was when the Mozilla JS team finally made it work. It will still be there in the source code of older versions, but I wonder whether it could still find a use for some specific kinds of JS code.