This was originally a post to the monster thread “Data and commit rules” on dev-planning, which descended from the even bigger thread “Proposing a tree rule change for mozilla-central”. But it’s really an independent proposal, implementable with or without the changes discussed in those threads. It is most like Ehsan’s automated landing proposal but takes a somewhat different approach.

- Create a mozilla-pending tree. All pushes are queued up here. Each gets its own build, but no build starts until the preceding push’s build has completed successfully (its tests don’t need to have succeeded, or even started). “Mostly complete” might be good enough if we have some slow builds.

- Pushers have to watch their own results, though anyone can star on their behalf.

- Any failures are sent to the pusher, via firebot on IRC, email, instant messaging, registered mail, carrier pigeon, trained rat, and psychic medium (in extreme circumstances.)

- When starring, you have to explicitly say whether the result is known-intermittent, questionable, or other. (Other means the push was bad.)

- When any push “finishes” (all expected results have been seen), it is eligible to proceed: if all results are green or starred known-intermittent, its patches are automatically pushed to mozilla-central.

- Any questionable result is automatically retried once, but no matter what the outcome of the new job is, all results still have to be starred as known-intermittent for the push to go to mozilla-central.

- Any bad results (build failures or results starred as failing) cause the push to be automatically backed out and all jobs for later pushes canceled. The push is evicted from the queue, all later pushes are requeued, and the process restarts at the top.

- When all results are in, a completion notification is sent to the pusher with the number of remaining unmarked failures.
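The rules above can be sketched as a small decision function plus a queue walk. This is only an illustration, not an implementation of any real buildbot or tbpl API: the names `Result`, `Verdict`, `decide`, and `advance` are hypothetical, build failures are folded into the `BAD` result, the retry of questionable results is left out, and the sketch assumes pushes land strictly in queue order.

```python
from enum import Enum


class Result(Enum):
    GREEN = "green"
    UNSTARRED = "unstarred failure"        # nobody has classified it yet
    KNOWN_INTERMITTENT = "known-intermittent"
    QUESTIONABLE = "questionable"          # would trigger one automatic retry
    BAD = "bad"                            # build failure, or starred "other"


class Verdict(Enum):
    WAIT = "wait"
    LAND = "land"
    BACK_OUT = "back out"


def decide(results, expected):
    """Classify one push from the results seen so far."""
    if Result.BAD in results:
        return Verdict.BACK_OUT            # no need to wait for the rest
    if len(results) < expected:
        return Verdict.WAIT                # the push has not "finished" yet
    if all(r in (Result.GREEN, Result.KNOWN_INTERMITTENT) for r in results):
        return Verdict.LAND
    return Verdict.WAIT                    # waiting for someone to star


def advance(queue, results, expected):
    """Walk the queue from the front; return (landed, backed_out, still_queued)."""
    landed, backed_out, pending = [], [], list(queue)
    while pending:
        verdict = decide(results.get(pending[0], []), expected)
        if verdict is Verdict.WAIT:
            break
        push = pending.pop(0)
        if verdict is Verdict.LAND:
            landed.append(push)
        else:
            backed_out.append(push)
            for later in pending:          # later jobs are canceled and rerun
                results[later] = []
    return landed, backed_out, pending


results = {
    "Good1": [Result.GREEN, Result.KNOWN_INTERMITTENT],
    "Good2": [Result.KNOWN_INTERMITTENT, Result.GREEN],
    "Bad1": [Result.GREEN, Result.BAD],
    "Good3": [Result.GREEN, Result.GREEN],
    "Good4": [Result.GREEN],
}
landed, backed_out, pending = advance(
    ["Good1", "Good2", "Bad1", "Good3", "Good4"], results, expected=2
)
print(landed, backed_out, pending)
```

With two expected results per push, Good1 and Good2 land, Bad1 is backed out, and Good3 and Good4 stay queued with their earlier results thrown away.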

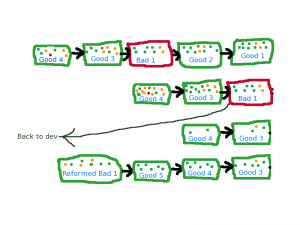

Silly 20-minute Gimped-up example:


- Good1 and Good2 are queued up, followed by a bad push Bad1

- The builds trickle in. Good1 and Good2 both have a pair of intermittent oranges.

- The pusher, or someone, stars the intermittent oranges and Good1 and Good2 are pushed to mozilla-central

- The oranges on Bad1 turn out to be real. They are starred as failures, and the push is rolled back.

- All builds for Good3 and Good4 are discarded. (Notice how they have fewer results in the 3rd line?)

- Good3 gets an unknown orange. The test is retriggered.

- Bad1 gets fixed and pushed back onto the queue.

- Good3’s orange turns out to be intermittent, so it is starred. That is the trigger for landing it on mozilla-central (assuming all jobs are done.)

To deal with needs-clobber, you can set that as a flag on a push when queueing it up. (Possibly on your second try, when you discover that it needs it.)

mozilla-central doesn’t actually need to do builds, since it only gets exact tree versions that have already passed through a full cycle.

On a perf regression, you have to queue up a backout through the same mechanism, and your life kinda sucks for a while and you’ll probably have to be very friendly with the Try server.

Project branch merges go through the same pipeline. I’d be tempted to allow them to jump the queue.

You would normally pull from mozilla-pending only to queue up landings. For development, you’d pull mozilla-central.

Alternatively, mozilla-central would pull directly from the relevant changeset on mozilla-pending, meaning it would get all of the backouts in its history. But then you could use mozilla-pending directly. (You’d be at the mercy of pending failures, which would cause you to rebase on top of the resulting backouts. But that’s not substantially different from the alternative, where you have perf regression-triggered backouts and other people’s changes to contend with.) Upon further reflection, I think I like this better than making mozilla-central’s history artificially clean.

The major danger I see here is that the queue can grow arbitrarily. But you have a collective incentive for everyone in the queue to scrutinize the failures up at the front of the queue, so the length should be self-limiting even if people aren’t watching their own pushes very well. (Which gets harder to do in this model, since you never know when your turn will come up, and you’re guaranteed to have to wait a whole build cycle.)

You’d probably also want a way to step out of the queue when you discover a problem yourself.

Did I just recreate Ehsan’s long-term proposal? No. For one, this one doesn’t depend on fixing the intermittent orange problem first, though it does gain from it. (More good pushes go through without waiting on human intervention.)

But Ehsan’s proposal is sort of like a separate channel into mozilla-central, using the try server and automated merges to detect bit-rotting. This proposal relies on being the only path to mozilla-central, so there’s no opportunity for bitrot.

What’s the justification for this? Well, if you play fast and loose with assumptions, it’s the optimal algorithm for landing a collection of unproven changes. If all changes are good, you trivially get almost the best pipelining of tests (the best would be spawning builds immediately). With a bad change, you have to assume that all results after that point are useless, so you have no new information to use to decide between the remaining changes. There are faster algorithms that would try appending pushes in parallel, but they get more complicated and burn way more infrastructural resources. (Having two mozilla-pendings that merge into one mozilla-mergedpending before feeding into mozilla-central might be vaguely reasonable, but that’s already more than my brain can encompass and would probably make perf regressions suck too hard…)
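To make the pipelining claim concrete, here is a rough back-of-the-envelope comparison for the all-good case. It assumes every build takes the same time, every test run takes the same time, and there are always enough machines to run tests for several pushes at once. None of those numbers come from real infrastructure data, and the function names are made up for this sketch.

```python
def serial_time(n, build, test):
    """Each push waits for the previous push's build AND tests."""
    return n * (build + test)


def pipelined_time(n, build, test):
    """Builds run back to back; each push's tests overlap later builds."""
    return n * build + test


def parallel_time(n, build, test):
    """Every build is spawned immediately (the 'best' case in the text)."""
    return build + test


# Five good pushes, 1-hour builds, 3-hour test runs (illustrative numbers).
print(serial_time(5, 1, 3))     # 20
print(pipelined_time(5, 1, 3))  # 8
print(parallel_time(5, 1, 3))   # 4
```

The pipelined figure stays close to the fully parallel one whenever builds are short relative to tests, which is the sense in which the queue gets “almost the best” pipelining.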

Side question: how many non-intermittent failures happen on Windows PGO builds that would not happen on (faster) Windows non-PGO builds?


So, I have a few comments.

Firstly, besides fixing the intermittent orange problem, what is the benefit of this proposal over mine? We’re currently at an orange factor of about 1.0, and I’m closely watching it to make sure that it doesn’t regress significantly, so solving the intermittent orange problem is not too far away. 🙂

Besides that, do you have any guesses about the amount of resources we would need to make this plan work? Sometimes the only way that we can get testing on mozilla-central is by merging jobs (and I assume that this proposal requires that no jobs be merged), so under this proposal, such a situation might cause the pending queue to grow uncontrollably (because each job needs to wait until the previous job is finished). This is just food for thought; I have no idea whether we can overcome this limitation with a limited set of hardware resources.

I also have a question about the process itself. In the text you’re talking about waiting for the tests for each job to be finished before proceeding with the next one, but the diagram doesn’t show this. And if your plan is what you have laid out in the diagram, the jobs run on Good3 and Good4 could potentially go to waste…

I like the idea of differentiating between known-intermittent, questionable, and “other” (which I would just call “bad”)!

On further reflection, I don’t think this is a significant difference. I could modify my proposal to do your retriggering and only accept if every test type has seen a green, or you could modify yours to allow the developer to star oranges as acceptable and allow through anything with no unstarred failures. Besides, the progress on intermittent oranges is very, very clearly visible on tbpl these days, so I believe you that this won’t matter much longer. (Well, as long as the retrigger logic is there. I don’t know how you’re feeling about the long tail of rare oranges, and there are plenty of infrastructure problems to take over too.)

Fair point. I’ll hand-wave and say that if things get overloaded, the sheriff or pushers can manually select pushes for merging. Does it help to have things in separate pushes if the tests are merged anyway? I’m just trying to figure out if it’s better to merge pushes or have a DONTTEST marker. The result of failures in both cases would be the same; all changes that are new to the failing test run would get backed out.

Turning it around, how does your proposal deal with overload? It seems like the busier things get, the more opportunity there is for bit rot (or its evil cousin, semantic conflict when there’s no syntactic conflict). Also, how does your proposal use any fewer resources than mine? You do a full test run on try for every patch. I do a full test run on mozilla-pending for every push. Your queue isn’t directly visible, so its growth isn’t as obvious, but is it any less bothersome? I guess your mechanism isn’t the only path into the repo, so it doesn’t block people as much, but you’re still bogging down the whole build infrastructure. Or am I misunderstanding?

My diagram sucks. I should’ve drawn up more of a pipeline diagram, since that’s really the point of this.

My intent is to not wait for tests to finish before starting the tests for the next push. Those jobs are indeed wasted if they are behind a failing push, though the jobs get canceled automatically as soon as the failure is detected. I don’t see how to avoid that and still maintain the pipelining. But there shouldn’t be too many of those aborted jobs, and they’ll be on the fast OSes.

The way I’m conceptualizing my proposal is as a pipeline in the computing sense: a series of stages that are overlapped with the stages of subsequent jobs. As long as all jobs succeed, the stages can overlap. (The stages here are just “build” and “test”, though splitting into more, shorter stages would be better.) When a failure is observed, it forces a pipeline flush. Which, by the way, implies that it should really treat each build type (OS x opt/debug) as independent and start building later pushes before all of an earlier push’s builds have finished, in order to detect failures sooner. But that’s tuning.
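A toy timeline of that pipeline, flush included, might look like the following. It is a sketch under loud assumptions: two fixed-length stages, a failure that is only noticed when the bad push’s tests finish (the worst case), a single build type, and no human starring delay. The `schedule` function and its parameters are hypothetical.

```python
def schedule(pushes, bad, build=1, test=3):
    """Return (events, landed) for a build-then-test pipeline.

    pushes: push names in queue order; bad: set of names whose tests fail.
    """
    events, landed = [], []
    clock = 0                      # time at which the next build may start
    queue = list(pushes)
    while queue:
        # Builds run back to back; tests start as each build finishes.
        test_end, t = {}, clock
        for p in queue:
            t += build
            test_end[p] = t + test
        failing = next((p for p in queue if p in bad), None)
        if failing is None:
            events += [(test_end[p], f"land {p}") for p in queue]
            landed += queue
            break
        idx = queue.index(failing)
        for p in queue[:idx]:      # pushes ahead of the failure still land
            events.append((test_end[p], f"land {p}"))
            landed.append(p)
        flush_at = test_end[failing]
        events.append((flush_at, f"back out {failing}, flush pipeline"))
        queue = queue[idx + 1:]    # later pushes are requeued from scratch
        clock = flush_at
    return events, landed


events, landed = schedule(
    ["Good1", "Good2", "Bad1", "Good3", "Good4"], bad={"Bad1"}
)
for when, what in events:
    print(when, what)
```

In this run Good1 and Good2 land at hours 4 and 5, the flush happens at hour 6, and Good3 and Good4 land at hours 10 and 11 after being rebuilt. Detecting the failure earlier, for example from a failed build or from a fast OS, moves the flush earlier and shrinks the wasted work.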