I often like to split patches up into independent pieces, to make review easier for both reviewers and myself. You can split off preparatory refactorings, separate low-level mechanisms from their high-level users, separate features from tests, and so on, which makes it much easier to evaluate the sanity of each piece.

But it’s something of a pain to do. If I’ve been hacking along and accumulated a monster patch, with stock hg and mq I’d do:

hg qref -X '*' # get all the changes in the working directory; only needed if you've been qref'ing along the way

hg qref -I '...pattern...' # put in any touched files

hg qnew temp # stash away the rest so you can edit the patch

hg qpop

hg qpop # go back to unpatched version

emacs $(hg root --mq)/patchname # hack out the pieces you don't want, put them in /tmp/p or somewhere...

hg qpush # reapply just the parts you want

patch -p1 < /tmp/p

... # you get the point. There'll be a qfold somewhere in here...

and on and on. It’s a major pain. I even started working on a web-based patch munging tool because I was doing it so often.

Then I discovered qcrecord, part of the crecord extension. It is teh awesome with a capital T (and A, but this is a family blog). It gives you a mostly-spiffy-but-slightly-clunky curses (textual) interface to select which files to include, and within those files which patch chunks to include, and within those chunks which individual lines to include. That last part, especially, is way cool — it lets you do things that you’d have to be crazy to attempt working with the raw patches, and are a major nuisance with the raw files.

Assuming you are again starting with a huge patch that you’ve been qreffing, the workflow goes something like:

hg qref -X '*'

hg qcrecord my-patch-part1

hg qcrecord my-patch-part2

hg qcrecord my-patch-part3

hg qpop -a

hg qrm original-patchname

hg qpush -a

Way, way nicer. No more dangerous direct edits of patch files. But what’s that messy business about nuking the original patch? Hold that thought.

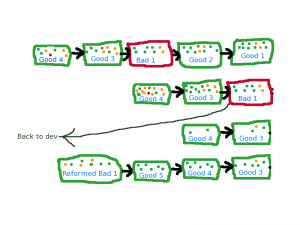

Now that you have a nicely split-up patch series, you’ll be wanting to edit various parts of it. As usual with mq, you qpop or qgoto to the patch you want to hack on, then edit it, and finally qref (qrefresh). But many times you’ll end up putting in some bits and pieces that really belong in the other patches. So if you were working on my-patch-part2 and made some changes that really belong in my-patch-part3, you do something like:

hg qcrecord piece-meant-for-part3 # only select the part intended for part3

hg qnew remaining-updates-for-part2 # make a patch with the rest of the updates, to go into part2

hg qgoto my-patch-part2

hg qpush --move remaining-updates-for-part2 # now we have part2 and its updates adjacent

hg qpop

hg qfold remaining-updates-for-part2 # fold them together, producing a final part2

hg qpush

hg qfold my-patch-part3 # fold in part3 with its updates from the beginning

hg qmv my-patch-part3 # and rename, mangling the comment

or at least, that’s what I generally do. If I were smarter, I would use qcrecord to pick out the remaining updates for part2, making it just:

hg qcrecord more-part2 # select everything intended for part2

hg qnew update-part3 # make a patch with the rest, intended for part3

hg qfold my-patch-part3 # fold to make a final part3

hg qmv my-patch-part3 # ...with the wrong name, so fix and mess up the comment

hg qgoto my-patch-part2

hg qfold more-part2 # and make a final part2

but that’s still a mess. The fundamental problem is that, as great as qcrecord is, it always wants to create a new patch. And you don’t.

Enter qcrefresh. It doesn’t exist in stock crecord, but you can get it by replacing your stock crecord with

hg clone https://sfink@bitbucket.org/sfink/crecord # Obsolete!

Update: it has been merged into the main crecord repo! Use

hg clone https://bitbucket.org/edgimar/crecord

It does the obvious thing — it does the equivalent of a qrefresh, except it uses the crecord interface to select what parts should end up in the current patch. So now the above is:

hg qcref # Keep everything you want for the current patch

hg qnew update-part3

hg qfold my-patch-part3

hg qmv my-patch-part3

Still a little bit of juggling, though I guess you could alias the last three commands in your ~/.hgrc. It would be nice if qfold had a “reverse fold” option.
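One way to tame that juggling is a small shell function instead of an hgrc alias. This is only a sketch: the name qfoldinto, the temporary patch name update-PATCHNAME, and the HG override variable are all made up for illustration and are not part of mq or crecord. It assumes the target patch is still unapplied and that your qnew picks up outstanding working-directory changes, as in the commands above.

```shell
# Hypothetical helper (not part of mq or crecord): fold whatever is left in
# the working directory into an unapplied patch later in the series, keeping
# that patch's name.
# Set HG to another command (e.g. HG=echo) to preview the steps.
qfoldinto() {
    target=$1
    if [ -z "$target" ]; then
        echo "usage: qfoldinto PATCHNAME" >&2
        return 1
    fi
    hg_cmd=${HG:-hg}
    # 1. Capture the leftover changes as a temporary patch.
    "$hg_cmd" qnew "update-$target" &&
    # 2. Fold the target patch into it; qfold removes the folded patch.
    "$hg_cmd" qfold "$target" &&
    # 3. Rename the combined patch back to the target's name.
    "$hg_cmd" qrename "$target"
}
```

After an hg qcref, running qfoldinto my-patch-part3 would stand in for the three commands, and HG=echo qfoldinto my-patch-part3 prints them instead of running them. Like the manual version, it leaves the folded patch's comment in need of a cleanup.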

Finally, when splitting up a large patch you often want to keep the original patch’s name and comment, so you’d really do:

hg qcref # keep just the parts you want in the main patch

hg qcrec my-patch-part2 # make a final part2

hg qcrec my-patch-part3 # make a final part3

And life is good.