Like several other people, I’m going to put my opinions on the whole “Firefox hates enterprise users” kerfuffle here. Why here? Because this way I don’t have to pretend to have read everyone else’s thoughtful and incisive comments on the mailing list thread, and because the conversation isn’t going to move from the mailing list to here, so I can safely jot down my unconsidered opinions and then back away, quickly. (I am in fact not reading every message in the thread. I scan through it once every 1-2 days and look for messages written by a handful of users who tend to say things I find worth hearing.)

The conversation seems to be generating far more heat than light. One thing that strikes me is that some questions are worth considering, and others aren’t. For example: “should Mozilla treat enterprises as a priority?” is not worth considering. It’s nearly meaningless. Consider these answers: “Yes, of course it should! Mozilla cares about everybody!” “No, it costs too much given the small slice of our user base that it represents.” Is anybody happy with either of them? Can anyone figure out what to do based on either conclusion? I can’t.

So I wanted to write out some questions that I think are worth considering. But first, a pet peeve: I’m not going to use the word “enterprise”. It barely means anything as a noun, and means everything and nothing as an adjective (which is worse than meaning nothing, because then at least readers don’t imbue it with whatever meaning it holds in their own heads). Anyway, here goes:

- If corporations abandoned Firefox, what impact would it have on Mozilla’s mission?

Forget for now why they’re abandoning FF. Maybe we decided to heighten security by rendering all HTTP pages in unselectable ROT-13 text, requiring our users to learn to decode them from memory or switch to HTTPS. Maybe we punish bad JS/server-side coding practices by automatically detecting them and posting unencrypted passwords to a public server. Whatever it is, what impact would it have? How many people would not want to use a different browser at home and at work, and what would be the impact on our market share? What are the influence patterns that matter to us (e.g., new-to-the-Web users pick their browser based on what they or their friends know about from work)? How many add-on authors would support multiple platforms? How many would standardize on a single non-Firefox platform? Are corporate users already comfortable with using multiple browsers (e.g., IE6 for the intranet, something else for everything else)?

- What are we actually changing that is relevant to corporate users?

I think the answer is something like: we’re releasing features at a faster cadence. The average feature per unit time metric isn’t intentionally being changed, though MoCo is doing a lot of hiring so that’ll probably ramp up too for reasons other than our release policy. And we’re no longer separating features from fixes.

IM(naive)O, business users care about the first (frequency of features becoming available), but not enough to override other concerns. That’s why long-term support versions exist. Actually, that’s an oversimplification: business users are the same as anyone else, and would much prefer to have the most features the soonest, but those damn IT departments get mad at them when they install the latest version of FirewallBusterSupreme the day before it’s officially released. Forgoing shiny new features is the cost of ensuring stability and predictability, and you never want the scheduling and cost of upgrades to be any more at the whim of your vendor than absolutely necessary. You have a core business function to worry about, unless of course your core business happens to be advising other businesses about the impact of software upgrades. (Which is a sucky business, by the way; it’s another one of those where your customers are pretty much guaranteed to hate you. Your only function is to tell them “no”.) So the key bit really is the separation of features from fixes, or really, of “stuff that is likely to break my users” from “stuff that is relatively safe and likely to keep me closer to the status quo than not having it would be”. An obvious example of the latter is security fixes: the risk from the software change is less than the risk from people starting to exploit some new vulnerability.

- What level of support would make the difference to “enough” corporate users?

- What are the relevant types of support?

If 60 months of long term support for selected versions isn’t enough for an IT department, then nothing will be, so forget about those users. And what does “long term support” mean, anyway? It isn’t a binary distinction. 60 months of “all security fixes we ever make for any version” is obviously unsustainable. I’m not sure “critical security fixes” is adequately complete as a description either. The severity of a security fix leaves out many relevant factors: backwards compatibility, divergence from trunk, maintainability, etc.

- What do various support options cost the Mozilla organization?

Cost, by the way, isn’t just measured in money coming out of MoCo’s pockets. It also covers focus, maintainability, goodwill, brand, freshness, risk, etc.

- What could we do to make it easier for someone (perhaps us) to better support IT department-blessed use?

If we offered up a package (access to sensitive bugs + cash + agreement to support specific components + testing infrastructure + …) to attempt to lure 3rd parties into maintaining older versions “for us”, would anyone bite? (“For us” in quotes because we’re the Mozilla community, and they’d immediately become part of “us” if they accepted.)

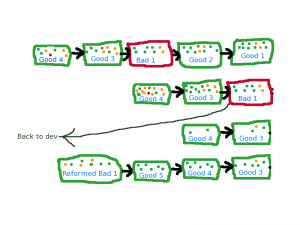

- What is the connection between a rapid release cadence and long term support?

They’re not diametrically opposed, but rapid releases obviously introduce difficulties for long term support. You could do rapid releases without affecting long term support at all, if all you’re talking about is the frequency of releases. But we’re not; we want to release new features quickly. In the gray area are incompatible changes to existing functionality that aren’t critical for moving the Web forward.

- What other groups are affected by the same long term support issues as “enterprise” (sorry) users?

Add-on authors have already been brought up. We at least have a defensible story there (mainly, “use the add-on SDK”.) That community could be usefully subdivided, but who else is affected?

Them’s all the thoughts that’ve leaked out of my brain so far. I’ll try to do a better job of keeping them inside where they belong.