One of the primary goals of JIT engines for dynamic languages is to make high-level code run fast by compiling it into efficient machine code. A key property of this, however, is that the compiled code for any piece of JavaScript must behave exactly the same at runtime as the interpreted code for that JavaScript would have behaved. In a nutshell, providing efficiency while maintaining correctness is the fundamental problem faced by JIT compilers.

This efficiency is tricky to achieve because dynamic languages like JavaScript are highly polymorphic: any variable can hold values of any type, and in the general case, the runtime cannot know ahead of time what type a particular value will have. For example, even if the program will always add two integers together at a particular place in the code, the engine has to allow for the possibility that a non-integer value might unexpectedly show up.

There are two broad techniques which can be used to deal with this challenge. The first is guarding, where the engine generates machine code that checks its assumptions before executing the code that relies on those assumptions. For the adding example above, the engine will generate machine code to do integer addition, but it will make sure to prefix that code with checks which ensure that the two values being added are actually integers. If the checks fail, the machine code jumps to a slowpath that handles the uncommon case in a general way. If the check succeeds, the fastpath (or optimized) machine code is executed. The expectation is that the checks will succeed most of the time, and so the fastpath will be executed most of the time.

While guarding is a useful technique, it imposes a performance penalty. Checking assumptions takes time, and having those checks in hot code can be a significant performance drain. The second technique for emitting efficient code while retaining correctness is invalidation. That’s what I’m going to talk about in this post. I’m going to use SpiderMonkey’s newly added JIT engine, IonMonkey, to illustrate my examples, but aside from the details the overall strategy will apply to most JIT engines.

Invalidation at 10,000 Feet

The key idea behind invalidation is this: instead of making our jitcode check an assumption every time we are about to do something that relies on that assumption, we ask the engine to mark the jitcode as invalid when the assumption becomes false. As long as we take care never to run jitcode that’s been marked invalid, then we can go ahead and leave out the guards we would have otherwise added.

Let’s take a look at a simple example to get started. Here’s a small JavaScript program that computes the sum of some point distances:

function Point(x, y) { this.x = x; this.y = y; } function dist(pt1, pt2) { var xd = pt1.x - pt2.x, yd = pt1.y - pt2.y; return Math.sqrt(xd*xd + yd*yd); } function main() { var totalDist = 0; var origin = new Point(0, 0); for (var i = 0; i < 20000; i++) { totalDist += dist(new Point(i, i), origin); } // The following "eval" is just there to prevent IonMonkey from // compiling main(). Functions containing calls to eval don't // currently get compiled by Ion. eval(""); return totalDist; } main(); |

When this script is run, the dist function is compiled by IonMonkey after roughly 10,000 iterations, generating the following intermediate representation (IR) code:

[1] Parameter 0 => Value // (pt1 value) [2] Parameter 1 => Value // (pt2 value) [3] Unbox [1] => Object // (unbox pt1 to object) [4] Unbox [2] => Object // (unbox pt2 to object) [5] LoadFixedSlot(x) [3] => Int32 // (read pt1.x, unbox to Int32) [6] LoadFixedSlot(x) [4] => Int32 // (read pt2.x, unbox to Int32) [7] Sub [5] [6] => Int32 // xd = (pt1.x - pt2.x) [8] LoadFixedSlot(y) [3] => Int32 // (read pt1.y, unbox to Int32) [9] LoadFixedSlot(y) [4] => Int32 // (read pt2.y, unbox to Int32) [10] Sub [8] [9] => Int32 // yd = (pt1.y - pt2.y) [11] Mul [7] [7] => Int32 // (xd*xd) [12] Mul [10] [10] => Int32 // (yd*yd) [13] Add [11] [12] => Int32 // ((xd*xd) + (yd*yd)) [14] Sqrt [13] => Double // Math.sqrt(...) [15] Return [14]

The above is a cleaned-up version of the actual IR for illustration. For the actual nitty-gritty low-level IR, Click Here.

In instructions [3] and [4], Ion blindly unboxes the argument values into Object pointers without checking to see if they are actually object pointers and not primitives. In instructions [5], [6], [8], and [9], Ion blindly converts the x and y values loaded from the objects to Int32s.

(Note: Unboxing in this case refers to decoding a generic JavaScript value into a raw value of a specific type such as Int32, Double, Object pointer, or Boolean).

If we were only using guards to check our assumptions, the following extra checks would have gone into this code:

- Two type checks: An extra check before each of [3] and [4] to check that the input argument was actually an object pointer before unboxing it.

- Four type checks: Ensuring that pt1.x, pt2.x, pt1.y, and pt2.y were all Int32 values before unboxing them.

Instead, the IR code skips these six extra checks, generating extremely tight code. Normally, we wouldn’t be able to do this because the jitcode would simply be wrong if any of these assumptions were not held. If the function dist is ever called with non-object arguments, the jitcode would crash. If dist was ever called with instances of Point with x and y values that were not Int32s, the jitcode would crash.

To get away with generating this efficient code while maintaining correctness, we must make sure that we never run it after its assumptions become invalid. Accomplishing that requires leveraging SpiderMonkey’s type inference system.

Type Inference

SpiderMonkey’s type inference (TI) dynamically tracks known type information for JavaScript objects and scripts, and how those types flow through different parts of the code. The data structures maintained and updated by TI represent the current type knowledge about the program. By tracking when these structures change, the engine can trigger events whenever particular type assumptions are broken.

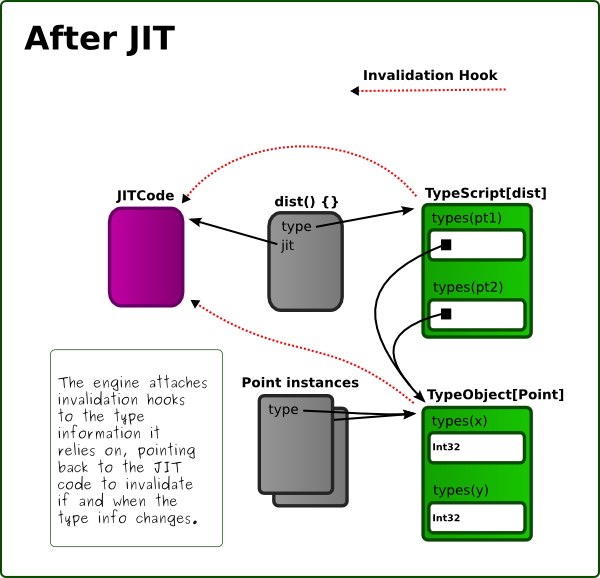

The following is a very simplified diagram of the type model generated for our program as it runs.

The TypeScript at the top keeps track of the type information for the function dist – such as the type sets associated with the first and second arguments (‘pt1’ and ‘pt2’).

The TypeObject diagrammed below keeps track of the type information associated with instances of Point – such as the type sets associated with the fields ‘x’ and ‘y’.

These structures will be updated by TI as needed. For example, if dist is ever called with an object that is not an instance of Point, TI will update the appropriate argument typeset on the TypeScript. Likewise, if Point is ever instantiated with values for ‘x’ or ‘y’ that are not integers, the parameter type sets on the TypeObject will be updated.

When Ion JIT-compiles a function and makes implicit assumptions about types, it adds invalidation hooks to the appropriate type sets, like so:

Whenever new types are added to type sets, the relevant invalidation hook will be triggered, which will cause the jitcode to be marked invalid.

Experiments With Ion

Let’s see if we can experimentally trigger these kinds of invalidations by changing the code above. I’ll be using the standalone JavaScript shell built from the Mozilla sources to run these examples. You can verify these tests yourself by building a debug version of the JS shell. (See the SpiderMonkey build documentation for more information).

First, let’s start easy – and verify that no invalidations occur with the script above:

$ IONFLAGS=osi js-debug points.js [Invalidate] Start invalidation. [Invalidate] No IonScript invalidation.

(ignore the spurious, extra “Start invalidation” in this and subsequent outputs – it’s due to garbage-collection starting, which makes the engine check for potential invalidations caused by the GC. It’s not relevant to this post)

Passing the environment variable IONFLAGS=osi just asks Ion to dump all invalidation related events to its output as it runs. As the output notes, this program causes no invalidations – since no type assumptions are broken after compilation.

Stab 2

For our second try, let’s break the assumption that all arguments to dist are instances of Point, and see what happens. Here is the modified main() function:

... function main() { var totalDist = 0; var origin = new Point(0, 0); for (var i = 0; i < 20000; i++) { totalDist += dist(new Point(i, i), origin); } dist({x:3,y:9}, origin); /** NEW! **/ // The following "eval" is just there to prevent IonMonkey from // compiling main(). Functions containing calls to eval don't // currently get compiled by Ion. eval(""); return totalDist; } ... |

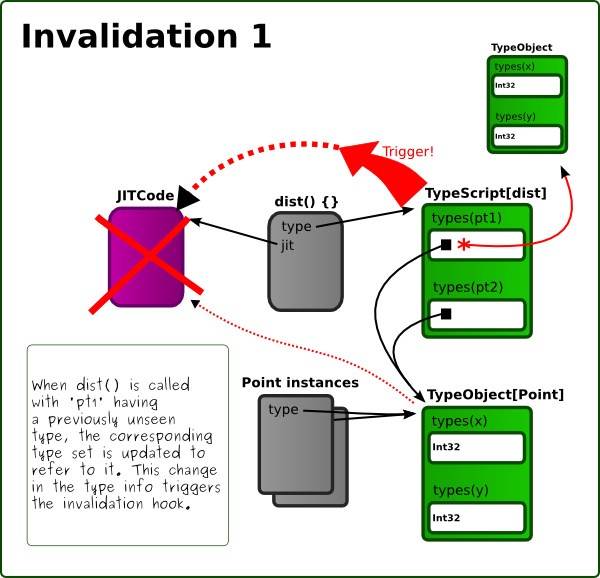

In this program, the loop is going to run and make dist hot enough that it gets compiled with the assumption that its arguments are always going to be instances of Point. Once the loop is completed, we call dist again, but with its first argument being an object which is not an instance of Point, which breaks the assumption made by the jitcode.

$ IONFLAGS=osi js-debug points.js [Invalidate] Start invalidation. [Invalidate] No IonScript invalidation. [Invalidate] Start invalidation. [Invalidate] Invalidate points.js:6, IonScript 0x1f7e430

(once again, the first “Start invalidation” is just caused by GC kicking in, and it’s not relevant to this post.)

Aha! We’ve managed to trigger invalidation of dist (points.js, line 6). Life is good.

The diagram below shows what’s happening. The dist TypeScript’s first-argument-typeset changes, adding a reference to a new TypeObject. This triggers its invalidation hook, and marks the IonScript invalid:

Stab 3

For our third stab, we break the assumption that fields of Point instances always contain Int32 values. Here is the modified main() function:

... function main() { var totalDist = 0; var origin = new Point(0, 0); for (var i = 0; i < 20000; i++) { totalDist += dist(new Point(i, i), origin); } dist(new Point(1.1, 5), origin); /** NEW! **/ // The following "eval" is just there to prevent IonMonkey from // compiling main(). Functions containing calls to eval don't // currently get compiled by Ion. eval(""); return totalDist; } ... |

And the associated output:

$ IONFLAGS=osi js-debug points.js [Invalidate] Start invalidation. [Invalidate] No IonScript invalidation. [Invalidate] Start invalidation. [Invalidate] Invalidate points.js:6, IonScript 0x1f7e430

And the diagram showing how the script is invalidated:

Invalidation vs. Guarding

Invalidation and guarding are two distinct approaches to generating jitcode for any given operation. Within the jitcode for any given function, both techniques may be used, and neither one is strictly better than the other in all cases.

The advantage of invalidation-based optimization is that guards can be omitted, allowing very hot code to execute extremely quickly. However, there are also downsides. When an assumption fails, every piece of jitcode that relies on that assumption must be marked invalid, and prevented from being executed. This means that the function returns to running via the interpreter, until such a time as we compile it again with a new set of assumptions. If we have the bad luck of choosing invalidation-based optimization on an assumption that happens to break, then we’re suddenly stuck with a very high cost.

With guard-based optimization, we sacrifice the speed of the optimize code, but the resulting code is more resilient to changes in assumptions – we don’t have to throw away jitcode when assumptions break. In those cases, the code will just execute a slowpath. If the number of times a function is called with invalid assumptions is small (but greater than zero), then guard-based optimization might be a better choice than invalidation-based optimization.

The question of which optimization strategy to pick when is not always easily answered. Even if an assumption is invalidated occasionally, the jitcode which assumes it may be hot enough to make it worthwhile to use invalidation-based optimization, and just pay the cost of the occasional recompile – the time saved in between will be worth the recompile cost. Alternatively, it may not be worth the cost. Beyond a certain point, deciding between the two approaches becomes a matter of heuristics and tuning.

On-Stack Invalidation

The post so far gives a general idea of the main principles behind invalidations. That said, there is a very important corner case with invalidation that all JIT engines need to take care of.

The corner case is called on-stack invalidation, and it arises because sometimes, the jitcode that becomes invalid is code that’s on the call stack (i.e. it’s one of the callers of the code that’s currently executing). This means that once the current code finishes running, it’s going to execute a machine-level return to jitcode that we can no longer safely execute.

Handling this case requires a bit of juggling, and consideration for a number of subtle errors that may arise. I’ll cover more on that in a follow-up post.

David Corbacho wrote on

:

wrote on

:

kvijayan wrote on

:

wrote on

:

David Corbacho wrote on

:

wrote on

:

Arpad Borsos wrote on

:

wrote on

:

kvijayan wrote on

:

wrote on

:

André wrote on

:

wrote on

:

kvijayan wrote on

:

wrote on

:

fbender wrote on

:

wrote on

:

kvijayan wrote on

:

wrote on

: