(“This Week in Glean” is a series of blog posts that the Glean Team at Mozilla is using to try to communicate better about our work. They could be release notes, documentation, hopes, dreams, or whatever: so long as it is inspired by Glean. You can find an index of all TWiG posts online.)

One of my favorite parts of working on the Telemetry Team is the wide variety of projects I get to contribute to and the things I learn from them. Not every team gets the opportunity to work on so many different projects, in many different languages and on many different platforms. Recently, I enjoyed the luxury of helping to integrate the Firefox Lockwise Android and iOS applications with Glean. Getting to work alongside another team is always fun and this was especially true with the Lockwise Team due to their obvious energy and helpful nature.

After integrating Glean with any new app, one of the most important steps we take is validating that the integration works and that we are getting the data we expect. This lets us ensure that consuming applications can rely on the data Glean provides, and it also helps us find some unusual bugs. This blog post focuses on a couple of the interesting bugs and surprises that I encountered while validating the Glean data coming from the Lockwise mobile apps.

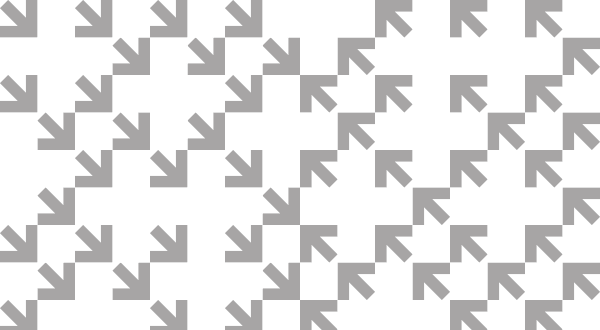

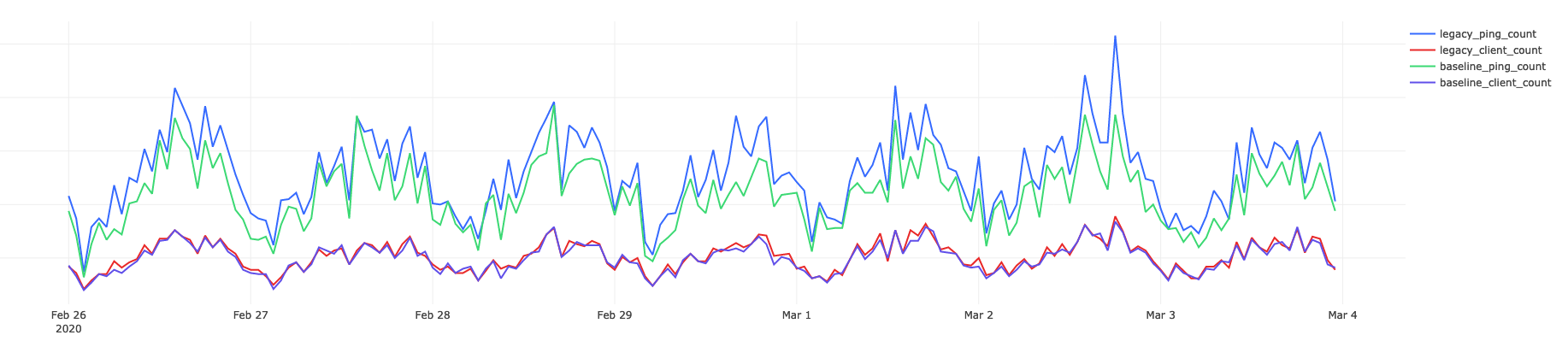

The first interesting (and troubling) thing I discovered while validating the Lockwise on Android integration was that the Android Autofill service doesn't have the same Activity lifecycle as a normal application, because the OS invokes it as a service. Glean relies on certain Activity lifecycle events, and those events weren't generated when the app was invoked for the Autofill service. Since much of Lockwise's usage happens this way, we were seeing far less usage in Glean than in legacy telemetry. Legacy telemetry was sent explicitly by the application, so it wasn't affected by this issue. The problem also affected overall client counts, because Glean was only counting the clients that launched the app, not the ones that only used the Autofill service. In the sample group, this amounted to about 26 times as many legacy pings as baseline pings, and the same trend was visible no matter how the sample was segmented.

Below is an example of how many more pings we were seeing from legacy telemetry (the blue line) than Glean (the green line) for Lockwise on Android.

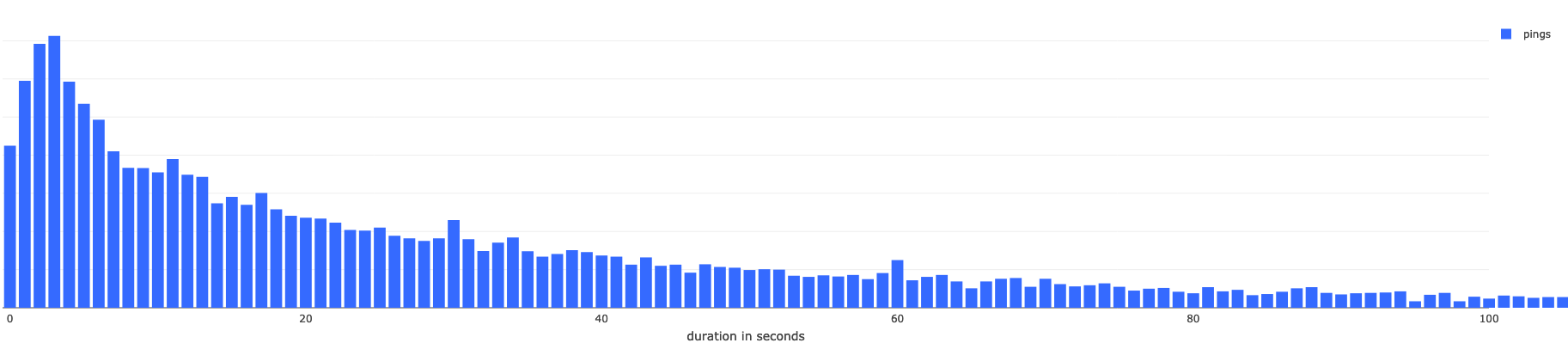
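One simple way to automate this kind of side-by-side check is to compute, per day, the ratio of legacy pings to Glean baseline pings and flag days that stray too far from 1. The sketch below is a minimal illustration assuming the daily counts for each system have already been aggregated for a sample segment; `DailyCounts`, `ping_ratios`, and `flag_divergent` are hypothetical names, not part of Glean or Mozilla's data pipeline, and the 20% tolerance is an arbitrary illustrative threshold.

```python
from dataclasses import dataclass


@dataclass
class DailyCounts:
    """Hypothetical per-day aggregate of ping counts for one sample segment."""
    day: str
    legacy_pings: int
    glean_baseline_pings: int


def ping_ratios(rows):
    """Return {day: legacy / baseline ratio}, skipping days with no Glean pings."""
    ratios = {}
    for row in rows:
        if row.glean_baseline_pings == 0:
            # Avoid dividing by zero; a day with no Glean pings at all
            # is worth investigating separately.
            continue
        ratios[row.day] = row.legacy_pings / row.glean_baseline_pings
    return ratios


def flag_divergent(ratios, tolerance=0.2):
    """Return the sorted days whose ratio differs from 1.0 by more than `tolerance`."""
    return sorted(day for day, ratio in ratios.items() if abs(ratio - 1.0) > tolerance)
```

With made-up counts such as `DailyCounts("day-1", 260, 10)` and `DailyCounts("day-2", 98, 100)`, the first day has a ratio of 26 and is flagged, while the second (0.98) is within tolerance.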

The next surprising thing I ran into was the lack of data in legacy telemetry. It's really hard to compare things when you don't have anything to compare them to. I hit this while trying to compare, side by side, the length of time users had the app open, or more specifically, running in the foreground of the device. Glean data provided a nice distribution of usage that looked like it could offer some insight into how users interact with the app. Unfortunately, legacy telemetry let me down here: every single ping had a duration of zero.

Below is an example of the data I could see through Glean, but for which legacy telemetry had nothing to validate it against.

All of this work was still worthwhile despite the differences, because it exposed things about Glean that we hadn't considered, such as using Glean from a service rather than an application, and how the difference in lifecycles affects ping submission. Through this investigation, I was also able to see firsthand how Glean improves on legacy telemetry simply by providing information that legacy telemetry was missing.

That was how the Android investigation went, and I followed it up with essentially the same investigation for the Lockwise on iOS application. After seeing how many surprises surfaced during the Android investigation, I went into this one with fewer expectations.

Once again, I started by looking at the usage information: the ping counts and client counts from Glean and from legacy telemetry. This turned out much closer to what I had hoped for, with slightly fewer Glean pings than legacy pings, and client counts that tracked very closely for the sample group. It looks like the Autofill service on iOS is handled differently, since we see about the same usage from Glean as from legacy telemetry.

This illustrates that platform differences are an important consideration in how we build and test Glean. Similar functionality on Android and iOS doesn't always mean similar behavior for a cross-platform library like Glean.

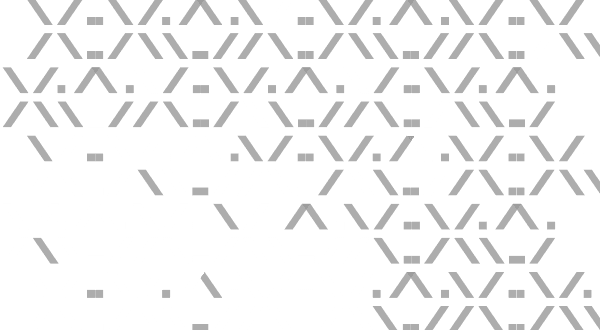

Not everything in the iOS investigation went as well. When I looked at the length of time the app spent in the foreground, Glean had the expected data, but once again legacy telemetry had no duration information. One nice thing I found was that the Lockwise on iOS foreground duration data was comparable to what I saw in the Android investigation, with both platforms showing similar usage. There were still some differences, but since we aren't receiving all of the pings from the Android version, it's hard to compare them properly.

Here's what the foreground duration looks like for Lockwise on iOS:

At times during the investigation I felt like I was comparing apples to oranges, but I learned valuable things and got positive validation of the incoming data. It was a solid reminder that we are still learning about use cases for Glean, and that cross-platform SDKs like Glean must account for differences between platforms. We walk away from this investigation with some action items for improvement and new information about how Glean behaves a little differently across platforms. All in a day's work…