A monkey. That’s the default name each part of the JavaScript engine of Mozilla Firefox gets. Even the full engine has its own monkey name: SpiderMonkey. 2013 has been a transformative year for the monkeys. New species have been born and others have gone extinct. I’ll give a small and incomplete overview of the developments that happened.

Before 2013 JaegerMonkey had established itself as the leader of the pack (i.e. the superior engine in SpiderMonkey) and was the default JIT compiler in the engine. It was successfully introduced in Firefox 4.0 on March 22nd, 2011. Its original purpose was to augment the first JIT monkey, TraceMonkey. Two years later it had kicked TraceMonkey out of the door and was the absolute ruler of monkey land. Along the way it had totally changed. A lot of optimizations had been added, the most important being Type Inference. There were also drawbacks, though. JaegerMonkey wasn’t really designed to host all those optimizations, and it was becoming harder and harder to add new flashy things and easier and easier to introduce faults. JaegerMonkey had always been a worthy monkey but was starting to buckle under its age.

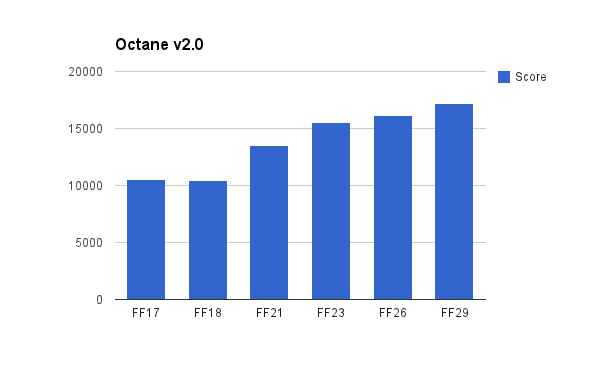

Improvement of Firefox on the Octane benchmark

The year 2013 was only eight days old when, together with the release of Firefox 18, a new bad boy came to town: IonMonkey. It had received an education from the elder monkeys as well as from its competitors, and it inherited the good ideas while trying to avoid the old mistakes. IonMonkey became a textbook compiler with regular optimization passes, adjusted only where needed to work in a JIT environment. I would recommend reading the blog post about the release for more information. Simultaneously, JaegerMonkey was downgraded to a startup JIT that warms up scripts before IonMonkey takes over.

But that wasn’t the only big change. After the release of IonMonkey in Firefox 18, the year 2013 saw the release of Firefox 19, 20, and so on, all the way to 26. Firefox 27, 28 and (partly) 29 were also developed in 2013. All those releases brought their own set of performance improvements:

– Firefox 19 was the second release with IonMonkey enabled. Most work went into improving the new infrastructure of IonMonkey. Another notable improvement was updating Yarr (the regular expression engine imported from JSC) to the newest release.

– Firefox 20 saw range analysis, one of the optimization passes of IonMonkey, refactored. It was improved and augmented with symbolic range analysis. This was also the first release containing the JavaScript self-hosting infrastructure, which allows standard, built-in functions to be implemented in JavaScript instead of C++. These functions get the same treatment as normal functions, including JIT compilation. This helps a lot with removing the overhead of calling between C++ and JavaScript and even allows built-in JS functions to be inlined in the caller.

– Firefox 21 was the first release where off-thread compilation for IonMonkey was enabled. This moves most of the compilation to a background thread, so that the main thread can happily continue executing JavaScript code.

– Firefox 22 saw a big refactoring of how we inline and made it possible to inline a subset of callees at a polymorphic callsite, instead of everything or nothing. A new monkey was also welcomed: OdinMonkey. OdinMonkey acts as an ahead-of-time compilation pass that reuses most of IonMonkey, kicking in for specific scripts that have been declared to conform to the asm.js subset of JavaScript. OdinMonkey showed immediate progress on the Epic Citadel demo. More recently, Google added an asm.js workload to Octane 2.0, where OdinMonkey provides a nice boost.

– Firefox 23 brought another first. The first compiler without a monkey name was released: the Baseline Compiler. It was designed from scratch to take over the role of JaegerMonkey. It is the proper startup JIT that JaegerMonkey was forced to be when IonMonkey was released. There are no recompilations or invalidations in the Baseline Compiler: it only saves type information and makes it easy for IonMonkey to kick in. With this release IonMonkey was also allowed to kick in 10 times earlier. At this point, Type Inference was only needed for IonMonkey. Consequently, major parts of Type Inference were moved and integrated directly into IonMonkey, improving memory usage.

– Firefox 24 added lazy bytecode generation. One of the first steps in JS execution is parsing the functions in a script and creating bytecode for them. (The whole engine consumes bytecode instead of a raw JavaScript string.) With the use of big libraries, a lot of functions are never used, so creating bytecode for all of them adds unnecessary overhead. Lazy bytecode generation allows us to wait until the first execution before fully parsing a function and avoids parsing functions that are never executed.

– Firefox 25 to Firefox 28: No real big performance improvements that stand out. A lot of smaller changes under the hood have landed. Goal: polishing existing features or implementing small improvements. A lot of preparation work went into Exact Rooting. This is needed for more advanced garbage collection algorithms, like Generational GC. Also a lot of DOM improvements were added.

– Firefox 29. Just before 2014, Off-thread MIR Construction landed. Now the whole compilation process in IonMonkey can be run off the main thread. If you have two or more processors, compilation no longer causes delays during execution.
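To make the asm.js subset mentioned under Firefox 22 a bit more concrete, here is a minimal sketch of what such a module can look like. The names `AddModule` and `add` are made up for this illustration and do not come from any real codebase. The `"use asm"` directive declares that the module is meant to conform to asm.js, and the `| 0` coercions act as type annotations for 32-bit integers. Whether and how a given engine compiles it ahead of time is up to that engine; elsewhere it simply runs as ordinary JavaScript.

```javascript
// Hypothetical asm.js-style module; AddModule and add are illustrative names.
function AddModule(stdlib, foreign, heap) {
  "use asm"; // declares that this module is meant to conform to asm.js

  // The `| 0` coercions annotate the parameters and the result as 32-bit ints.
  function add(a, b) {
    a = a | 0;
    b = b | 0;
    return (a + b) | 0;
  }

  // An asm.js module ends by returning the functions it exports.
  return { add: add };
}

// Link the module: a standard library object, foreign imports, and a heap.
// The heap is unused in this tiny example.
const mod = AddModule(globalThis, {}, new ArrayBuffer(0x10000));

console.log(mod.add(2, 3));          // 5
console.log(mod.add(0x7fffffff, 1)); // -2147483648 (wraps like a 32-bit int)
```

Because asm.js is a strict subset of JavaScript, the same code gives the same results in an engine without any asm.js-specific support; it just misses out on the dedicated compilation path.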

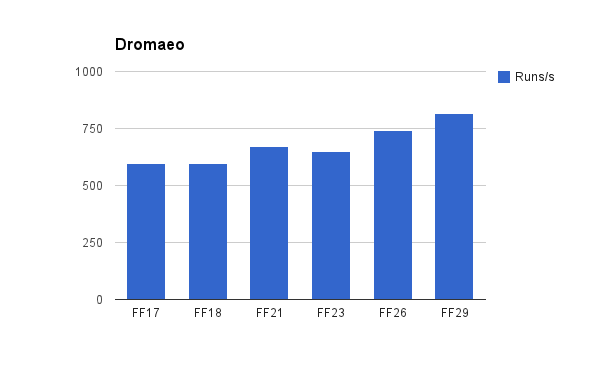

Improvement of Firefox on the Dromaeo benchmark

All these things resulted in improved JavaScript speed. Our score on Octane v1.0 has increased considerably compared to the start of the year, and we are now competitive on the benchmark again. Towards the end of the year, Octane v2.0 was released and we took a small hit, but we were very efficient in finding opportunities to improve our engine, and our score on Octane v2.0 has almost surpassed our Octane v1.0 score. Another example of how much the speed of SpiderMonkey has increased is the score on the Web Browser Grand Prix on Tom’s Hardware. In those reports, Chrome, Firefox, Internet Explorer and Opera are tested on multiple benchmarks, including Browsermark, Peacekeeper, Dromaeo and a dozen others. During 2013, Firefox was in a steady second place behind Chrome. Unexpectedly, the hard work brought us to first place in the Web Browser Grand Prix of June 30th, where Firefox 22 was crowned above Chrome and Opera Next. More important than all these benchmarks are the reports we get about overall improved JavaScript performance, which is very encouraging.

A new year starts, and improving performance is never finished. In 2014 we will try to improve the JavaScript engine even more. The usual fixes and new fast paths continue, as does the higher-level work. One of the biggest changes we will welcome this year is the landing of Generational GC. This should bring big benefits in reducing the long GC pauses and improving heap usage. This has been an enormous task, but we are close to landing. Other expected boosts include improving DOM access even more, possibly a lightweight way to do chunked compilation in the form of loop compilation, different optimization levels for scripts with different hotness, adding a new optimization pass called escape analysis … and possibly much more.

A happy new year from the JavaScript team!
