Rust is a great language, and Mozilla plans to use it extensively in Firefox. However, the Rust compiler (rustc) is quite slow and compile times are a pain point for many Rust users. Recently I’ve been working on improving that. This post covers how I’ve done this, and should be of interest to anybody else who wants to help speed up the Rust compiler. Although I’ve done all this work on Linux it should be mostly applicable to other platforms as well.

Getting the code

The first step is to get the rustc code. First, I fork the main Rust repository on GitHub. Then I make two local clones: a base clone that I won’t modify, which serves as a stable comparison point (rust0), and a second clone where I make my modifications (rust1). I use commands something like this:

user=nnethercote

for r in rust0 rust1 ; do

cd ~/moz

git clone https://github.com/$user/rust $r

cd $r

git remote add upstream https://github.com/rust-lang/rust

git remote set-url origin git@github.com:$user/rust

done

Building the Rust compiler

Within the two repositories, I first configure:

./configure --enable-optimize --enable-debuginfo

I configure with optimizations enabled because that matches release versions of rustc. And I configure with debug info enabled so that I get good information from profilers.

[Update: I now add –enable-llvm-release-debuginfo which builds the LLVM back-end with debug info too.]

Then I build:

RUSTFLAGS='' make -j8

[Update: I previously had -Ccodegen-units=8 in RUSTFLAGS because it speeds up compile times. But Lars Bergstrom informed me that it can slow down the resulting program significantly. I measured and he was right — the resulting rustc was about 5–10% slower. So I’ve stopped using it now.]

That does a full build, which does the following:

- Downloads a stage0 compiler, which will be used to build the stage1 local compiler.

- Builds LLVM, which will become part of the local compilers.

- Builds the stage1 compiler with the stage0 compiler.

- Builds the stage2 compiler with the stage1 compiler.

It can be mind-bending to grok all the stages, especially with regards to how libraries work. (One notable example: the stage1 compiler uses the system allocator, but the stage2 compiler uses jemalloc.) I’ve found that the stage1 and stage2 compilers have similar performance. Therefore, I mostly measure the stage1 compiler because it’s much faster to just build the stage1 compiler, which I do with the following command.

RUSTFLAGS='' make -j8 rustc-stage1

Building the compiler takes a while, which isn’t surprising. What is more surprising is that rebuilding the compiler after a small change also takes a while. That’s because a lot of code gets recompiled after any change. There are two reasons for this.

- Rust’s unit of compilation is the crate. Each crate can consist of multiple files. If you modify a crate, the whole crate must be rebuilt. This isn’t surprising.

- rustc’s dependency checking is very coarse. If you modify a crate, every other crate that depends on it will also be rebuilt, no matter how trivial the modification. This surprised me greatly. For example, any modification to the parser (which is in a crate called libsyntax) causes multiple other crates to be recompiled, a process which takes 6 minutes on my fast desktop machine. Almost any change to the compiler will result in a rebuild that takes at least 2 or 3 minutes.

Incremental compilation should greatly improve the dependency situation, but it’s still in an experimental state and I haven’t tried it yet.

To run all the tests I do this (after a full build):

ulimit -c 0 && make check

The checking aborts if you don’t do the ulimit, because the tests produces lots of core files and it doesn’t want to swamp your disk.

The build system is complex, with lots of options. This command gives a nice overview of some common invocations:

make tips

Basic profiling

The next step is to do some basic profiling. I like to be careful about which rustc I am invoking at any time, especially if there’s a system-wide version installed, so I avoid relying on PATH and instead define some environment variables like this:

export RUSTC01="$HOME/moz/rust0/x86_64-unknown-linux-gnu/stage1/bin/rustc"

export RUSTC02="$HOME/moz/rust0/x86_64-unknown-linux-gnu/stage2/bin/rustc"

export RUSTC11="$HOME/moz/rust1/x86_64-unknown-linux-gnu/stage1/bin/rustc"

export RUSTC12="$HOME/moz/rust1/x86_64-unknown-linux-gnu/stage2/bin/rustc"

In the examples that follow I will use $RUSTC01 as the version of rustc that I invoke.

rustc has the ability to produce some basic stats about the time and memory used by each compiler pass. It is enabled with the -Ztime-passes flag. If you are invoking rustc directly you’d do it like this:

$RUSTC01 -Ztime-passes a.rs

If you are building with Cargo you can instead do this:

RUSTC=$RUSTC01 cargo rustc -- -Ztime-passes

The RUSTC= part tells Cargo you want to use a non-default rustc, and the part after the -- is flags that will be passed to rustc when it builds the final crate. (A bit weird, but useful.)

Here is some sample output from -Ztime-passes:

time: 0.056; rss: 49MB parsing

time: 0.000; rss: 49MB recursion limit

time: 0.000; rss: 49MB crate injection

time: 0.000; rss: 49MB plugin loading

time: 0.000; rss: 49MB plugin registration

time: 0.103; rss: 87MB expansion

time: 0.000; rss: 87MB maybe building test harness

time: 0.002; rss: 87MB maybe creating a macro crate

time: 0.000; rss: 87MB checking for inline asm in case the target doesn't support it

time: 0.005; rss: 87MB complete gated feature checking

time: 0.008; rss: 87MB early lint checks

time: 0.003; rss: 87MB AST validation

time: 0.026; rss: 90MB name resolution

time: 0.019; rss: 103MB lowering ast -> hir

time: 0.004; rss: 105MB indexing hir

time: 0.003; rss: 105MB attribute checking

time: 0.003; rss: 105MB language item collection

time: 0.004; rss: 105MB lifetime resolution

time: 0.000; rss: 105MB looking for entry point

time: 0.000; rss: 105MB looking for plugin registrar

time: 0.015; rss: 109MB region resolution

time: 0.002; rss: 109MB loop checking

time: 0.002; rss: 109MB static item recursion checking

time: 0.060; rss: 109MB compute_incremental_hashes_map

time: 0.000; rss: 109MB load_dep_graph

time: 0.021; rss: 109MB type collecting

time: 0.000; rss: 109MB variance inference

time: 0.038; rss: 113MB coherence checking

time: 0.126; rss: 114MB wf checking

time: 0.219; rss: 118MB item-types checking

time: 1.158; rss: 125MB item-bodies checking

time: 0.000; rss: 125MB drop-impl checking

time: 0.092; rss: 127MB const checking

time: 0.015; rss: 127MB privacy checking

time: 0.002; rss: 127MB stability index

time: 0.011; rss: 127MB intrinsic checking

time: 0.007; rss: 127MB effect checking

time: 0.027; rss: 127MB match checking

time: 0.014; rss: 127MB liveness checking

time: 0.082; rss: 127MB rvalue checking

time: 0.145; rss: 161MB MIR dump

time: 0.015; rss: 161MB SimplifyCfg

time: 0.033; rss: 161MB QualifyAndPromoteConstants

time: 0.034; rss: 161MB TypeckMir

time: 0.001; rss: 161MB SimplifyBranches

time: 0.006; rss: 161MB SimplifyCfg

time: 0.089; rss: 161MB MIR passes

time: 0.202; rss: 161MB borrow checking

time: 0.005; rss: 161MB reachability checking

time: 0.012; rss: 161MB death checking

time: 0.014; rss: 162MB stability checking

time: 0.000; rss: 162MB unused lib feature checking

time: 0.101; rss: 162MB lint checking

time: 0.000; rss: 162MB resolving dependency formats

time: 0.001; rss: 162MB NoLandingPads

time: 0.007; rss: 162MB SimplifyCfg

time: 0.017; rss: 162MB EraseRegions

time: 0.004; rss: 162MB AddCallGuards

time: 0.126; rss: 164MB ElaborateDrops

time: 0.001; rss: 164MB NoLandingPads

time: 0.012; rss: 164MB SimplifyCfg

time: 0.008; rss: 164MB InstCombine

time: 0.003; rss: 164MB Deaggregator

time: 0.001; rss: 164MB CopyPropagation

time: 0.003; rss: 164MB AddCallGuards

time: 0.001; rss: 164MB PreTrans

time: 0.182; rss: 164MB Prepare MIR codegen passes

time: 0.081; rss: 167MB write metadata

time: 0.590; rss: 177MB translation item collection

time: 0.034; rss: 180MB codegen unit partitioning

time: 0.032; rss: 300MB internalize symbols

time: 3.491; rss: 300MB translation

time: 0.000; rss: 300MB assert dep graph

time: 0.000; rss: 300MB serialize dep graph

time: 0.216; rss: 292MB llvm function passes [0]

time: 0.103; rss: 292MB llvm module passes [0]

time: 4.497; rss: 308MB codegen passes [0]

time: 0.004; rss: 308MB codegen passes [0]

time: 5.185; rss: 308MB LLVM passes

time: 0.000; rss: 308MB serialize work products

time: 0.257; rss: 297MB linking

As far as I can tell, the indented passes are sub-passes, and the parent pass is the first non-indented pass afterwards.

More serious profiling

The -Ztime-passes flag gives a good overview, but you really need a profiling tool that gives finer-grained information to get far. I’ve done most of my profiling with two Valgrind tools, Cachegrind and DHAT. I invoke Cachegrind like this:

valgrind \

--tool=cachegrind --cache-sim=no --branch-sim=yes \

--cachegrind-out-file=$OUTFILE $RUSTC01 ...

where $OUTFILE specifies an output filename. I find the instruction counts measured by Cachegrind to be highly useful; the branch simulation results are occasionally useful, and the cache simulation results are almost never useful.

The Cachegrind output looks like this:

--------------------------------------------------------------------------------

Ir

--------------------------------------------------------------------------------

22,153,170,953 PROGRAM TOTALS

--------------------------------------------------------------------------------

Ir file:function

--------------------------------------------------------------------------------

923,519,467 /build/glibc-GKVZIf/glibc-2.23/malloc/malloc.c:_int_malloc

879,700,120 /home/njn/moz/rust0/src/rt/miniz.c:tdefl_compress

629,196,933 /build/glibc-GKVZIf/glibc-2.23/malloc/malloc.c:_int_free

394,687,991 ???:???

379,869,259 /home/njn/moz/rust0/src/libserialize/leb128.rs:serialize::leb128::read_unsigned_leb128

376,921,973 /build/glibc-GKVZIf/glibc-2.23/malloc/malloc.c:malloc

263,083,755 /build/glibc-GKVZIf/glibc-2.23/string/::/sysdeps/x86_64/multiarch/memcpy-avx-unaligned.S:__memcpy_avx_unaligned

257,219,281 /home/njn/moz/rust0/src/libserialize/opaque.rs:<serialize::opaque::Decoder<'a> as serialize::serialize::Decoder>::read_usize

217,838,379 /build/glibc-GKVZIf/glibc-2.23/malloc/malloc.c:free

217,006,132 /home/njn/moz/rust0/src/librustc_back/sha2.rs:rustc_back::sha2::Engine256State::process_block

211,098,567 ???:llvm::SelectionDAG::Combine(llvm::CombineLevel, llvm::AAResults&, llvm::CodeGenOpt::Level)

185,630,213 /home/njn/moz/rust0/src/libcore/hash/sip.rs:<rustc_incremental::calculate_svh::hasher::IchHasher as core::hash::Hasher>::write

171,360,754 /home/njn/moz/rust0/src/librustc_data_structures/fnv.rs:<rustc::ty::subst::Substs<'tcx> as core::hash::Hash>::hash

150,026,054 ???:llvm::SelectionDAGISel::SelectCodeCommon(llvm::SDNode*, unsigned char const*, unsigned int)

Here “Ir” is short for “I-cache reads”, which corresponds to the number of instructions executed. Cachegrind also gives line-by-line annotations of the source code.

The Cachegrind results indicate that malloc and free are usually the two hottest functions in the compiler. So I also use DHAT, which is a malloc profiler that tells you exactly where all your malloc calls are coming from. I invoke DHAT like this:

/home/njn/grind/ws3/vg-in-place \

--tool=exp-dhat --show-top-n=1000 --num-callers=4 \

--sort-by=tot-blocks-allocd $RUSTC01 ... 2> $OUTFILE

I sometimes also use --sort-by=tot-bytes-allocd. DHAT’s output looks like this:

==16425== -------------------- 1 of 1000 --------------------

==16425== max-live: 30,240 in 378 blocks

==16425== tot-alloc: 20,866,160 in 260,827 blocks (avg size 80.00)

==16425== deaths: 260,827, at avg age 113,438 (0.00% of prog lifetime)

==16425== acc-ratios: 0.74 rd, 1.00 wr (15,498,021 b-read, 20,866,160 b-written)

==16425== at 0x4C2BFA6: malloc (vg_replace_malloc.c:299)

==16425== by 0x5AD392B: <syntax::ptr::P<T> as serialize::serialize::Decodable>::decode (heap.rs:59)

==16425== by 0x5AD4456: <core::iter::Map<I, F> as core::iter::iterator::Iterator>::next (serialize.rs:201)

==16425== by 0x5AE2A52: rustc_metadata::decoder::<impl rustc_metadata::cstore::CrateMetadata>::get_attributes (vec.rs:1556)

==16425==

==16425== -------------------- 2 of 1000 --------------------

==16425== max-live: 1,360 in 17 blocks

==16425== tot-alloc: 10,378,160 in 129,727 blocks (avg size 80.00)

==16425== deaths: 129,727, at avg age 11,622 (0.00% of prog lifetime)

==16425== acc-ratios: 0.47 rd, 0.92 wr (4,929,626 b-read, 9,599,798 b-written)

==16425== at 0x4C2BFA6: malloc (vg_replace_malloc.c:299)

==16425== by 0x881136A: <syntax::ptr::P<T> as core::clone::Clone>::clone (heap.rs:59)

==16425== by 0x88233A7: syntax::ext::tt::macro_parser::parse (vec.rs:1105)

==16425== by 0x8812E66: syntax::tokenstream::TokenTree::parse (tokenstream.rs:230)

The “deaths” value here indicate the total number of calls to malloc for each call stack, which is usually the metric of most interest. The “acc-ratios” value can also be interesting, especially if the “rd” value is 0.00, because that indicates the allocated blocks are never read. (See below for example of problems that I found this way.)

For both profilers I also pipe $OUTFILE through eddyb’s rustfilt.sh script which demangles ugly Rust symbols like this:

_$LT$serialize..opaque..Decoder$LT$$u27$a$GT$$u20$as$u20$serialize..serialize..Decoder$GT$::read_usize::h87863ec7f9234810

to something much nicer, like this:

<serialize::opaque::Decoder<'a> as serialize::serialize::Decoder>::read_usize

[Update: native support for Rust demangling recently landed in Valgrind’s repo. I use a trunk version of Valgrind so I no longer need to use rustfilt.sh in combination with Valgrind.]

For programs that use Cargo, sometimes it’s useful to know the exact rustc invocations that Cargo uses. Find out with either of these commands:

RUSTC=$RUSTC01 cargo build -v

RUSTC=$RUSTC01 cargo rust -v

I also have done a decent amount of ad hoc println profiling, where I insert println! calls in hot parts of the code and then I use a script to post-process them. This can be very useful when I want to know exactly how many times particular code paths are hit.

I’ve also tried perf. It works, but I’ve never established much of a rapport with it. YMMV. In general, any profiler that works with C or C++ code should also work with Rust code.

Finding suitable benchmarks

Once you know how you’re going to profile you need some good workloads. You could use the compiler itself, but it’s big and complicated and reasoning about the various stages can be confusing, so I have avoided that myself.

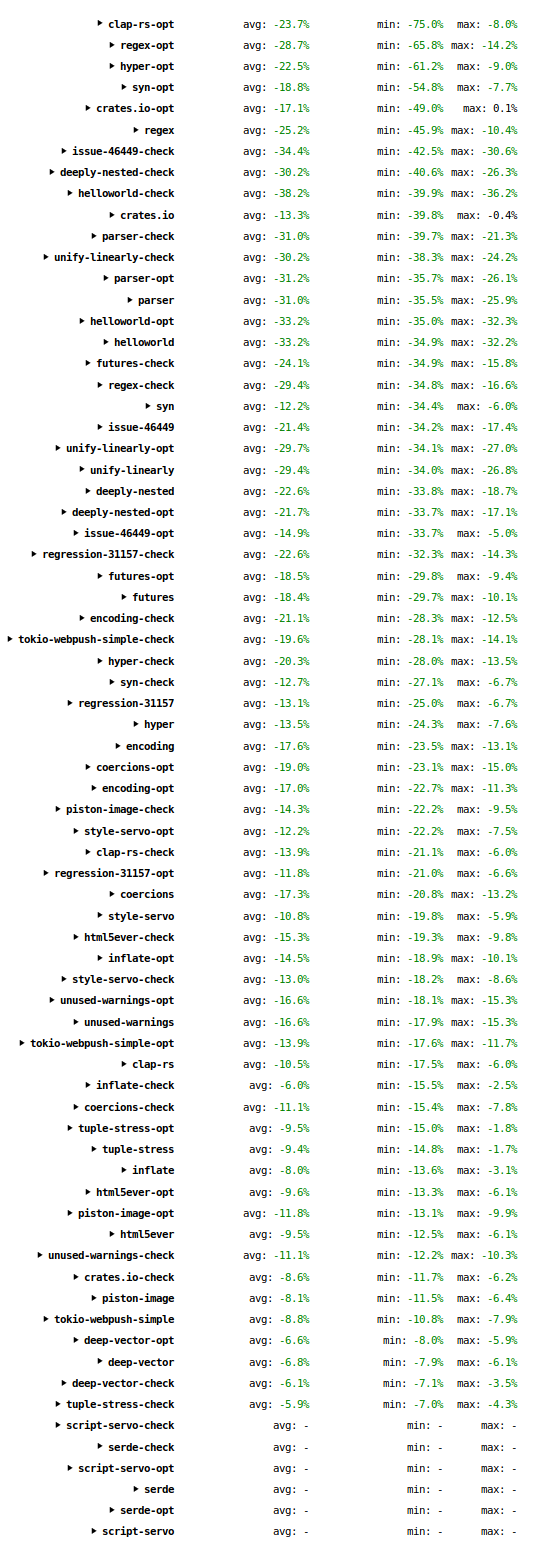

Instead, I have focused entirely on rustc-benchmarks, a pre-existing rustc benchmark suite. It contains 13 benchmarks of various sizes. It has been used to track rustc’s performance at perf.rust-lang.org for some time, but it wasn’t easy to use locally until I wrote a script for that purpose. I invoke it something like this:

./compare.py \

/home/njn/moz/rust0/x86_64-unknown-linux-gnu/stage1/bin/rustc \

/home/njn/moz/rust1/x86_64-unknown-linux-gnu/stage1/bin/rustc

It compares the two given compilers, doing debug builds, on the benchmarks See the next section for example output. If you want to run a subset of the benchmarks you can specify them as additional arguments.

Each benchmark in rustc-benchmarks has a makefile with three targets. See the README for details on these targets, which can be helpful.

Wins

Here are the results if I compare the following two versions of rustc with compare.py.

- The commit just before my first commit (on September 12).

- A commit from October 13.

futures-rs-test 5.028s vs 4.433s --> 1.134x faster (variance: 1.020x, 1.030x)

helloworld 0.283s vs 0.235s --> 1.202x faster (variance: 1.012x, 1.025x)

html5ever-2016- 6.293s vs 5.652s --> 1.113x faster (variance: 1.011x, 1.008x)

hyper.0.5.0 6.182s vs 5.039s --> 1.227x faster (variance: 1.002x, 1.018x)

inflate-0.1.0 5.168s vs 4.935s --> 1.047x faster (variance: 1.001x, 1.002x)

issue-32062-equ 0.457s vs 0.347s --> 1.316x faster (variance: 1.010x, 1.007x)

issue-32278-big 2.046s vs 1.706s --> 1.199x faster (variance: 1.003x, 1.007x)

jld-day15-parse 1.793s vs 1.538s --> 1.166x faster (variance: 1.059x, 1.020x)

piston-image-0. 13.871s vs 11.885s --> 1.167x faster (variance: 1.005x, 1.005x)

regex.0.1.30 2.937s vs 2.516s --> 1.167x faster (variance: 1.010x, 1.002x)

rust-encoding-0 2.414s vs 2.078s --> 1.162x faster (variance: 1.006x, 1.005x)

syntex-0.42.2 36.526s vs 32.373s --> 1.128x faster (variance: 1.003x, 1.004x)

syntex-0.42.2-i 21.500s vs 17.916s --> 1.200x faster (variance: 1.007x, 1.013x)

Not all of the improvement is due to my changes, but I have managed a few nice wins, including the following.

#36592: There is an arena allocator called TypedArena. rustc creates many of these, mostly short-lived. On creation, each arena would allocate a 4096 byte chunk, in preparation for the first arena allocation request. But DHAT’s output showed me that the vast majority of arenas never received such a request! So I made TypedArena lazy — the first chunk is now only allocated when necessary. This reduced the number of calls to malloc greatly, which sped up compilation of several rustc-benchmarks by 2–6%.

#36734: This one was similar. Rust’s HashMap implementation is lazy — it doesn’t allocate any memory for elements until the first one is inserted. This is a good thing because it’s surprisingly common in large programs to create HashMaps that are never used. However, Rust’s HashSet implementation (which is just a layer on top of the HashMap) didn’t have this property, and guess what? rustc also creates large numbers of HashSets that are never used. (Again, DHAT’s output made this obvious.) So I fixed that, which sped up compilation of several rustc-benchmarks by 1–4%. Even better, because this change is to Rust’s stdlib, rather than rustc itself, it will speed up any program that creates HashSets without using them.

#36917: This one involved avoiding some useless data structure manipulation when a particular table was empty. Again, DHAT pointed out a table that was created but never read, which was the clue I needed to identify this improvement. This sped up two benchmarks by 16% and a couple of others by 3–5%.

#37064: This one changed a hot function in serialization code to return a Cow<str> instead of a String, which avoided a lot of allocations.

Future work

Profiles indicate that the following parts of the compiler account for a lot of its runtime.

- malloc and free are still the two hottest functions in most benchmarks. Avoiding heap allocations can be a win.

- Compression is used for crate metadata and LLVM bitcode. (This shows up in profiles under a function called

tdefl_compress.) There is an issue open about this.

- Hash table operations are hot. A lot of this comes from the interning of various values during type checking; see the CtxtInterners type for details.

- Crate metadata decoding is also costly.

- LLVM execution is a big chunk, especially when doing optimized builds. So far I have treated LLVM as a black box and haven’t tried to change it, at least partly because I don’t know how to build it with debug info, which is necessary to get source files and line numbers in profiles. [Update: there is a new –enable-llvm-release-debuginfo configure option that causes LLVM to be build with debug info.]

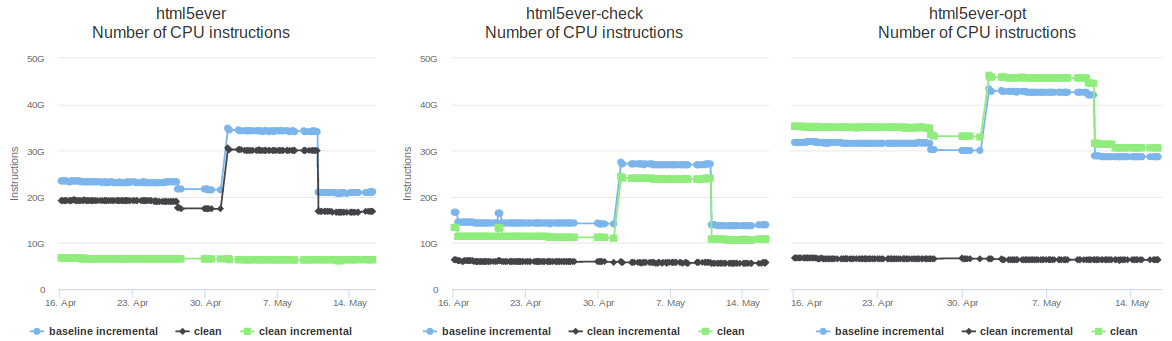

A lot of programs have broadly similar profiles, but occasionally you get an odd one that stresses a different part of the compiler. For example, in rustc-benchmarks, inflate-0.1.0 is dominated by operations involving the (delighfully named) ObligationsForest (see #36993), and html5ever-2016-08-25 is dominated by what I think is macro processing. So it’s worth profiling the compiler on new codebases.

Caveat lector

I’m still a newcomer to Rust development. Although I’ve had lots of help on the #rustc IRC channel — big thanks to eddyb and simulacrum in particular — there may be things I am doing wrong or sub-optimally. Nonetheless, I hope this is a useful starting point for newcomers who want to speed up the Rust compiler.