One issue that’s been on everyone’s mind lately is privacy. Privacy is extremely important to us at Mozilla, but it isn’t exactly clear how Firefox users define privacy. For example, what do Firefox users consider to be essential privacy issues? What features of a browsing experience lead users to consider a browser to be more or less private?

To answer these questions, we asked users to give us their definitions of privacy, specifically privacy while browsing. We assumed that users would have different definitions, but that there would be enough similarity within groups of responses for us to identify “themes” among them. By text mining user responses to an open-ended survey question asking for definitions of browsing privacy, we were able to identify themes directly from the users’ mouths:

- People recognize that tracking and browser history are separate privacy issues, validating the need for browser features that address each of them independently (“do not track” and “private browsing”)

- People’s definitions of personal information vary, but we can group people according to the different ways they refer to personal information (this leads to a natural follow-up question: what makes some information more personal than others?)

- Previous focus group research, contracted by Mozilla, showed that users view spam as a security risk. What didn’t come out of the focus group research was that users also consider spam to be an invasion of their privacy (a follow-up question: what do users define as “spam”? Do they consider targeted ads to be spam?)

- Some users don’t distinguish between privacy and security

Some previous research on browsing and privacy

We knew from our own focus group research that users are concerned about viruses, theft of their personal information and passwords, that a website might misuse their information, that someone may track their online “footprint”, or that their browser history is visible to others. Users view things like targeted ads, spam, browser crashes, popups, and windows imploring them to install updates as security risks.

But it’s difficult to broadly generalize findings from focus groups. One group may or may not have the same concerns as the general population. The quality of the discussion moderator, or some unique combination of participants, the moderator, and/or the setting can also influence the findings you get from focus groups.

One way of validating the representativeness of focus group research is to use surveys. But while surveys may increase the representativeness of your findings, they are not as flexible as focus groups. You have to give survey respondents their answer options up front, and by fixing in advance the options a respondent can endorse, you limit their voice.

A typical way to address this problem in surveys is to use open-ended questions. Before text mining was practical, we had to code each of these responses manually: a first pass over all responses to get an idea of respondent “themes” or “topics”, and a second pass to assign each response to one of those themes. This approach is costly in time and effort, and it also suffers from a reproducibility problem: unless the themes are extremely obvious, different coders might not assign a response to the same theme. With modern text mining methods, we can simulate this coding process much more quickly and reproducibly.
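The reproducibility problem with manual coding is usually measured with an inter-coder agreement statistic. This post does not report one; purely as an illustration, the sketch below computes Cohen's kappa, a standard chance-corrected agreement measure for two coders. The theme labels are hypothetical, not survey data.

```python
from collections import Counter


def cohens_kappa(coder_a, coder_b):
    """Cohen's kappa: agreement between two coders, corrected for chance agreement."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must label the same non-empty set of responses")
    n = len(coder_a)
    # Observed agreement: share of responses both coders put in the same theme.
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Expected agreement if each coder assigned themes at random with their own base rates.
    counts_a = Counter(coder_a)
    counts_b = Counter(coder_b)
    expected = sum(counts_a[t] * counts_b[t] for t in counts_a) / (n * n)
    if expected == 1:
        return 1.0  # both coders used one identical theme for everything
    return (observed - expected) / (1 - expected)


# Hypothetical themes two coders assigned to the same six responses.
coder_a = ["tracking", "history", "personal info", "security", "tracking", "history"]
coder_b = ["tracking", "history", "security", "security", "tracking", "personal info"]
print(round(cohens_kappa(coder_a, coder_b), 3))  # → 0.556
```

A kappa of 1 means perfect agreement and 0 means no better than chance; values well below 1 on real responses would be one concrete sign of the reproducibility problem described above.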

Text mining open-ended survey questions

Text mining is growing in popularity, primarily because it has become computationally feasible, so it’s important to review the methods in some detail. Text mining, like any machine learning-based approach, isn’t magic, and there are a number of caveats about the approach used here. First, the clustering algorithm I chose requires an arbitrary, a priori decision about the number of clusters. I looked at solutions with 4 to 8 clusters and decided that 6 provided the best trade-off between the themes expressed and redundancy among them. Second, clustering has a random component, so clustering the same data twice may not produce exactly the same results. In principle, the themes expressed by one clustering shouldn’t differ tremendously from those of another, but it’s important to keep these details in mind.
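Both caveats can be seen in a small experiment. The post does not name its clustering algorithm, so the sketch below assumes k-means (Lloyd's algorithm) as a stand-in, and uses hypothetical 2-D points in place of the text vectors. It runs every k from 4 to 8 with three random seeds and prints the inertia (total squared distance of points to their cluster centre) of each run.

```python
import random


def squared_distance(p, q):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def kmeans(points, k, seed, iterations=100):
    """Plain k-means (Lloyd's algorithm); `seed` controls the random initial centroids."""
    rng = random.Random(seed)
    # Random initialization: k of the data points become the starting centroids.
    centroids = [list(p) for p in rng.sample(points, k)]
    labels = None
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        new_labels = [min(range(k), key=lambda c: squared_distance(p, centroids[c]))
                      for p in points]
        if new_labels == labels:
            break  # assignments stopped changing, so this run has converged
        labels = new_labels
        # Update step: move each centroid to the mean of its members
        # (an empty cluster keeps its old centroid).
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centroids[c] = [sum(dim) / len(members) for dim in zip(*members)]
    # Inertia: lower means tighter clusters.
    inertia = sum(squared_distance(p, centroids[lab]) for p, lab in zip(points, labels))
    return labels, inertia


# Hypothetical 2-D points standing in for projected survey responses.
data_rng = random.Random(42)
centers = [(0, 0), (5, 5), (0, 5), (5, 0), (10, 2), (2, 10)]
points = [[cx + data_rng.gauss(0, 1), cy + data_rng.gauss(0, 1)]
          for cx, cy in centers for _ in range(10)]

# Try each candidate number of clusters (4 to 8) with three different seeds.
for k in range(4, 9):
    inertias = [round(kmeans(points, k, seed)[1], 1) for seed in range(3)]
    print(f"k={k}: inertia by seed {inertias}")
```

Different seeds can converge to different local optima, which shows up as different inertia values for the same k. Inertia generally falls as k grows, so it cannot pick k on its own; a judgment about themes versus redundancy, like the one described above, is still needed.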

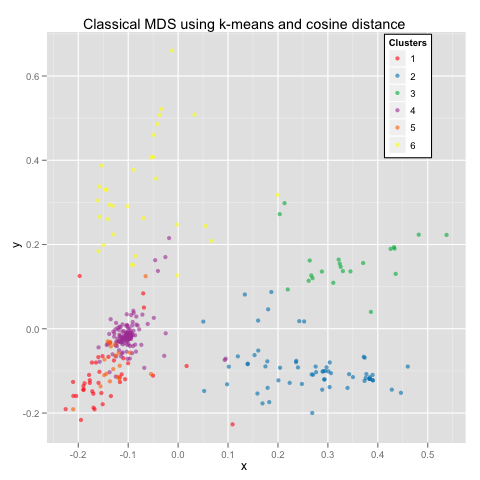

The general idea of text mining is to assume that you can represent documents as “bags of words”, that bags of words can be represented or coded quantitatively, and that the quantitative representation of text can be projected into a multi-dimensional space. For example, I can represent survey responses in two dimensions, where each point is a respondent’s answer. Points that sit close together indicate responses that are, in principle, very similar in lexical content (i.e., in the words they have in common).
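To make the “bag of words” idea concrete, here is a minimal sketch of the simplest quantitative coding, raw word counts, together with cosine similarity as one common measure of how close two responses are. The post does not say which weighting, distance, or projection it used; the stop-word list and the three responses below are hypothetical.

```python
import math
import re
from collections import Counter

# Hypothetical stop-word list; a real analysis would use a fuller one.
STOP_WORDS = {"i", "me", "my", "the", "or", "can", "what", "should", "not"}


def bag_of_words(text):
    """Lowercase a response, split it into word tokens, drop stop words and count the rest."""
    tokens = re.findall(r"[a-z']+", text.lower())
    return Counter(t for t in tokens if t not in STOP_WORDS)


def cosine_similarity(a, b):
    """Cosine of the angle between two word-count vectors: 1.0 = same word mix, 0.0 = no shared words."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


# Invented example responses, not survey data.
responses = [
    "Nobody can track what sites I visit",
    "Websites should not track me or the sites I visit",
    "Nobody can see my browser history",
]
bags = [bag_of_words(r) for r in responses]
print(cosine_similarity(bags[0], bags[1]))  # → 0.75 (shares "track", "sites", "visit")
print(cosine_similarity(bags[0], bags[2]))  # → 0.25 (shares only "nobody")
```

The two tracking responses come out much closer to each other than either is to the history response, which is the kind of lexical proximity that places points near each other in the plot.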

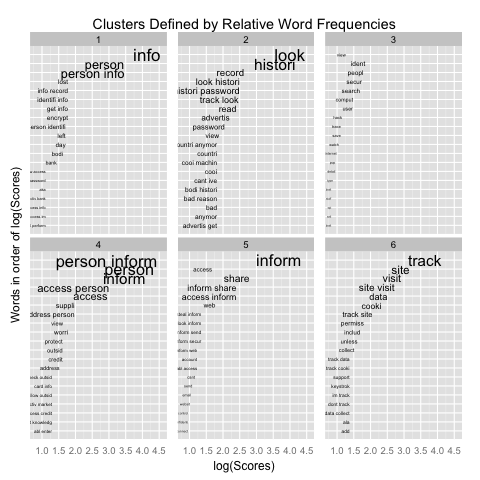

I also calculated a score that identifies the relative frequency of each word in a cluster, which is reflected in the size of the word on each cluster’s graph. In essence, the larger the word, the more it “defines” the cluster (i.e. its location and shape in the space).
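The post does not give the exact formula for this score. A minimal sketch, assuming the score is simply each word's share of all word occurrences within its cluster, might look like the following; the keyword lists, cluster labels, and the `font_size` scaling are hypothetical.

```python
from collections import Counter


def cluster_word_scores(responses, labels):
    """For each cluster, each word's share of all word occurrences in that cluster."""
    counts = {}
    for text, label in zip(responses, labels):
        counts.setdefault(label, Counter()).update(text.lower().split())
    scores = {}
    for label, counter in counts.items():
        total = sum(counter.values())
        scores[label] = {word: n / total for word, n in counter.items()}
    return scores


def font_size(score, max_score, smallest=8, largest=40):
    """Hypothetical linear mapping of a word's score onto a font-size range for plotting."""
    return smallest + (largest - smallest) * (score / max_score)


# Hypothetical pre-tokenized responses and the cluster each was assigned to.
responses = ["track cookies", "track sites visited", "history visible", "history ads"]
labels = [6, 6, 2, 2]

scores = cluster_word_scores(responses, labels)
for label in sorted(scores):
    top_score = max(scores[label].values())
    for word, score in sorted(scores[label].items(), key=lambda kv: kv[1], reverse=True):
        print(label, word, round(score, 2), round(font_size(score, top_score), 1))
```

A variant that divides each within-cluster share by the word's share in the whole corpus would favour words that are distinctive to a cluster rather than merely common; which of these the original analysis used is not stated.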

Higher resolution .pdf files of these graphs can be found here and here.

Cluster summaries

- “Privacy and Personal Information”: Clusters 1, 4, and 5 are, unsurprisingly, dominated by concerns about information. What’s interesting are the finer-grained associations between the clusters and the words. The largest, densest cluster (cluster 4) deals mostly with access to personal information, whereas cluster 1 addresses personal information as it relates to identity issues (such as when banking). Cluster 5 is subtly different from both 1 and 4. The extra emphasis on “share” could imply that users have different expectations of privacy for personal information they explicitly choose to leak onto the web than for personal information they aren’t aware they are revealing. One area for further investigation would be to seek out user definitions of personal information: what makes some information more “personal” than others?

- “Privacy and Tracking”: Cluster 6 clearly shows that people regard being tracked as a privacy issue. The lower-scored words indicate what kinds of tracked information concern them (e.g., keystrokes, cookies, site visits), but in general the notion of “tracking” is paramount to respondents in this cluster. Compare this with cluster 2, which is more strongly defined by the words “look” and “history”, an evident reference to the role browsing history plays in defining privacy. It’s interesting that these clusters are so distinct from each other, because it implies that users are aware of a difference between their browser history and other behaviors they exhibit that could be tracked. It’s also interesting that users who consider browser history a privacy issue also consider advertising and ads (presumably a reference to targeted ads) to be privacy issues. We can use this information to extend the focus group research on targeted ads: in addition to seeing them as a security risk, some users also view targeted ads as an invasion of privacy. One question naturally arises: do users differentiate between spam and targeted advertisements?

- “Privacy and Security”: The most weakly defined group is cluster 3, which can be interpreted in many ways. The least controversial inference is that these users simply don’t have a strong definition of privacy beyond a notion that privacy is related to identity and security. This supports a finding from our focus group research that some users don’t really differentiate between privacy and security.

Final thoughts

User privacy and browser security are very important to us at Mozilla, and developing a product that improves on both requires a deep and evolving understanding of what those words mean to people of all communities – our entire user population. In this post, we’ve shown how text mining can enhance our understanding of pre-existing focus group research and generate novel directions for further research. Moreover, we’ve also shown how it can provide insight into users’ perception by looking at the differences in the language they use to define a concept. In the next post, I’ll be using the same text mining approach to evaluate user definitions of security while browsing the web.
