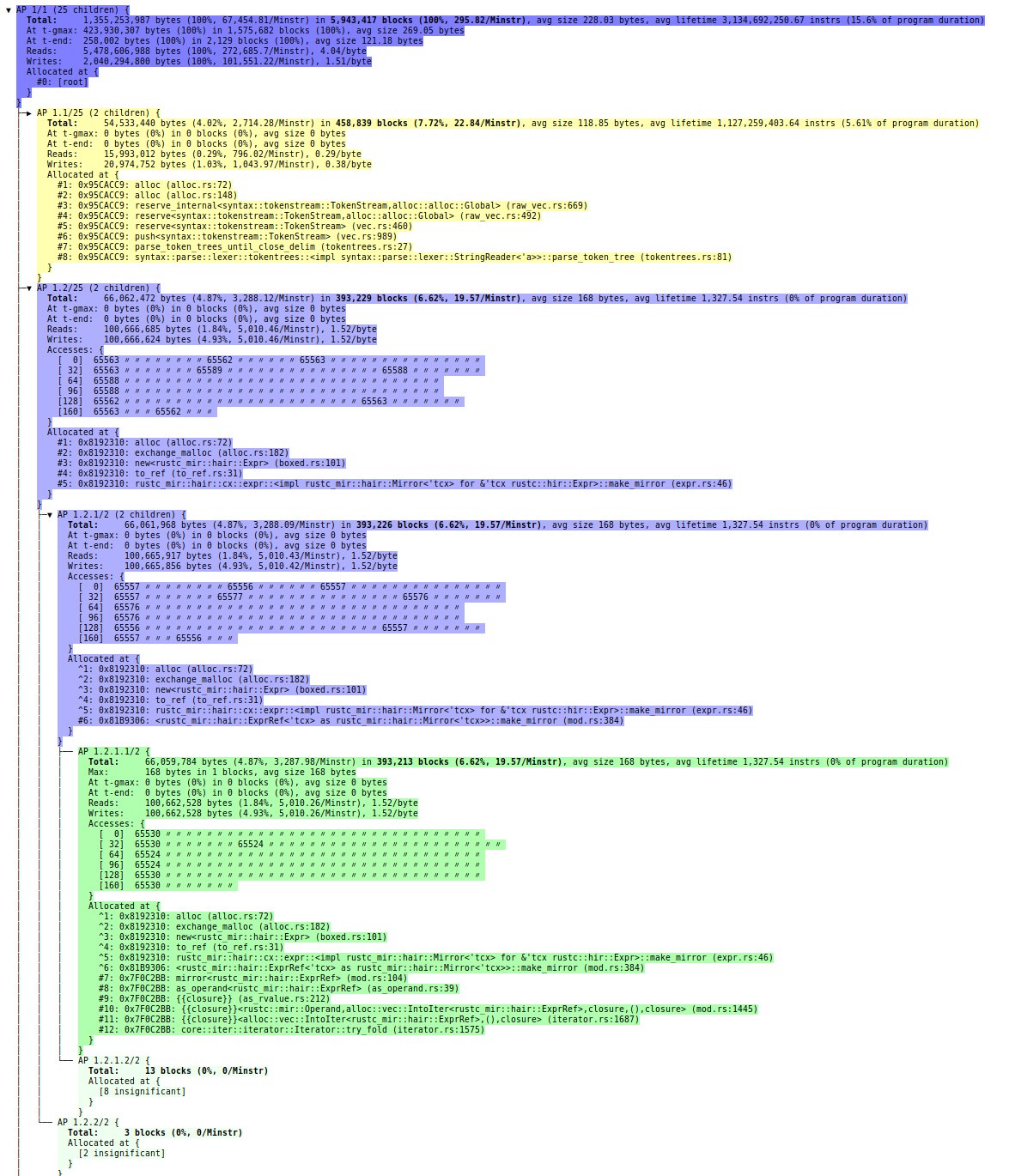

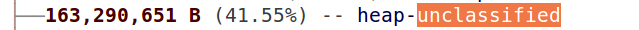

I’ve been working a lot on about:memory lately. It’s a really useful tool, but one frustrating thing about it is that a decent chunk of our memory usage is not covered by any of the existing memory reporters, and so is falling into the “heap-unclassified” bucket.

This bucket typically accounts for 30–45% of about:memory’s “explicit” measurement. We’ve discussed this “dark matter” several times in MemShrink meetings. There’s lots of work underway to add new reporters to uninstrumented parts of Gecko, but I’ve seen cases where it looks like a decent chunk of the missing memory is due to the JS engine, which already appears to be thoroughly instrumented.

This week I realized that some of the dark matter could be due to “slop” from jemalloc, the browser’s heap allocator. What is slop? When you ask a heap allocator for a certain number of bytes, it’ll often give you back more than you asked for, rounding the size up. This wastes some memory, but there are good reasons for it — it makes the heap allocator much faster and simpler, and helps avoid fragmentation when the memory is freed.

The following comment from jemalloc.c shows jemalloc’s size classes. Any request that’s not for one of the listed sizes below is rounded up to the nearest size.

     *   |=====================================|
     *   | Category | Subcategory    |    Size |
     *   |=====================================|
     *   | Small    | Tiny           |       2 |
     *   |          |                |       4 |
     *   |          |                |       8 |
     *   |          |----------------+---------|
     *   |          | Quantum-spaced |      16 |
     *   |          |                |      32 |
     *   |          |                |      48 |
     *   |          |                |     ... |
     *   |          |                |     480 |
     *   |          |                |     496 |
     *   |          |                |     512 |
     *   |          |----------------+---------|
     *   |          | Sub-page       |    1 kB |
     *   |          |                |    2 kB |
     *   |=====================================|
     *   | Large                     |    4 kB |
     *   |                           |    8 kB |
     *   |                           |   12 kB |
     *   |                           |     ... |
     *   |                           | 1012 kB |
     *   |                           | 1016 kB |
     *   |                           | 1020 kB |
     *   |=====================================|
     *   | Huge                      |    1 MB |
     *   |                           |    2 MB |
     *   |                           |    3 MB |
     *   |                           |     ... |
     *   |=====================================|

In extreme cases, jemalloc will return almost double what you asked for. For example, if you ask for 1,025 bytes, it’ll give you 2,048. A lot of the time you have to just live with slop; if you need to heap-allocate an object that’s 680 bytes, jemalloc will give you 1,024 bytes, and you just have to accept the 344 bytes of waste. But if you have some flexibility in your request size, it’s a good idea to pick a size that’s a power-of-two, because that always gives you zero slop. (Remember this, it’s important later on.)
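To make the rounding concrete, here is a minimal Python sketch that models the size-class table above. It is only a model built from that comment, not jemalloc's actual code, and the names `allocated_size` and `slop` are made up for illustration.

```python
KB = 1024
MB = 1024 * KB
QUANTUM = 16     # spacing of the quantum-spaced classes in the table
PAGE = 4 * KB    # spacing of the large classes in the table


def round_up(n, multiple):
    """Round n up to the nearest multiple of `multiple`."""
    return -(-n // multiple) * multiple


def allocated_size(requested):
    """Model of the size class a request lands in, per the table above."""
    if requested < 1:
        raise ValueError("request must be at least 1 byte")
    if requested <= 8:
        # Tiny: 2, 4 or 8 bytes (next power of two, minimum 2).
        return max(2, 1 << (requested - 1).bit_length())
    if requested <= 512:
        # Quantum-spaced: multiples of 16 up to 512.
        return round_up(requested, QUANTUM)
    if requested <= 2 * KB:
        # Sub-page: 1 kB or 2 kB.
        return 1 * KB if requested <= 1 * KB else 2 * KB
    if requested <= 1020 * KB:
        # Large: multiples of 4 kB up to 1020 kB.
        return round_up(requested, PAGE)
    # Huge: multiples of 1 MB.
    return round_up(requested, MB)


def slop(requested):
    """Bytes handed back beyond what was asked for."""
    return allocated_size(requested) - requested
```

With this model, `slop(680)` is 344 and `allocated_size(1025)` is 2,048, matching the examples above, while any power-of-two request from 2 bytes up to the listed sizes has zero slop.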

So I instrumented jemalloc to print out an entry for every heap allocation, showing the requested amount, the actual allocated amount, and the resulting slop. I then started up Firefox, opened Gmail, then shut down Firefox. Next, I ran the resulting log through a wonderful little concordance-like script I have called “counts” which analyzes a file and tells you how many times each distinct line occurs. Here are the top ten lines of the output:

    909062 numbers:
    ( 1 )   205920 (22.7%, 22.7%): small:     24 ->     32 (     8 )
    ( 2 )    66162 ( 7.3%, 29.9%): small:     72 ->     80 (     8 )
    ( 3 )    61772 ( 6.8%, 36.7%): small:     40 ->     48 (     8 )
    ( 4 )    54386 ( 6.0%, 42.7%): small:   1056 ->   2048 (   992 )
    ( 5 )    48501 ( 5.3%, 48.0%): small:     18 ->     32 (    14 )
    ( 6 )    47668 ( 5.2%, 53.3%): small:     15 ->     16 (     1 )
    ( 7 )    24938 ( 2.7%, 56.0%): large:   4095 ->   4096 (     1 )
    ( 8 )    24278 ( 2.7%, 58.7%): small:     56 ->     64 (     8 )
    ( 9 )    13064 ( 1.4%, 60.1%): small:    104 ->    112 (     8 )
    (10 )    12852 ( 1.4%, 61.6%): small:    136 ->    144 (     8 )

There were 909,062 lines, which means there were 909,062 allocations. (I didn’t print information about frees.) The most common line was “small: 24 -> 32 ( 8 )” which occurred 205,920 times, accounting for 22.7% of all the lines in the file. The second percentage on each line is the running cumulative total. The “small” refers to jemalloc’s size class categories (see above), and every allocation request of 24 bytes was rounded up to 32 bytes, resulting in 8 bytes of slop.
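The “counts” script itself isn’t shown here, so the following is only a guess at how such a tally could be done in Python. The function names and the exact output layout are assumptions, not the real script.

```python
from collections import Counter


def counts(lines):
    """Tally distinct lines; return (total, rows), most frequent first.

    Each row is (rank, count, percent, cumulative_percent, line).
    """
    tally = Counter(line.rstrip("\n") for line in lines)
    total = sum(tally.values())
    rows = []
    cumulative = 0
    for rank, (line, n) in enumerate(tally.most_common(), start=1):
        cumulative += n
        rows.append((rank, n, 100.0 * n / total,
                     100.0 * cumulative / total, line))
    return total, rows


def format_counts(total, rows, limit=10):
    """Render the top `limit` rows in a layout similar to the output above."""
    out = [f"{total} lines:"]
    for rank, n, pct, cum, line in rows[:limit]:
        out.append(f"({rank:2d} ) {n:8d} ({pct:4.1f}%, {cum:4.1f}%): {line}")
    return "\n".join(out)
```

Feeding it the allocation log would look like `total, rows = counts(open("malloc.log"))` followed by `print(format_counts(total, rows))`, where `malloc.log` is a hypothetical file name.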

Looking through the list, I saw a case where 1,048,578 (2^20+2) bytes had been requested, and jemalloc had returned 2,097,152 (2^21) bytes. That’s a huge (1MB) waste, and it also smelled very fishy. And on further inspection there were a lot of smaller but still suspicious cases where a number slightly larger than a power-of-two was being rounded up to the next power-of-two: 1,032 to 2,048; 1,056 to 2,048; 2,087 to 4,096; 4,135 to 8,192, etc.
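A check for “slightly larger than a power-of-two” could look something like the sketch below. The thresholds (`MIN_SIZE` and `MAX_EXCESS_FRACTION`) are arbitrary values picked for illustration, and this is not the detection code that was actually added to jemalloc.

```python
MIN_SIZE = 1024            # assumed: ignore small requests, where slop is cheap
MAX_EXCESS_FRACTION = 8    # assumed: "slightly over" means within 1/8 of the base


def is_suspicious(requested):
    """True if `requested` is just above a power of two (heuristic sketch)."""
    if requested < MIN_SIZE:
        return False
    # Largest power of two that is <= requested.
    base = 1 << (requested.bit_length() - 1)
    excess = requested - base
    return 0 < excess <= base // MAX_EXCESS_FRACTION
```

All of the sizes listed above (1,032; 1,056; 2,087; 4,135; 1,048,578) are flagged by this check, while exact powers of two and requests like 4,095 are not.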

I investigated a number of these by adding some code to jemalloc to detect these suspicious request sizes, and then using Valgrind to print out a stack trace every time one occurred. What I found was four distinct places in the codebase where the code in question had flexibility in the amount of memory it allocated, and so had tried to ask for a power-of-two, but had botched the request and thus ended up asking for slightly more than a power-of-two!

- In js/src/vm/String.cpp, when concatenating two strings, the capacity of the resultant string is rounded up to the next power-of-two (unless the string is really big, in which case the rounding is less aggressive). This is a reasonable thing to do, as it means that if a further concatenation occurs, there’s a good chance there’ll be space for the extra chars to be added in-line, avoiding an extra allocation and copy. But the code first did its power-of-two round-up, and then added sizeof(jschar) (which is two bytes) to the size, to allow space for the terminating NULL char. This code was responsible for the 1,048,578 byte request I mentioned earlier. Luke Wagner fixed this bug with a minimal change earlier this week. I was unable to easily measure the overall cost of this, but avoiding 1MB of slop with some frequency can only be a good thing.

- nsprpub/lib/ds/plarena.c implements an arena allocator. Each arena pool is given a size for each arena when it’s created, and the size passed in is almost always a power-of-two such as 1,024 or 4,096. Which is good, except that the allocator then has to add a few more bytes onto the request because each arena has a header that holds book-keeping information. When I start Firefox and load Gmail on my Linux64 box, I see that approximately 3MB of space is wasted because of this bug. The fix is simple: it just requires that the arena payload be reduced by the size of the header, so that header plus payload add up to the requested power-of-two. My patch is awaiting review. Sadly enough, this problem was identified 3.5 years ago but not fixed.

- js/src/jsarena.cpp is almost identical to nsprpub/lib/ds/plarena.c and the story is the same: it has the same problem; it was first identified 3.5 years ago but not fixed; and my patch is awaiting review. This didn’t make much difference in practice because this problem had been separately identified for the particular JSArenaPool that allocates the most memory, and worked around using an ill-documented hack.

- db/sqlite3/src/sqlite3.c is the best one of all. SQLite is very careful about measuring its memory usage and provides an API to access those measurements. But in order to measure its own memory usage accurately, it adds 8 bytes to every allocation request in order to store the requested size at the start of the resulting heap block. In doing so, it converts a lot of 1,024 byte requests into 1,032 byte requests. Ironically enough, the slop caused by storing the requested size rendered the stored size inaccurate. I determined that as a result, SQLite is using roughly 1.15–1.20x more memory in Firefox than it thinks it is. So, go look at the “storage/sqlite” number in about:memory and mentally adjust it accordingly. (On my Mac laptop, which has an old profile and 11 tabs open, it’s currently 70MB, so there’s probably an additional 10–14MB of slop.) SQLite is an upstream product, and the authors are working on a fix.
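All four cases share the same arithmetic mistake: pick a power-of-two, then add a few bytes on top. The Python sketch below models the request sizes only. The function names are hypothetical, the 32-byte `HEADER_SIZE` is an assumed value for illustration, and the “fixed” variants show the general idea rather than the actual patches.

```python
CHAR_SIZE = 2       # sizeof(jschar), two bytes as described above
HEADER_SIZE = 32    # assumed arena header size, for illustration only
SQLITE_PREFIX = 8   # bytes SQLite prepends to store the requested size


def next_pow2(n):
    """Smallest power of two >= n (for n >= 1)."""
    return 1 << (n - 1).bit_length()


def is_pow2(n):
    return n > 0 and n & (n - 1) == 0


def string_request_botched(num_chars):
    # Round the capacity up first, then add room for the terminator.
    return next_pow2(num_chars * CHAR_SIZE) + CHAR_SIZE


def string_request_fixed(num_chars):
    # Count the terminator before rounding, so the total is a power of two.
    return next_pow2((num_chars + 1) * CHAR_SIZE)


def arena_request_botched(arena_size):
    # Header is added on top of the power-of-two arena size.
    return arena_size + HEADER_SIZE


def arena_request_fixed(arena_size):
    # Shrink the payload so that header + payload equals the arena size.
    payload = arena_size - HEADER_SIZE
    return HEADER_SIZE + payload


def sqlite_request(num_bytes):
    # The size prefix pushes a power-of-two request just past the boundary.
    return num_bytes + SQLITE_PREFIX
```

For example, `string_request_botched(500_000)` comes to 1,048,578 bytes while `string_request_fixed(500_000)` comes to 1,048,576, and `sqlite_request(1024)` is 1,032. Under the assumed 32-byte header, `arena_request_botched(1024)` is 1,056.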

Once all these fixes have landed, there will be two benefits. First, Firefox’s memory usage will go down, which is good for everyone. Second, the proportion of dark matter in about:memory will also drop, which makes our memory profiling more accurate and useful.

After that, converting existing reporters to use malloc_usable_size will account for much of the legitimate slop, further reducing the amount of dark matter. And then I have a master plan for identifying whatever’s left.

So what’s up with this post’s title? Andreas Gal uses the term “clownshoes” to refer to code that is laughably bad. When I found and filed the first of these bugs, in a fit of whimsy I put “[clownshoes]” in its whiteboard. Only later did I realize how suitable this was: a clown’s shoes and these botched power-of-two-sized allocations both have lots of empty space in them, and thus make their owner less nimble than they could be. Perfect.