With the holiday, gift-giving season upon us, many people are about to experience the ease and power of new speech-enabled devices. Technical advancements have fueled the growth of speech interfaces through the availability of machine learning tools, resulting in more Internet-connected products that can listen and respond to us than ever before.

At Mozilla we’re excited about the potential of speech recognition. We believe this technology can and will enable a wave of innovative products and services, and that it should be available to everyone.

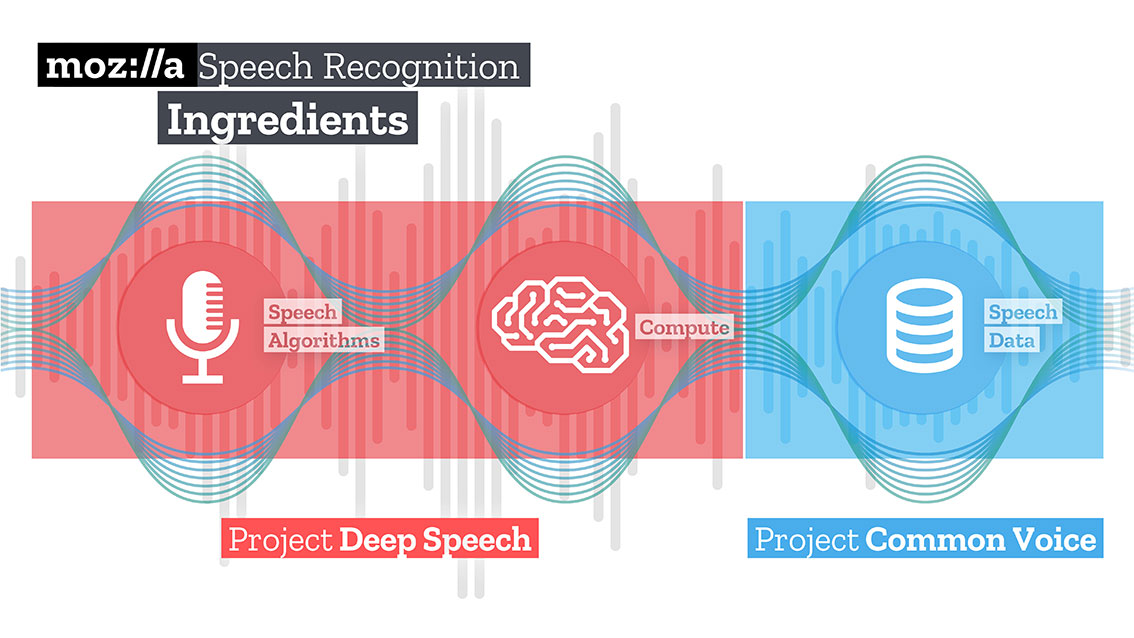

And yet, while this technology is still maturing, we’re seeing significant barriers to innovation that can put people first. These challenges inspired us to launch Project DeepSpeech and Project Common Voice. Today, we have reached two important milestones in these projects for the speech recognition work of our Machine Learning Group at Mozilla.

I’m excited to announce the initial release of Mozilla’s open source speech recognition model that has an accuracy approaching what humans can perceive when listening to the same recordings. We are also releasing the world’s second largest publicly available voice dataset, which was contributed to by nearly 20,000 people globally.

An open source speech-to-text engine approaching user-expected performance

An open source speech-to-text engine approaching user-expected performance

There are only a few commercial quality speech recognition services available, dominated by a small number of large companies. This reduces user choice and available features for startups, researchers or even larger companies that want to speech-enable their products and services.

This is why we started DeepSpeech as an open source project. Together with a community of likeminded developers, companies and researchers, we have applied sophisticated machine learning techniques and a variety of innovations to build a speech-to-text engine that has a word error rate of just 6.5% on LibriSpeech’s test-clean dataset.

In our initial release today, we have included pre-built packages for Python, NodeJS and a command-line binary that developers can use right away to experiment with speech recognition.

Building the world’s most diverse publicly available voice dataset, optimized for training voice technologies

One reason so few services are commercially available is a lack of data. Startups, researchers or anyone else who wants to build voice-enabled technologies need high quality, transcribed voice data on which to train machine learning algorithms. Right now, they can only access fairly limited data sets.

To address this barrier, we launched Project Common Voice this past July. Our aim is to make it easy for people to donate their voices to a publicly available database, and in doing so build a voice dataset that everyone can use to train new voice-enabled applications.

Today, we’ve released the first tranche of donated voices: nearly 400,000 recordings, representing 500 hours of speech. Anyone can download this data.

What’s most important for me is that our work represents the world around us. We’ve seen contributions from more than 20,000 people, reflecting a diversity of voices globally. Too often existing speech recognition services can’t understand people with different accents, and many are better at understanding men than women — this is a result of biases within the data on which they are trained. Our hope is that the number of speakers and their different backgrounds and accents will create a globally representative dataset, resulting in more inclusive technologies.

To this end, while we’ve started with English, we are working hard to ensure that Common Voice will support voice donations in multiple languages beginning in the first half of 2018.

Finally, as we have experienced the challenge of finding publicly available voice datasets, alongside the Common Voice data we have also compiled links to download all the other large voice collections we know about.

Our open development approach

We at Mozilla believe technology should be open and accessible to all, and that includes voice. Our approach to developing this technology is open by design, and we very much welcome more collaborators and contributors who we can work alongside.

As the web expands beyond the 2D page, into the myriad ways where we connect to the Internet through new means like VR, AR, Speech, and languages, we’ll continue our mission to ensure the Internet is a global public resource, open and accessible to all.