Since we added mach try perf, we’ve made quite a few improvements and added several new features. Below is a summary of the most important changes. If mach try perf is new to you, see this article on Improving the Test Selection Experience with Mach Try Perf.

Standard Workflow

The workflow for using mach try perf can be a bit difficult to follow, so we’ve prepared a standard workflow guide to help you make the most of this new tool. The guide can be found here.

Mach try perf --alert

Something we’ve wanted to do for a very long time, but lacked the infrastructure and tooling for, is letting developers run performance tests using only the number (summary ID) of the alert they are working on. I’m excited to say that we now have this functionality in mach try perf with --alert.

This feature was added by a volunteer contributor, MyeongJun Go (Jun). It was a complex task that required changes both in Treeherder and in our mozilla-central code. On the Treeherder side, he added an API call that finds the tasks that produced an alert. He then used this new API call in mach try perf so that it runs all of the tasks it returns. The new feature can be used like so: ./mach try perf --alert <ALERT-SUMMARY-ID>

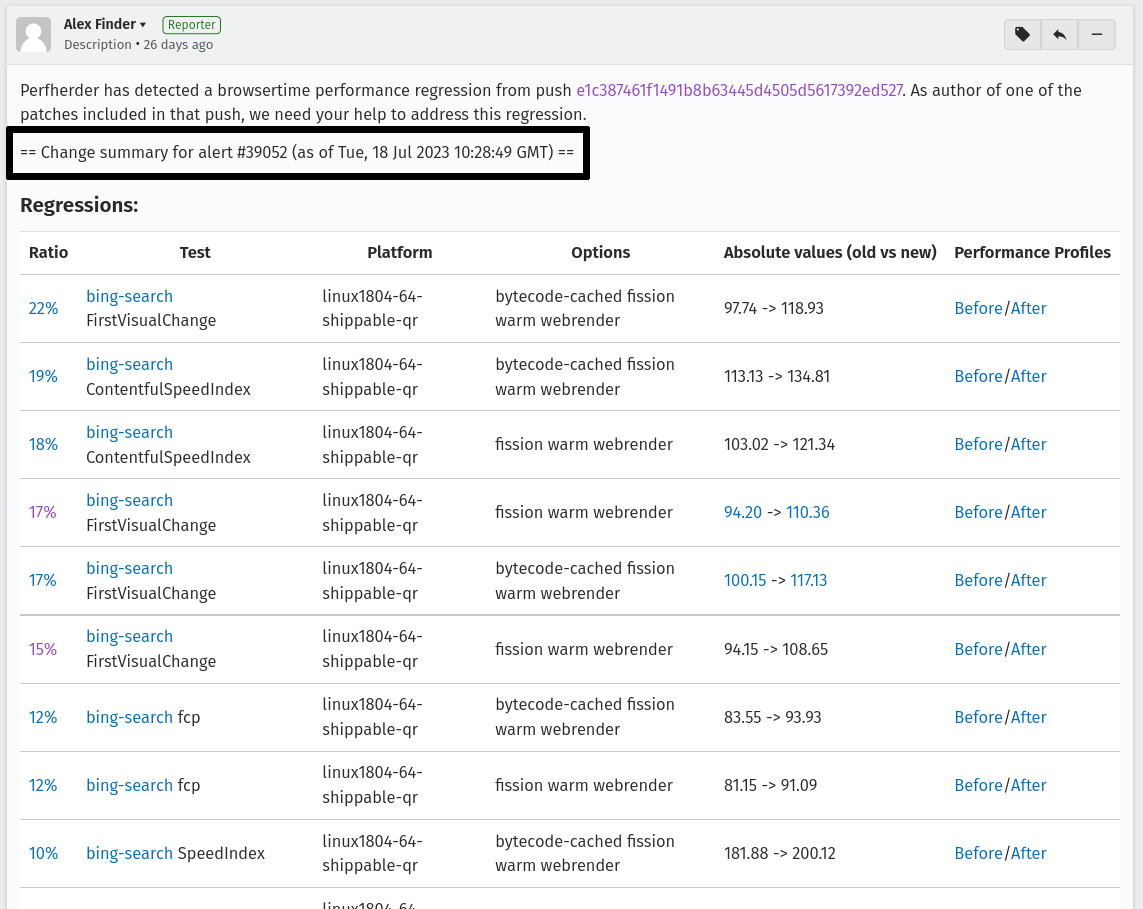

The alert summary ID can be found by looking at the Bugzilla alert comment (in this case it’s 39052):

More information about this feature can be found here. In the future, the alert summary comment on bugs will include instructions on how to do this; see bug 1848885 for updates on this work.
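To make the --alert workflow concrete, here is a minimal Python sketch that pulls the summary ID out of a Perfherder alerts link and builds the matching mach command. It assumes the link looks like https://treeherder.mozilla.org/perfherder/alerts?id=39052 (an assumption for illustration; in practice you can simply copy the ID from the Bugzilla comment). The helper names alert_summary_id_from_url and mach_alert_command are hypothetical and not part of mach.

```python
from urllib.parse import urlparse, parse_qs


def alert_summary_id_from_url(url: str) -> int:
    """Extract the alert summary ID from a Perfherder alerts URL.

    ASSUMPTION: the ID is carried in an ``id`` query parameter, e.g.
    https://treeherder.mozilla.org/perfherder/alerts?id=39052
    """
    query = parse_qs(urlparse(url).query)
    ids = query.get("id")
    if not ids or not ids[0].isdigit():
        raise ValueError(f"no numeric 'id' query parameter in {url!r}")
    return int(ids[0])


def mach_alert_command(summary_id: int) -> list[str]:
    """Build the argv for re-running the tasks behind an alert summary."""
    return ["./mach", "try", "perf", "--alert", str(summary_id)]


if __name__ == "__main__":
    url = "https://treeherder.mozilla.org/perfherder/alerts?id=39052"
    cmd = mach_alert_command(alert_summary_id_from_url(url))
    print(" ".join(cmd))  # ./mach try perf --alert 39052
```

Returning an argv list instead of a single string means the command could be passed directly to subprocess.run from a mozilla-central checkout without any shell quoting.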

Mach try perf --perfcompare-beta

The Performance Tools team is currently revamping our CompareView into a new tool, PerfCompare. PerfCompare will make the tooling easier to extend and will let us add more features that improve the developer experience and efficiency when comparing the performance of different sets of changes. More information on this project can be found in this blog post.

With ./mach try perf --perfcompare-beta, you can test out the beta version of this new interface, and begin providing feedback on it in the Testing :: PerfCompare component.

Comparators

Lastly, for more complex use cases, we have a new feature called “comparators”. These let us customize how the multiple pushes are produced. For instance, we have a custom comparator for the Speedometer 3 benchmark tests that runs one push with one benchmark revision and a second push with another. The following command runs the Speedometer 3 benchmark on two different revisions:

./mach try perf --no-push --comparator BenchmarkComparator --comparator-args new-revision=c19468aa56afb935753bd7150b33d5ed8d11d1e3 base-revision=a9c96c3bd413a329e4bc0d34ce20f267c9983a93 new-repo=https://github.com/WebKit/Speedometer base-repo=https://github.com/WebKit/Speedometer

With this feature, we no longer need to make changes to the mozilla-central code to run experiments with new benchmark changes. More information about this can be found here, and the BenchmarkComparator can be found here. In the future, we’ll use comparators to produce more than two pushes and to enable comparisons across multiple preference settings.
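To illustrate the comparator idea, here is a minimal Python sketch of how key=value pairs like those passed to --comparator-args in the Speedometer 3 command could be turned into a base push and a new push. This is not mach’s actual BenchmarkComparator. The functions parse_comparator_args and benchmark_pushes and the PushSpec class are hypothetical. Only the argument keys (new-revision, base-revision, new-repo, base-repo) come from the command above.

```python
from dataclasses import dataclass


def parse_comparator_args(args: list[str]) -> dict[str, str]:
    """Turn ["key=value", ...] into {"key": "value", ...}.

    Splits on the first "=" only, so values may themselves contain "=".
    """
    parsed: dict[str, str] = {}
    for arg in args:
        key, sep, value = arg.partition("=")
        if not sep or not key:
            raise ValueError(f"expected key=value, got {arg!r}")
        parsed[key] = value
    return parsed


@dataclass
class PushSpec:
    """One try push: which benchmark repo and revision it should run (hypothetical)."""
    label: str
    repo: str
    revision: str


def benchmark_pushes(args: dict[str, str]) -> list[PushSpec]:
    """Hypothetical comparator: produce one base push and one new push."""
    return [
        PushSpec("base", args["base-repo"], args["base-revision"]),
        PushSpec("new", args["new-repo"], args["new-revision"]),
    ]


if __name__ == "__main__":
    cli = [
        "new-revision=c19468aa56afb935753bd7150b33d5ed8d11d1e3",
        "base-revision=a9c96c3bd413a329e4bc0d34ce20f267c9983a93",
        "new-repo=https://github.com/WebKit/Speedometer",
        "base-repo=https://github.com/WebKit/Speedometer",
    ]
    for push in benchmark_pushes(parse_comparator_args(cli)):
        print(push.label, push.repo, push.revision[:12])
```

Keeping argument parsing separate from push generation means a comparator that produces more than two pushes (for example, one per preference setting) would only need a different push-generating function.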

Future Work

In the very near future, descriptions of the various categories will be added to mach try perf and displayed under the selected tasks; Jun is working on this (see bug 1826190). We’d also like to make mach try perf compatible with --push-to-lando, which it currently doesn’t support because of the remote revision requirement; see bug 1836069 for this.

For any questions, comments, etc. you can find us in the #perftest channel on Element.
