Our visual metrics processing system used to rely on two separate machines to produce visual metrics from our pageload tests. In this post, I’ll describe how we moved to a single-machine system, a change that also brought us many other benefits.

Note: If you’ve never heard of visual metrics before, they can be summed up as performance metrics processed from a video recording of a pageload. You can find more information about them in the article Improving Firefox Page Load, by Bas Schouten.

Over the last little while, we’ve been busy moving from a Web Extension-based performance testing system to a Browsertime-based one. I’ve briefly detailed this effort in my post on Migrating Raptor to Browsertime. Originally, our visual metrics processing system in Continuous Integration (CI) used two machines to produce the visual metrics, one for running the test, and the other for processing the video recordings to obtain the visual metrics. This made sense at the time as the effort required to try to run the visual metrics script on the same machine as the task was far too large at the beginning. There were also concerns around speed of execution on the machines, and the installation of dependencies that were not very portable across the various platforms we test.

However, while migrating to Browsertime, it quickly became clear that this system was not going to work. In CI, this system was not built for the existing workflow and we had to implement multiple special cases to handle Browsertime tests differently. Our test visualization and our test retriggering capabilities needed the most work. In the end, those hacks didn’t help much because developers couldn’t easily find the test results to begin with! Local testing wasn’t much better either: dependencies were difficult to install and handle across multiple platforms and Python versions, retriggering/rebuilding tasks on Try was no longer possible with --rebuild X, and, in general, running visual metrics was very error-prone.

This was an interesting problem and one that we needed to resolve so developers could easily run visual metrics locally and in CI with a simple flag, without additional steps, and in a way that was as similar as possible to the rest of our test harnesses. There were multiple solutions that we thought of trying first, such as building a microservice to process the results, or using a different script to process visual metrics. But each of those came with more issues than we were trying to solve. For instance, the microservice would remove the need for two machines in CI, but then we would have another service outside of Taskcluster that we would need to maintain, and this wouldn’t resolve the issues with using visual metrics locally.

After looking over the issues and the script again (linked here), I realized that our main issue with using this script in CI and locally was the ImageMagick dependency. It was not a very portable piece of software, and it frequently caused issues when installing. The other non-Python dependency was FFMPEG, but this was easy to install on all of our platforms, and we were already doing this automatically. Installing the Python dependencies was also never an issue. This meant that if we removed the ImageMagick dependency from the script, we could use it on the same machine in CI, and eliminate the friction of using visual metrics locally. The small risk with doing this was that the script would run a bit slower, as we would have more Python-based computations, which can be slow compared to other languages. That said, the benefits outweighed the risks here so I moved forward with this solution.

I began by categorizing all the usages of ImageMagick in the script, and then converted all of them, one by one, into a pure-Python implementation. I used my own past experience with image processing to do this, and I also had to look through the ImageMagick documentation and source code to determine how each algorithm was built so I could end up with the same results before and after this rework. This was very important because we didn’t want to rework the script only to receive possibly faulty metrics. At the same time, I even managed to fix an outstanding bug in the hero element calculations by removing the alpha channel that made the edges of the hero element blurry. This also revealed an issue in the hero element size calculations (sizes of 0 were being obtained), which are produced elsewhere.
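To give a feel for what a pure-Python replacement of a small image operation can look like, here is a minimal sketch of discarding an alpha channel. It assumes the frame is available as tightly packed RGBA bytes; the function name `drop_alpha` and the sample pixels are hypothetical, and the actual script may well handle this step differently.

```python
def drop_alpha(rgba, width, height):
    """Return packed RGB bytes from packed RGBA bytes, discarding alpha.

    Illustrative only: the name and the packed-bytes input are assumptions.
    """
    expected = width * height * 4
    if len(rgba) != expected:
        raise ValueError(f"expected {expected} bytes, got {len(rgba)}")
    rgb = bytearray(width * height * 3)
    # Copy each colour channel with a strided slice; alpha (offset 3) is skipped.
    rgb[0::3] = rgba[0::4]  # red
    rgb[1::3] = rgba[1::4]  # green
    rgb[2::3] = rgba[2::4]  # blue
    return bytes(rgb)


# A 2x1 image: one opaque red pixel and one half-transparent blue pixel.
pixels = bytes([255, 0, 0, 255, 0, 0, 255, 128])
rgb_pixels = drop_alpha(pixels, 2, 1)
print(list(rgb_pixels))
```

Once the alpha values are gone, the half-transparent pixel is treated as fully blue, so partial transparency can no longer soften an edge.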

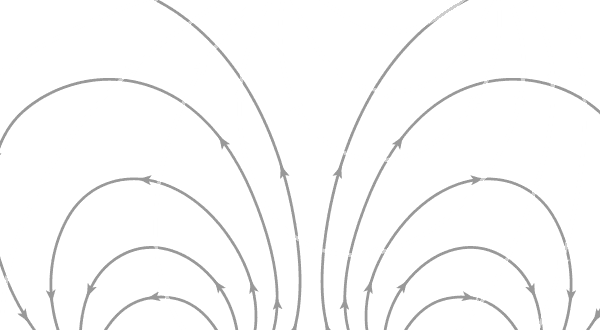

Once I finished with the rework, I was immediately able to use it locally and in CI. The next phase of the rework involved ensuring that the metrics before and after the change were exactly the same in all cases. In all cases except for Contentful Speed Index (CSI), the metrics matched perfectly. For CSI, the mismatch came from having a different underlying algorithm for Canny edge detection, with different parameters from what we used to use. I worked with Bas Schouten and the performance team to resolve this issue by finding parameters that best matched what we expected from the edge detection, and provided similar but not exact matches in terms of metrics. We agreed upon some parameters, ran some final tests, and then confirmed that this is what we want from CSI. In other words, while I was reworking this metric, we also managed to slightly improve its accuracy. In the image below, note how the edges that we used to use (in green) were very noisy and had sporadic pixels defined as edges, while the new method (in red) catches the content much better without so much variance in the edges produced. The old method also has a slight shift in the edges because the Gaussian smoothing kernel used was asymmetric.

Red: Edges from the new edge detection method. Green: Edges from the old edge detection method. Left: New method using a sigma of 0.7, right: new method using a sigma of 1.5. In both cases, new method edges are overlaid onto the old edges in Green (same on both sides).
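The point about the asymmetric smoothing kernel can be illustrated with a small sketch. This is not the Canny implementation used by the script, only the Gaussian smoothing stage in one dimension. The sigma values 0.7 and 1.5 come from the caption above; the `truncate` cutoff, the function names, and the step signal are assumptions made for illustration.

```python
import math


def gaussian_kernel(sigma, truncate=4.0):
    """Build a normalized, odd-length 1-D Gaussian kernel centred on zero."""
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    radius = max(1, int(truncate * sigma + 0.5))
    weights = [
        math.exp(-(x * x) / (2.0 * sigma * sigma))
        for x in range(-radius, radius + 1)
    ]
    total = sum(weights)
    return [w / total for w in weights]


def smooth(signal, kernel):
    """Apply the kernel to a 1-D signal, clamping indices at the borders."""
    radius = len(kernel) // 2
    last = len(signal) - 1
    out = []
    for i in range(len(signal)):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = min(max(i + k - radius, 0), last)
            acc += w * signal[j]
        out.append(acc)
    return out


# A step edge that sits between index 9 and index 10.
step = [0.0] * 10 + [1.0] * 10

for sigma in (0.7, 1.5):
    kernel = gaussian_kernel(sigma)
    blurred = smooth(step, kernel)
    # With a symmetric kernel the blurred step stays centred on the original
    # edge, so the two samples around it are mirror images of each other.
    print(sigma, len(kernel), kernel == kernel[::-1], blurred[9] + blurred[10])
```

Because the kernel is mirrored around its centre, the blurred edge stays where the original edge was. A kernel whose weights are not mirrored pulls the blurred step to one side, which is one way a detector can end up with shifted edges.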

Throughout this work, Peter Hedenskog was also very helpful with testing the changes and notifying me about bugs he found, as he’s also interested in using this script in Browsertime (thank you!). Once we were all done with testing, and the metrics either matched perfectly or had minor acceptable differences, we were able to finalize a series of patches and land them so that developers could enjoy using our tools with much less friction. Thank you to Kash Shampur for reviewing all the patches! Today, developers are able to retrigger tasks without issues or special cases, can easily find the results of a test in CI, and can easily use visual metrics locally without having to install extra dependencies by themselves. The risk of slower computation turned out to be a non-issue, as the script runs just as quickly. This change also almost halved the number of tasks we have defined for Browsertime tests in CI, as we no longer duplicate them for visual metrics, which, in theory, should make the Gecko Decision task slightly faster. Overall, reworking this nearly legacy piece of code that is critical to modern performance testing was a great success! You can find the new visual metrics processing script here if you are interested.
