After over twenty years, Mozilla is still going strong. But over that much time, there are bound to be changes in responsibilities. This brings unique challenges for test maintenance: when the original creators leave, knowledge of a test’s purpose and inner workings can disappear with them. This is especially true when it comes to performance testing.

Our first performance testing framework is Talos, which was built in 2007. It’s a fantastic tool that is still used today for performance testing very specific aspects of Firefox. We currently have 45 different performance tests in Talos, and together they produce as many as 462 metrics. Having said that, maintaining the tests themselves is a challenge because some of the people who originally built them are no longer around. In practice, the last person who touched a test’s code, and who is still around, usually becomes its maintainer. But without documentation for the tests themselves, this is a difficult task: a modification could change what is being measured and move the test away from its original purpose.

Over time, we’ve built another performance testing framework called Raptor, which is primarily used for page load testing (e.g. measuring first paint and first contentful paint). This framework is much simpler to maintain and to keep aligned with its purpose, but the settings used for the tests change often enough that it becomes easy to forget how we set up a test, or exactly which pages are being tested. We have a couple of other frameworks too, the newest of which (still in development) is MozPerftest; there might be a blog post on this in the future. With this many frameworks and tests, it’s easy to see how test maintenance over the long term can turn into a bit of a mess when it’s left unchecked.

To overcome this issue, we decided to implement a tool that dynamically documents all of our existing performance tests in a single interface, and that prevents new tests from being added without proper documentation or, at the least, an acknowledgement of the existence of the test. We called this tool PerfDocs.

Currently, we use PerfDocs to document tests in Raptor and MozPerftest (with Talos planned for the future). At the moment in Raptor, we only document the tests that we have, along with the pages that are being tested. Given that Raptor is a simple framework whose main purpose is to measure page loads, this documentation gives us enough without getting overly complex. However, we do plan to add much more information to it in the future (e.g. what branches the tests run on, and what the test settings are).

The PerfDocs integration with MozPerftest is far more interesting though, and you can find it here. In MozPerftest, every test is required to carry metadata in the test itself. For example, here’s a test we have for measuring the start-up time on our mobile browsers, which describes things such as the browsers that it runs on, and even the owner of the test. This lets us force the test writer to think about maintainability as we move into the future rather than simply writing it and forgetting it. For that Android VIEW test, you can find the generated documentation here. If you look through the documented tests that we have, you’ll notice that we don’t list a single person as a maintainer. Instead, we refer to the team that built the test as the maintainer. Furthermore, the tests actually live in the folders (or code) that those teams are responsible for, so they don’t need to exist in the frameworks folder, which gives us more accountability for their maintenance. By building tests this way, with documentation in mind, we can ensure that as time goes on, we won’t lose information about what is being tested, its purpose, and who should be responsible for maintaining it.

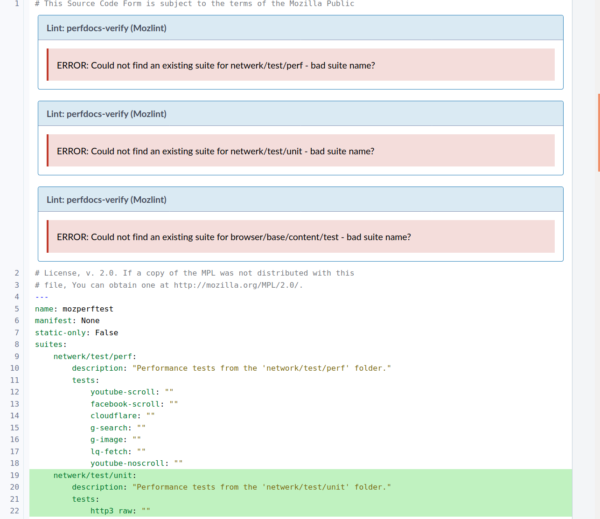
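To make the metadata requirement concrete, here is a minimal Python sketch of the kind of check it implies. The field names (`owner`, `name`, `description`) and the function `missing_metadata` are assumptions made for illustration, not the actual MozPerftest schema or API.

```python
# Hypothetical required fields; the real MozPerftest metadata schema may differ.
REQUIRED_FIELDS = ("owner", "name", "description")


def missing_metadata(metadata: dict) -> list[str]:
    """Return the required fields that are absent or blank in a test's metadata."""
    missing = []
    for field in REQUIRED_FIELDS:
        value = metadata.get(field)
        # Treat a missing key, a non-string value, or a blank string as missing.
        if not isinstance(value, str) or not value.strip():
            missing.append(field)
    return missing


# Example: a test that names its owning team but has no description.
example = {"owner": "Performance Team", "name": "android-view-startup"}
print(missing_metadata(example))
```

A test whose metadata returns a non-empty list here would be rejected until the writer fills in the gaps, which is the point at which they are pushed to think about ownership and purpose.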

Lastly, as I alluded to above, outside of generating documentation dynamically, we also ensure that any new tests are properly documented before they are added into mozilla-central. This is done for both Raptor and MozPerftest through a tool called review-bot, which runs tests on submitted patches in Phabricator (the code review tool that we use). When a patch is submitted, PerfDocs will run to make sure that (1) all the tests that were documented actually exist, and (2) all the tests which exist are actually documented. This way, we can prevent our documentation from becoming outdated with tests that don’t exist anymore, and we can ensure that all tests are always documented in some way.

The review-bot left a complaint on this patch, which was adding new suites, because PerfDocs could not find the actual tests.
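The two-way check described above comes down to comparing two sets of test names. The following is a rough Python sketch under that assumption; `check_docs` and the suite names are hypothetical and are not taken from the PerfDocs source.

```python
def check_docs(documented: set[str], existing: set[str]) -> list[str]:
    """Compare documented test names against the tests found in the tree."""
    errors = []
    # (1) Every documented test must actually exist.
    for name in sorted(documented - existing):
        errors.append(f"documented test does not exist: {name}")
    # (2) Every existing test must be documented.
    for name in sorted(existing - documented):
        errors.append(f"test is not documented: {name}")
    return errors


# Hypothetical suite names, for illustration only.
documented = {"suite-a", "suite-removed"}
existing = {"suite-a", "suite-new"}
for error in check_docs(documented, existing):
    print(error)
```

An empty result means the documentation and the tests agree; anything else is the kind of mismatch that review-bot would report on the patch.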

In the future, we hope to be able to expand this tool and its features from our performance tests to the massive box of functional tests that we have. If you think having 462 metrics to track is a lot, consider the thousands of tests we have for ensuring that Firefox functionality is properly tested.

This project started in Q4 of 2019, with Alexandru Ionescu [:alexandrui] and me [:sparky] building up the base of this tool. Then, in early H1-2020, Myeongjun Go [:myeongjun], a fantastic volunteer contributor, began hacking on this project and brought us from lightly documenting Raptor tests to having links to the tested pages in it, and even integrating PerfDocs into MozPerftest.

If you have any questions, feel free to reach out to us on Riot in #perftest.
