In October there were 202 alerts generated, resulting in 25 regression bugs being filed on average 4.4 days after the regressing change landed.

Welcome to the second edition of the new format for the performance sheriffing newsletter! In last month’s newsletter I shared details of our sheriffing efficiency metrics. If you’re interested in the latest results for these you can find them summarised below, or (if you have access) you can view them in detail on our full dashboard. As sheriffing efficiency is so important to the prevention of shipping performance regressions to users, I will include these metrics in each month’s newsletter.

Sheriffing efficiency

- All alerts were triaged in an average of 1.7 days

- 75% of alerts were triaged within 3 days

- Valid regression alerts were associated with a bug in an average of 2 days

- 95% of valid regression alerts were associated with a bug within 5 days

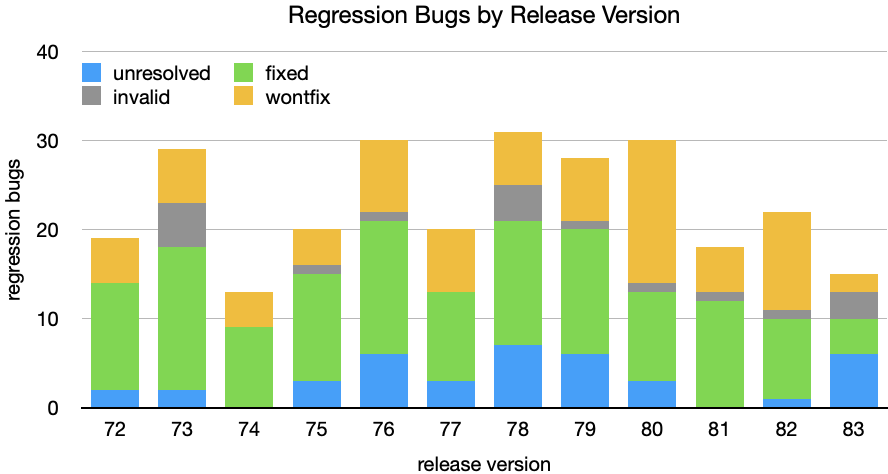
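For readers curious how figures like these might be derived, here is a minimal sketch. It assumes each alert is available as a pair of timestamps (when it was created and when it was triaged). The function name `triage_stats`, the sample data, and the data shape are all hypothetical, and this is not the query behind the dashboard.

```python
from datetime import datetime
from statistics import mean

SECONDS_PER_DAY = 86400

def triage_stats(alerts, threshold_days=3):
    """Return (average days to triage, percent triaged within threshold).

    `alerts` is an iterable of (created, triaged) datetime pairs.
    This shape is an assumption made for illustration only.
    """
    delays = [
        (triaged - created).total_seconds() / SECONDS_PER_DAY
        for created, triaged in alerts
    ]
    within = sum(1 for d in delays if d <= threshold_days)
    return mean(delays), 100 * within / len(delays)

# Made-up sample data, not real alerts.
sample = [
    (datetime(2020, 10, 1), datetime(2020, 10, 2)),  # triaged after 1 day
    (datetime(2020, 10, 1), datetime(2020, 10, 3)),  # triaged after 2 days
    (datetime(2020, 10, 5), datetime(2020, 10, 6)),  # triaged after 1 day
    (datetime(2020, 10, 5), datetime(2020, 10, 9)),  # triaged after 4 days
]

avg_days, pct_within = triage_stats(sample)
print(f"average: {avg_days:.1f} days, within 3 days: {pct_within:.0f}%")
```

The same function could be applied to the "associated with a bug" metrics by passing the bug-filing time instead of the triage time and a threshold of 5 days.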

Regressions by release

For this edition I’m going to focus on a relatively new metric that we’ve been tracking, which is regressions by release. This metric shows valid regressions by the version of Firefox that they were first identified in, grouped by status. It’s important to note that we are running these performance tests against our Nightly builds, which is where we land new and experimental changes, and the results cannot be compared with our release builds.

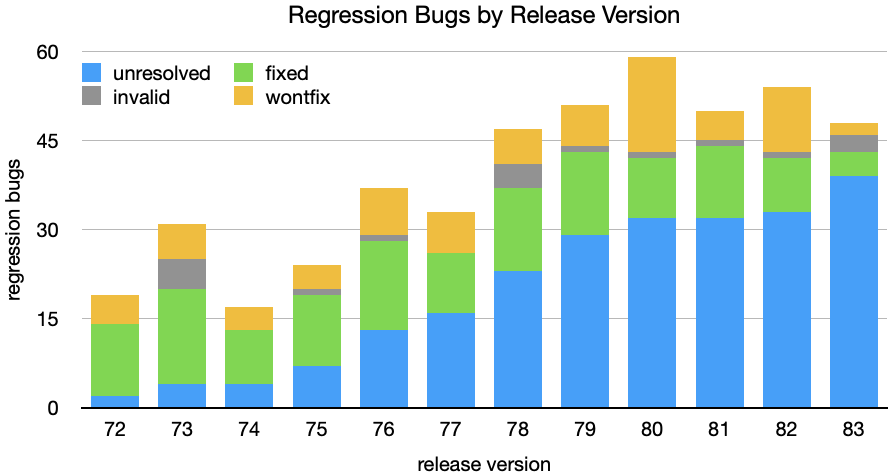

In the above chart I have excluded bugs resolved as duplicates, as well as results prior to release 72 and later than 83 as these datasets are incomplete. You’ll notice that the more recent releases have unresolved bugs, which is to be expected if the investigations are ongoing. What’s concerning is that there are many regression bugs for earlier releases that remain unresolved. The following chart highlights this by carrying unresolved regressions into the following release versions.

When performance sheriffs open regression bugs, they do their best to identify the commit that caused the regression and open a needinfo for the author. The affected Firefox version is indicated (this is how we’re able to gather these metrics), and appropriate keywords are added to the bug so that it shows up in the performance triage and release management workflows. Whilst the sheriffs do attempt to follow up on regression bugs, it’s clear that there are situations where the bug makes slow progress. We’re already thinking about how we can improve our procedures and policies around performance regressions, but in the meantime please consider taking a look over the open bugs listed below to see if any can be nudged back into life.

- 2 open regression bugs for Firefox 72

- 2 open regression bugs for Firefox 73

- 3 open regression bugs for Firefox 75

- 6 open regression bugs for Firefox 76

- 3 open regression bugs for Firefox 77

- 7 open regression bugs for Firefox 78

- 6 open regression bugs for Firefox 79

- 3 open regression bugs for Firefox 80
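To illustrate the idea of carrying unresolved regressions into later releases, the sketch below computes a running total from the counts in the list above. It reflects one possible reading of that chart (a simple cumulative sum of bugs that are open today), not necessarily how the dashboard computes it. The function name `carry_forward` is hypothetical, and versions missing from the list (such as 74) are assumed to have no open bugs.

```python
from itertools import accumulate

# Open regression bugs per Firefox version, copied from the list above.
open_bugs = {72: 2, 73: 2, 75: 3, 76: 6, 77: 3, 78: 7, 79: 6, 80: 3}

def carry_forward(open_by_version, first, last):
    """Cumulative count of open bugs affecting each release.

    A bug first identified in version N and still open is counted
    against N and every later version up to `last`.
    """
    versions = range(first, last + 1)
    # Versions with no entry are assumed to have zero open bugs.
    counts = [open_by_version.get(v, 0) for v in versions]
    return dict(zip(versions, accumulate(counts)))

carried = carry_forward(open_bugs, 72, 80)
for version, total in carried.items():
    print(f"Firefox {version}: {total} open regressions carried")
```

A cumulative view like this makes long-lived bugs more visible, because an unresolved regression keeps contributing to every later release until it is closed.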

Summary of alerts

Each month I’ll highlight the regressions and improvements found.

- 😍 19 bugs were associated with improvements

- 🤐 3 regressions were accepted

- 🤩 6 regressions were fixed (or backed out)

- 🤥 3 regressions were invalid

- 🤗 1 regression is assigned

- 😨 12 regressions are still open

Note that whilst I usually allow one week to pass before generating the report, there are still alerts under investigation for the period covered in this article. This means that whilst I believe these metrics to be accurate at the time of writing, some of them may change over time.

I would love to hear your feedback on this article, the queries, the dashboard, or anything else related to performance sheriffing or performance testing. You can comment here, or find the team on Matrix in #perftest or #perfsheriffs.

The dashboard for October can be found here (for those with access).
