Introduction

I have been a member of the performance test team for the past two and a half years. During that time, the performance team has had many discussions about improving the developer experience when running performance tests.

The most significant pain points for developers are that:

1) tests are difficult to run, or developers don’t know which tests to run, and

2) our tools for comparing the results are complex or confusing.

One of the tools we currently use is called Perfherder. Perfherder does many things, such as alerting on performance improvements and regressions, comparing results of performance tests, and graphing those results.

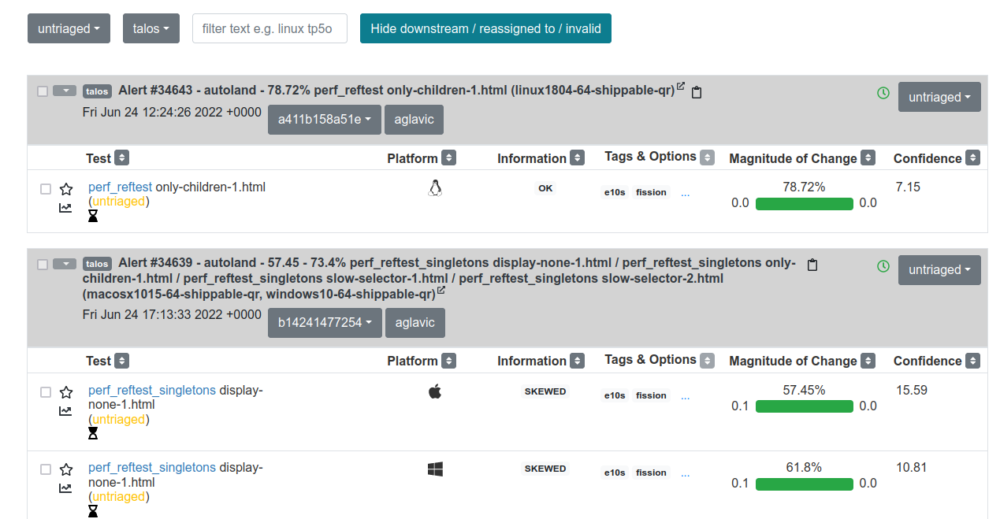

Perfherder Alerts View

Our performance sheriffs use Perfherder Alerts View to investigate possible improvements or regressions. They first determine if the alert is valid and then identify the culprit revision that caused a regression or improvement.

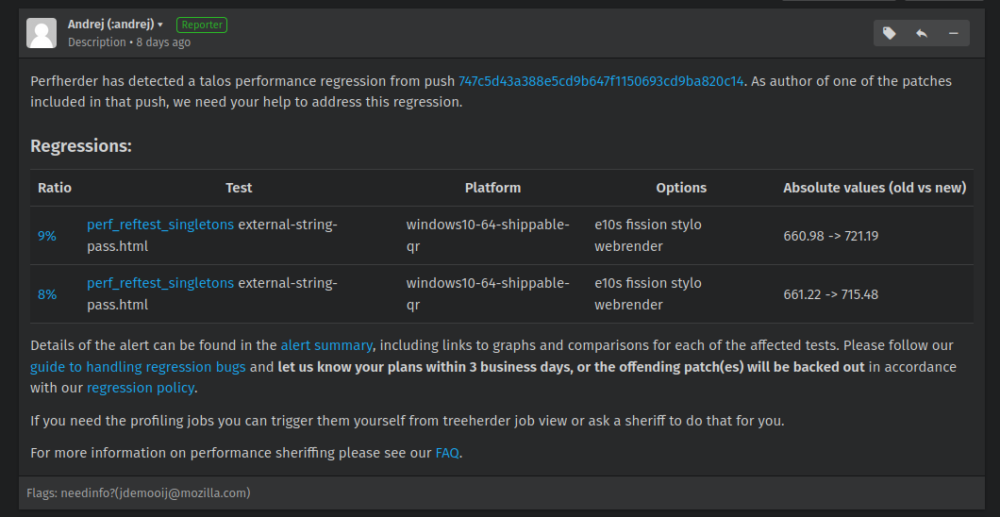

The sheriff then files a bug in Bugzilla. If the alert is a regression, a :needinfo flag is set for the author of the culprit commit.

Example of a performance regression bug

The user workflows for the Compare View and Graphs View usually follow one of two scenarios:

Scenario A: An engineer is tagged in a regression (or improvement) by a performance sheriff, as described above. The engineer then needs to determine why the patch caused a regression, which can take many iterations of altering code, running tests, and comparing the results.

or

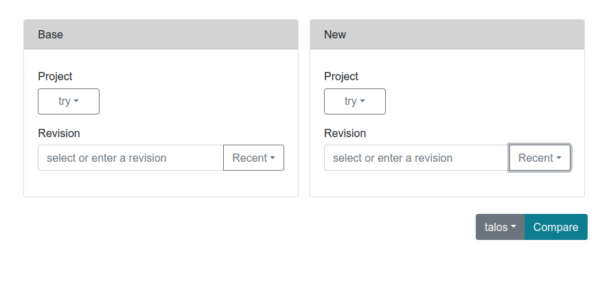

Scenario B: A Firefox engineer working on a patch runs performance tests to ensure the latest changes have not caused a performance regression. Once the tests are complete, the engineer compares the results from before and after the changes.

The proactive approach of Scenario B is highly preferable to the reactive approach of Scenario A.

A primary goal of the performance test team is to simplify and improve the experience of running performance tests and comparing the results.

If we succeed in this, I believe we can improve the performance of Firefox as a whole.

By providing better tools and workflows, we will empower our engineers to include performance testing as a part of their standard development process.

Perfherder User Experience

Perfherder Compare View – Search for Revision

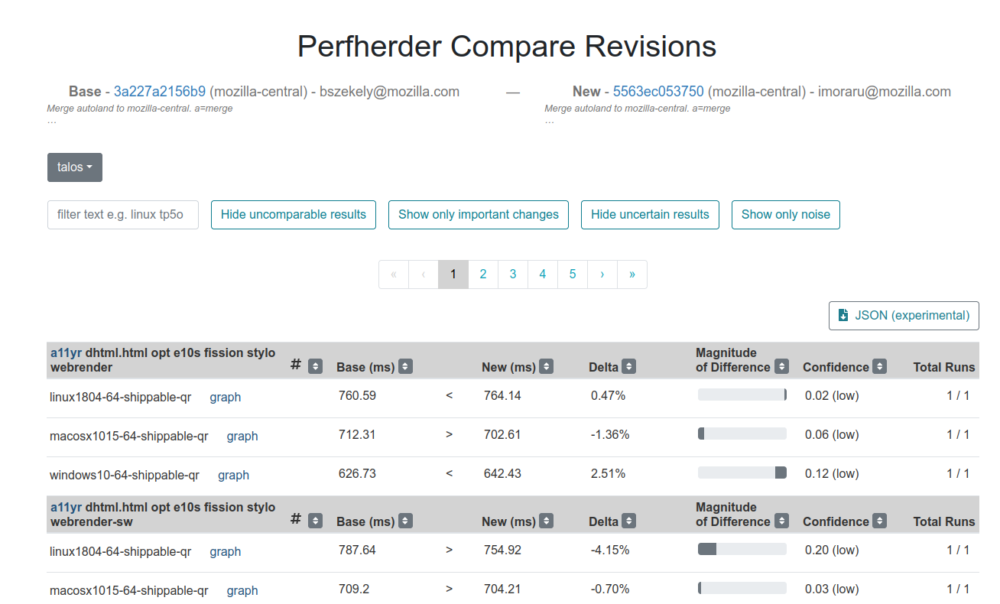

Perfherder Compare View – Comparison Results

We have conducted usability testing and user research to identify developer pain points for Perfherder and attempted to address some of these issues.

However, some of these changes made the UI even more cluttered. Others involved adding a User Guide or more documentation to explain how to use the tools, which is a problematic approach.

If we need to write more documentation to explain how to use a tool that should be reasonably straightforward, it suggests that there are fundamental weaknesses in our UX design.

Perfherder was initially developed for a particular use case and has been expanded to include more features and test harnesses over the years.

While it has served its purpose, the time has come to develop a new comparison tool to support the wide range of features that we have added to Perfherder–and some new ones too!

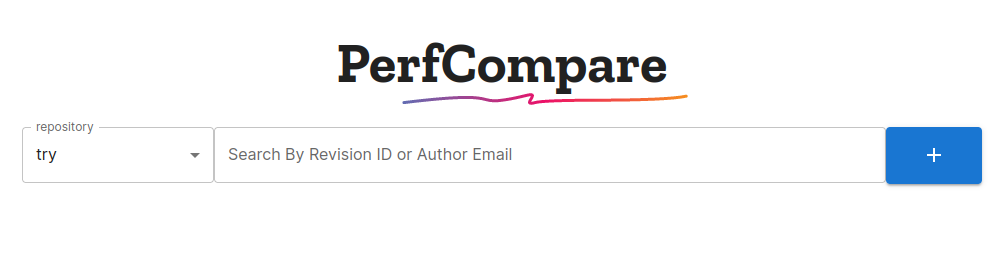

Introducing PerfCompare

PerfCompare is a performance comparison tool that aims to replace Perfherder Compare View. This is a task I have wanted to undertake for quite some time, but we had a smaller team and lacked the resources to justify a project of this size.

Although I am an engineer, I am also an artist. I studied art for years, attending a residential arts school during my junior year and (very briefly) pursuing a double major in Computer Engineering and Art.

As a career, however, I knew that software engineering was what I wanted to pursue, while art remained an enjoyable pastime.

As such, I am very opinionated on matters of aesthetics and design. My goal is to create a tool that is easy to use–but also enjoyable to use!

I want to create something to delight engineers and make them want to run performance tests.

I approached this project with a blank page–literally! When creating initial designs, I sat in front of a white sheet of paper with a black pen. In my mind, I asked, “What does the user want to do?”

I broke this down into the simplest possible actions, step by step. I wanted to remove anything unnecessary or confusing and create a clean, aesthetically pleasing UI.

Since then, three other engineers have joined the project. Together we have been working to create an MVP to announce and demo at the All Hands in September.

We aim to have a product ready for initial user testing and to enroll it in Mozilla’s foxfooding program.

Below are some highlights of the improvements and features we have implemented so far, although some of them are still under development.

Highlights

- The list of repositories to select from includes only the most commonly used ones, rather than an exhaustive list of all repositories.

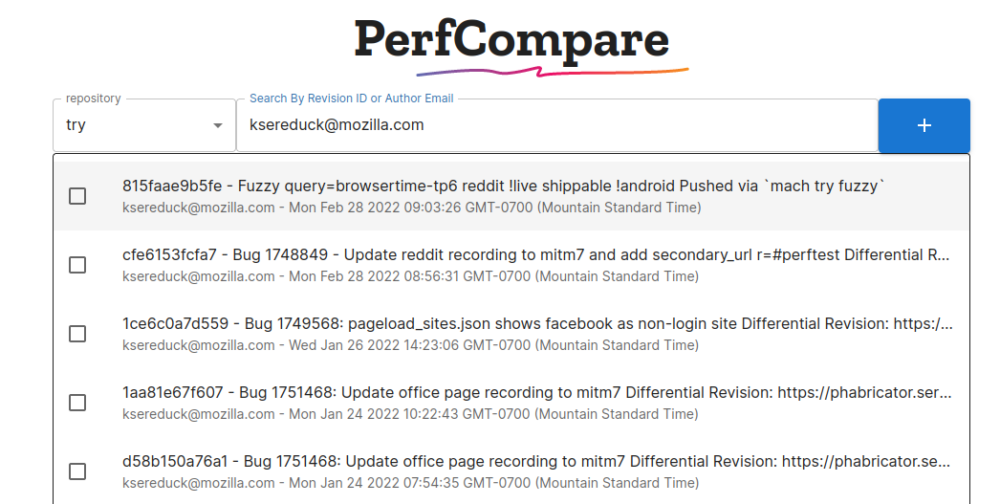

- Users can search by short hash, long hash, or author email, instead of long hash only.

Search for revision by author email

- When searching for revisions, the results include not just a revision hash and author email, but also the commit message and timestamp, making it easier to differentiate between them.

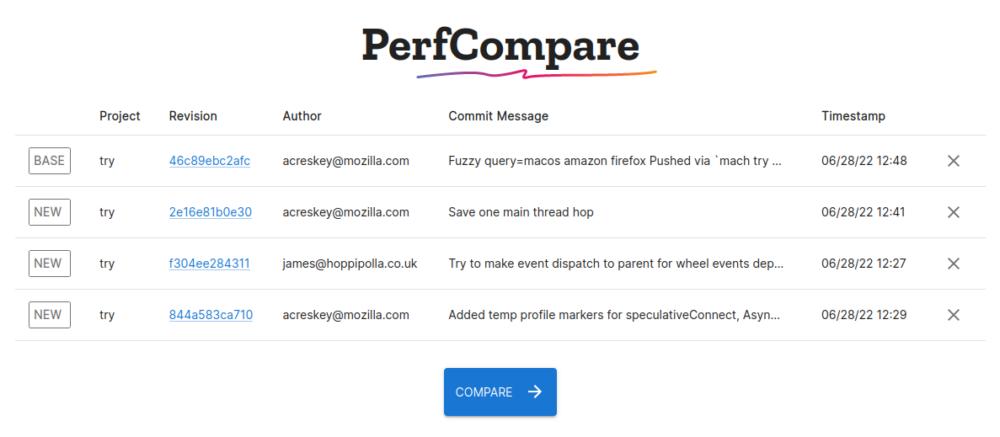

- Users can select up to four revisions to compare instead of just two.

Select four revisions to compare

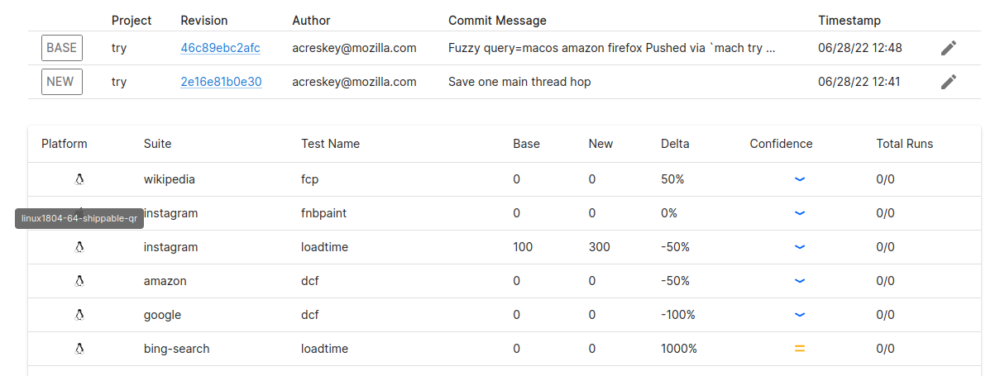

- We are using icons to represent platform and confidence, with a tooltip that displays more information.

- Easier-to-read test names.

- We have removed magnitude of change and repeated header rows for a cleaner UI.

- Users can edit selected revisions directly from the Results View instead of navigating back to Search View.

Example of results view using mock data, showing the platform tooltip
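The revision search highlight above accepts three kinds of input. Purely as an illustration, here is a minimal TypeScript sketch of how a search box might decide which kind of query a user typed before looking it up. The function name `classifyRevisionQuery` and the matching rules (12 hex characters for a short hash, 40 for a long hash, a loose `name@domain.tld` pattern for an author email) are hypothetical assumptions for this example and are not taken from the PerfCompare codebase.

```typescript
// Hypothetical sketch: decide which kind of revision search the user typed.
// Assumptions (not PerfCompare's actual rules):
//   - a short hash is exactly 12 hex characters (Mercurial's default short form)
//   - a long hash is exactly 40 hex characters
//   - anything shaped like name@domain.tld is treated as an author email
type RevisionQueryKind = "shortHash" | "longHash" | "authorEmail" | "unknown";

function classifyRevisionQuery(rawInput: string): RevisionQueryKind {
  // Ignore stray whitespace from copy-pasting a hash or email.
  const query = rawInput.trim();

  // Full 40-character changeset hash, case-insensitive.
  if (/^[0-9a-f]{40}$/i.test(query)) {
    return "longHash";
  }

  // Abbreviated 12-character changeset hash, case-insensitive.
  if (/^[0-9a-f]{12}$/i.test(query)) {
    return "shortHash";
  }

  // Deliberately loose email check: no spaces, one "@", a dot in the domain.
  if (/^[^\s@]+@[^\s@]+\.[^\s@]+$/.test(query)) {
    return "authorEmail";
  }

  return "unknown";
}
```

With these assumed rules, `classifyRevisionQuery("  a1b2c3d4e5f6 ")` is treated as a short hash and `"dev@example.com"` as an author email; anything else falls through to `"unknown"`, where a real UI could show a hint instead of running a search.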

While we still have much to do before PerfCompare is ready for user testing, we have made significant progress and look forward to demonstrating our tool in Hawaii!

If you have any feedback or suggestions, you can find us in the #PerfCompare Matrix channel or join our #PerfCompare User Research channel!

Thank you to everyone who has contributed to our user research so far!

Resources

- Perfherder Compare View

- Perfherder Compare – example comparison

- PerfCompare GitHub repository

- #PerfCompare Matrix channel

- #PerfCompare User Research channel

- Bug 1754831 – [meta] feature requests for improved performance comparison tool

- Bug 1750983 – [meta] developer pain points in performance testing
