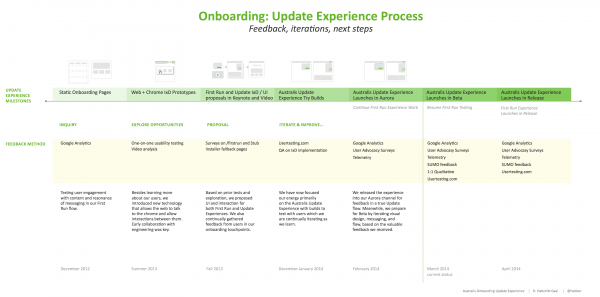

This post focuses on process and accompanies “Introducing the update experience for Australis.” – Zhenshuo Fang and Holly Habstritt Gaal, Mozilla UX.

We’re really excited to release a new onboarding flow for users updating to the new Firefox (Australis). Throughout this project we’ve learned a lot about design process, and that learning helped us make decisions and turn our assumptions into something tangible and valuable for our users. We started with high-level thinking about how to educate users and are now focused on updating current users to the new Firefox. Our process started with a few assumptions but evolved to fit a project that didn’t exist when we started. This post walks through a few questions we asked along the way.

1. Are my assumptions right?

Assumptions need to be explored and tested before offering solutions. In the early stages of our work, “onboarding” was not yet a project on the roadmap and was not tied to the launch of Australis in Firefox 29. We felt strongly that improving the onboarding experience would help adoption of the new browser, and sentiment reports told us that our users want better onboarding. To demonstrate the value of onboarding, we first needed to test the assumptions we held about browser usage and onboarding in general. Initially only a few of us were exploring these ideas, so testing and learning early let us rule out weak assumptions quickly and work efficiently.

One assumption we had was that users in a First Run or Update experience are likely to ignore what appears to be a typical web page, since they are focused on a task like checking email when they open their browser. The challenge is to avoid blocking users from completing that task while still creating an experience that is both valuable and memorable. We also had assumptions about language and tone, about how feature education could lead to more usage hours, and many others.

Tools we have to help

- Usertesting.com, surveys, and one-on-one observation: By gathering qualitative data (feedback directly from our users), we are able to quickly validate or rule out early assumptions before investing too much in them.
- Google Analytics: We ran very early web experiments to test copy, UI styles, and general engagement with our content, and we will continue to use GA after release.
- Telemetry and Firefox Health Report: These tell us about user behavior in the browser itself. See Blake Winton’s post about Measuring Australis to learn more.

2. What should I prototype?

Prototyping is useful, but knowing what to prototype and doing it efficiently matters just as much. Testing first with existing products, Keynote mockups, and static pages helped us decide what to prototype further with engineers. The key to learning more about our assumptions and proposing possible solutions was working with engineers early in the process. For example, it meant we understood the technical possibilities and limits while debating early UI and interaction ideas.

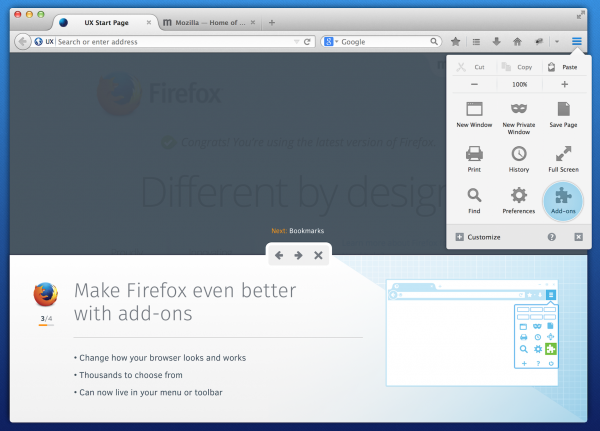

An early premise was that watching a video or looking at a screenshot is not as memorable or clear as showing users what is happening directly in the browser. We knew that the best experience shouldn’t just tell users about something new but should result in a behavioral change and a new way of thinking. Exploring this idea with a rough prototype is what led to the foundation of the experience: technology that allows web content and the browser chrome to interact with one another. This means we don’t have to rely on passive viewing of a web page to create a memorable experience. Check out this video from Michael Verdi to see what I mean.

* The video reflects our progress and thinking as of November 2013.

Working with engineers early with prototypes allowed us to do the following:

- Confirm that the new interaction, which shows users the browser rather than just telling them about it, was technically possible.
- Have access to try builds to test with users outside of the release channel. This was essential to learning which UI and interactions users would accept and adopt.
- Find bugs early and plan for the challenges we might face in implementation. This was essential for moving forward with proposed solutions.

3. Am I confident enough to propose a solution?

As a direct result of everything we learned in preliminary exploration, prototyping, and testing, we were able to propose solutions based on key findings that we felt confident would lead to a valuable onboarding experience. For example:

- an experience that doesn’t feel like a webpage and appears connected to the browser itself rather than isolated to the web
- an experience that balances low interruption with clear visibility that a UI tour is present
- a new interaction pattern where the web and chrome collaborate (as a result, users are noticing and remembering the experience more than they would if we served the same information in a typical web page)

Being able to share what we learned by referencing data and user test results made our design choices easier to understand for those not directly involved. It also made it much easier to have a discussion and make sure everyone felt confident in the design choices before moving forward.

For more about how we designed the experience, see “Introducing the update experience for Australis.”

4. Is everyone on board?

Collaboration leads not only to a better solution and experience for our users but also to smoother internal adoption of an idea. This project started as a few of us exploring assumptions, then became a weekly meeting of people interested in improving onboarding and user education, and is now a cross-functional team working together toward the Australis launch in Firefox 29.

The collaboration spans teams such as release engineering, web development, marketing, visual design, UX, user advocacy and support, metrics, and others. This is an example of how UX isn’t an isolated step in the process. It affects the whole life cycle of a project and requires balancing input and collaboration from all teams and roles.

5. Are we improving?

With all of the hard work we have put into this, we want to keep improving the experience for our users. To do so, we need to know both how we define success and how to monitor it. As we release the experience to more users in Firefox 29, we’ll learn more about how it affects behavior, usage hours, and overall enjoyment of Firefox. What we learn will also help us improve other onboarding touchpoints, such as the First Run experience. Because we built the controls and most of the UI as web content, we are not tied to the browser release cycle and can iterate quickly.

An interesting challenge is that interaction occurs both in the browser chrome and in the web page. We want you to use the browser and not just interact with a web page, so high interaction in a web page doesn’t necessarily equal success. Success is when the user finds value in the message we are communicating and that results in using something new in the browser, using the browser more, or adopting a new behavior. If we notice changes in user behavior and long-term use of the browser, knowing whether they are a direct result of an onboarding experience will guide our improvements and next steps.

What we’ve learned isn’t about an exact process that will work for every project, but about how answering these questions and collaborating early will lead to a better experience for our users.

We love to discuss all things onboarding. If you have any questions, please reach out to Holly Habstritt Gaal and Zhenshuo Fang.
