This year, the Firefox User Research team is planning to add two new researchers to our group. The job posting went live last month, and after just a few weeks of accepting applications, we had over 900 people apply.

Current members of the Firefox User Research team fielded dozens of messages from prospective applicants during this time, most asking for informational meetings to discuss the open role. We decided as a team to decline these requests across the board because we did not have the bandwidth for the number of meetings requested and, more importantly, because we have spent a significant amount of time this year working to minimize bias in our hiring process.

We felt that meeting with candidates outside of the formal hiring process would give an unfair advantage to some candidates and undermine our de-biasing work. At the same time, in alignment with Mozilla’s values and building on Mozilla’s diversity and inclusion disclosures from earlier this year, we realized there was an opportunity to be more transparent about our hiring process, both for future job applicants and for teams inside and outside Mozilla that are thinking about how to minimize bias in their own hiring.

Our Hiring Process Before This Year

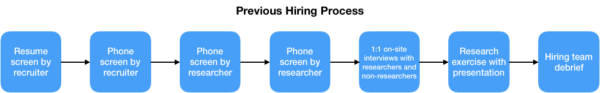

Before this year, our hiring process consisted of a number of steps. First, a Mozilla recruiter would screen resumes for basic work requirements such as legal status to work in the regions where we were hiring and high-level relevant work experience. Applicants with resumes that passed the initial screen would then be screened by the recruiter over the phone. The purpose of the phone screen was to verify the HR requirements and to confirm the applicant’s own requirements, work history, and most relevant experience.

If the applicant passed the screen with the recruiter, two members of the research team would conduct individual phone screens with the applicant to understand the applicant’s experience with different research methods and any work with distributed teams. Applicants who passed the screen with the researchers would be invited to a Mozilla office for a day of 1:1 in-person interviews with researchers and non-researchers and asked to present a research exercise prepared in advance of the office visit.

However, our former hiring process had a number of limitations. Each Mozilla staff person involved determined their own questions for the phone and in-person components. We had a living document of questions team members liked to ask, but staff were free to draw on this list as little or as much as they wanted. Moreover, while each staff person had to enter notes into our applicant tracking system after a phone screen or interview with an applicant, we had no explicit expectations about how these notes were to be structured. We were also inconsistent in how we referred to notes during the hiring team debrief meetings where final decisions about applicants were typically made.

Our New Hiring Process: What We’ve Done

Our new hiring process is a work in progress. We want to share the strides we have made and also what we would still like to do. Our first step in trying to reduce bias in our hiring process was to document our existing hiring process, which was not documented comprehensively anywhere, and to try to identify areas for improvement. Simultaneously, we set out to learn as much as we could about bias in hiring in general. We consulted members of Mozilla’s Diversity and Inclusion team, dug into materials from Stanford’s Clayman Institute for Gender Research, and talked with several managers in other parts of Mozilla who had undertaken de-biasing efforts for their own hiring. This “discovery” period helped us identify a number of critical steps.

First, we needed to develop a list of essential and desired criteria for job candidates. The researcher job description we had been using reflected many of the criteria we ultimately kept, but the exercise of distilling essential and desired criteria allowed current research team members to make explicit much that had been implicit.

Team members were able to ask questions about the criteria, challenge assumptions, and in the end build a shared understanding of expectations for members of our team. For example, we previously sought out candidates with 1–3 years of work experience. With this criterion, we were receiving applications from some candidates who only had experience within academia. It was through discussing how each of our criteria relates to the ways we actually work at Mozilla that we determined that what was more essential than 1–3 years of any user research experience was 1–3 years of experience working specifically in industry. The task of distilling our hiring criteria was not necessarily difficult, but it took several hours of synchronous and asynchronous discussion, time we all acknowledged was well spent because our new hiring process would be built from these agreed-upon criteria.

Next, we wrote phone screen and interview questions that aligned with the essential and desired criteria. We completed this step mostly asynchronously, with each team member contributing and reviewing questions. We also asked the UX designers, content strategists, and product managers we work with to contribute questions they would like to ask researcher candidates, likewise aligned with our essential and desired criteria.

The next big piece was to develop a rubric for grading answers to the questions we had just written. For each question, again mostly asynchronously, research team members detailed what they thought were “excellent,” “acceptable,” and “poor” answers, with the goal of producing a rubric that was self-explanatory enough to be used by hiring team members other than ourselves. Like the exercise of crafting criteria, this step required as much research team discussion time as writing time. We then took our completed draft of the rubric and determined at which phase of the hiring process each question would be asked.

Additionally, we revisited the research exercise that we have candidates complete to make its purpose and constraints more explicit. As we did for the phone screen and interview questions, we developed a detailed rubric for the research exercise based on our essential and desired hiring criteria.

Most recently, we have turned our new questions and rubrics into worksheets, which Mozilla staff will use to document applicants’ answers. These worksheets will also allow staff to document any additional questions they pose to applicants and the corresponding answers, as well as questions applicants ask us. Completed worksheets will be linked to our applicant tracking system and be used to structure the hiring team debrief meetings where final decisions about leading applicants will be made.
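
For teams that keep this kind of material in a structured form, the questions, rubric levels, and worksheet described above could be modeled roughly as follows. This is only an illustrative sketch, not the worksheet template our team uses: the class names, field names, and sample question are all hypothetical, and the only details taken from this post are the three rating levels, the link between questions and criteria, and the three kinds of notes a worksheet records.

```python
from dataclasses import dataclass, field
from enum import Enum


class Rating(Enum):
    """The three rubric levels named in the post."""
    EXCELLENT = "excellent"
    ACCEPTABLE = "acceptable"
    POOR = "poor"


@dataclass
class RubricQuestion:
    """One question and what each rating level looks like (hypothetical structure)."""
    text: str
    criterion: str               # the essential or desired criterion it maps to
    phase: str                   # e.g. "phone screen" or "interview"
    guidance: dict[Rating, str]  # description of an answer at each level


@dataclass
class Worksheet:
    """Notes one staff person records for one applicant (hypothetical structure)."""
    applicant_id: str
    interviewer: str
    rated_answers: list[tuple[RubricQuestion, str, Rating]] = field(default_factory=list)
    extra_questions: list[tuple[str, str]] = field(default_factory=list)  # (question, answer)
    applicant_questions: list[str] = field(default_factory=list)

    def record(self, question: RubricQuestion, notes: str, rating: Rating) -> None:
        """Store the notes and rating for one rubric question."""
        self.rated_answers.append((question, notes, rating))

    def ratings_by_criterion(self) -> dict[str, list[str]]:
        """Group recorded ratings by criterion, for use in a debrief meeting."""
        grouped: dict[str, list[str]] = {}
        for question, _notes, rating in self.rated_answers:
            grouped.setdefault(question.criterion, []).append(rating.value)
        return grouped


# Example with made-up content.
question = RubricQuestion(
    text="Tell us about a study you ran with a distributed team.",
    criterion="industry experience",
    phase="phone screen",
    guidance={
        Rating.EXCELLENT: "Concrete study, clear role, clear outcome.",
        Rating.ACCEPTABLE: "Relevant study, but role or outcome is vague.",
        Rating.POOR: "No relevant example.",
    },
)
worksheet = Worksheet(applicant_id="A-001", interviewer="researcher-1")
worksheet.record(question, "Described a diary study run across three offices.", Rating.ACCEPTABLE)
worksheet.applicant_questions.append("How does the team share findings?")
```

Keeping ratings grouped by criterion, rather than collapsing them into a single score, is a deliberate choice in this sketch: the post describes rubric levels and structured debriefs, not numeric scoring.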

From the work we have done to our hiring process, we anticipate a number of benefits, including:

- Less bias on the part of hiring team members about what we think of as desirable qualities in a candidate

- Less time spent screening resumes given the established criteria

- Less time preparing for and processing interviews given the standardized questions and rubrics

- Flexibility to add new questions at any step of the hiring process, with more attention to how these questions are tracked and their answers documented

- Less time on final decision making given the criteria, rubrics, and explicit expectations for documenting candidates’ answers

Next Steps

Our Mozilla recruiter and members of the research team have started going through the 900+ resumes we have received to determine which candidates will be screened by phone. We fully expect to learn a lot and make changes to our hiring process after this first attempt at putting it into practice. There are also several other resource-intensive steps we would like to take in the near future to mitigate bias further, including:

- Making our hiring process more transparent by publishing it where it would be discoverable (for instance, some Mozilla teams are publishing hiring materials to GitHub)

- Establishing greater alignment between our new process and the mechanics of our applicant tracking system to make the hiring process easier for hiring team members

- At the resume screening phase, blinding parts of resumes that can contribute to bias such as candidate names, names of academic institutions, and graduation dates

- Sharing the work we have done on our hiring process via blog posts and other platforms to help foster critical discussion
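
As one possible starting point for the resume blinding step listed above, the sketch below masks bias-prone fields in a resume that has already been parsed into nested dictionaries and lists. It is an assumption-laden illustration rather than something we have built: the field names (`name`, `institution`, `graduation_date`) and the sample resume are hypothetical, and a real applicant tracking system will have its own data format.

```python
import copy

# Hypothetical field names; a real applicant tracking system will use its own.
BLINDED_FIELDS = ("name", "institution", "graduation_date")
REDACTED = "[redacted]"


def blind_resume(resume: dict) -> dict:
    """Return a copy of a parsed resume with bias-prone fields masked."""
    blinded = copy.deepcopy(resume)  # leave the original record untouched
    _mask(blinded)
    return blinded


def _mask(node) -> None:
    """Walk nested dicts and lists in place, masking any blinded field."""
    if isinstance(node, dict):
        for key, value in node.items():
            if key in BLINDED_FIELDS:
                node[key] = REDACTED
            else:
                _mask(value)
    elif isinstance(node, list):
        for item in node:
            _mask(item)


# Example with made-up data.
resume = {
    "name": "Example Applicant",
    "education": [
        {"institution": "Example University", "degree": "MS", "graduation_date": "2015"},
    ],
    "experience": [{"title": "User Researcher", "years": 2}],
}
blinded = blind_resume(resume)
```

Masking structured fields does not catch identifying details that appear in free text, such as a name inside a cover letter, so a sketch like this would only cover part of the problem.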

Teams that are interested in trying out some of the exercises we carried out to improve our hiring process are welcome to use the template we developed for our purposes. We are also interested in learning how other teams have tackled bias in hiring, and we particularly welcome suggestions for blinding when hiring people from around the world.

We are looking forward to learning from this work and welcoming new research team members who can help us advance our efforts.

Thank you to Gemma Petrie and Mozilla’s Diversity & Inclusion Team for reviewing an early draft of this post.

Originally published on medium.com