This summer, I had the pleasure of interning at Mozilla with the Android Performance Team. I had some prior experience with Android, but little with performance beyond a few basic optimizations. Over the course of the internship, my perspective on the importance of Android performance changed. I learned that we can improve performance by looking at the codebase through the lens of four pillars of Android performance. In this blog post, I will describe those four pillars: parallelism, prefetching, batching, and improving XML layouts.

Parallelism

Parallelism is the idea of executing multiple tasks simultaneously so that the overall running time of a program is shorter. Many tasks have no particular reason to run on the main UI thread and can be performed on other threads. For example, disk reads on the main thread are almost always frowned upon, and rightfully so: they are generally very time-consuming and can block the main thread. It is often helpful to look through your codebase and ask: does this need to be on the main thread? If not, move it to another thread. The main thread’s only responsibilities should be to update the UI and handle user interactions.
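
As a rough illustration of moving slow work off the calling thread, here is a minimal sketch using a plain `java.util.concurrent` executor. The function `readConfigFromDisk` and the thread name `background-io` are made up for this example, and the "disk read" is simulated with a sleep.

```kotlin
import java.util.concurrent.Callable
import java.util.concurrent.Executors
import java.util.concurrent.TimeUnit

// Hypothetical stand-in for a slow disk read: it only sleeps and reports its thread.
fun readConfigFromDisk(): String {
    Thread.sleep(100)
    return "config loaded on ${Thread.currentThread().name}"
}

// Runs the slow task on a named background thread instead of the caller's thread.
fun loadConfigInBackground(): String {
    val background = Executors.newSingleThreadExecutor { runnable ->
        Thread(runnable, "background-io")
    }
    try {
        val pending = background.submit(Callable { readConfigFromDisk() })
        // The calling thread is free to do other work here.
        // get() blocks and is used only so this demo can return the result;
        // a real UI would deliver the result through a callback instead.
        return pending.get(1, TimeUnit.SECONDS)
    } finally {
        background.shutdown()
    }
}

fun main() {
    println(loadConfigInBackground())
}
```

The point of the sketch is only that the slow call runs on a thread other than the caller's; on Android, the result would be handed back to the main thread rather than waited on.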

We are used to achieving parallelism through multi-threading in languages such as Java and C++. However, multi-threaded code has several disadvantages: it is more complex to write and understand, harder to test, prone to deadlocks, and thread creation is costly. Enter coroutines! Kotlin’s coroutines are tasks that we can execute concurrently. They are like lightweight threads that can be suspended and resumed quickly. Structured concurrency, as presented in Kotlin, makes it easier to reason about concurrent applications, and when the code is easier to read, it is easier to focus on the performance problems.

A dispatcher determines which thread or thread pool a coroutine runs on. Here are the four standard dispatchers for coroutines.

- Main
  - Consists of only the main UI thread.
  - A good rule of thumb is to avoid running long jobs on this dispatcher so that they do not block the UI.
- IO
  - Backed by a thread pool whose threads are expected to spend most of their time waiting on IO operations.
  - Useful for blocking tasks such as network calls and disk access.
- Default
  - Used when no dispatcher is provided.
  - Optimized for CPU-intensive workloads.
- Unconfined
  - Not confined to any specific thread or thread pool.
  - A coroutine dispatched through the Unconfined dispatcher starts executing immediately in the caller's thread.
  - Used when we do not care which thread the code runs on.

Furthermore, the function withContext() is optimized for switching between thread pools. Therefore, you can perform an IO operation on the IO dispatcher and then switch to the main thread to update the UI. Since the system handles thread management, all we need to do is tell it which code to run on which thread pool through the dispatchers.

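    import kotlinx.coroutines.Dispatchers
    import kotlinx.coroutines.launch
    import kotlinx.coroutines.withContext

    // `scope` is a CoroutineScope owned by the caller (for example, a lifecycle scope).
    fun fetchAndDisplayUsers() {
        scope.launch(Dispatchers.IO) {
            // Fetch users on the IO dispatcher, off the main thread
            val users = fetchUsersFromDB()
            withContext(Dispatchers.Main) {
                // Update the UI with `users` on the main thread
            }
        }
    }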
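
To show how structured concurrency can run independent work in parallel, here is a minimal sketch that runs on a plain JVM, assuming `kotlinx-coroutines-core` is on the classpath. The functions `fetchUsers`, `fetchSettings`, and `loadScreenData` are hypothetical, and the blocking work is simulated with a sleep. `Dispatchers.Main` is left out because it needs an Android (or other UI) runtime.

```kotlin
import kotlinx.coroutines.Dispatchers
import kotlinx.coroutines.async
import kotlinx.coroutines.coroutineScope
import kotlinx.coroutines.runBlocking
import kotlinx.coroutines.withContext
import kotlin.system.measureTimeMillis

// Hypothetical blocking fetch, moved onto the IO dispatcher.
suspend fun fetchUsers(): List<String> = withContext(Dispatchers.IO) {
    Thread.sleep(200) // simulated database or network latency
    listOf("ada", "grace")
}

// A second hypothetical fetch that does not depend on the first.
suspend fun fetchSettings(): Map<String, String> = withContext(Dispatchers.IO) {
    Thread.sleep(200)
    mapOf("theme" to "dark")
}

// Starts both fetches concurrently and suspends until both have finished.
// If either one fails, coroutineScope cancels the other.
suspend fun loadScreenData(): Pair<List<String>, Map<String, String>> = coroutineScope {
    val users = async { fetchUsers() }
    val settings = async { fetchSettings() }
    users.await() to settings.await()
}

fun main(): Unit = runBlocking {
    val elapsed = measureTimeMillis {
        val (users, settings) = loadScreenData()
        println("users=$users settings=$settings")
    }
    // Both fetches overlap, so this should be closer to one sleep than to two.
    println("took about $elapsed ms")
}
```

Because the two fetches are independent, starting them with `async` lets their waiting time overlap instead of adding up.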

Prefetching

Prefetching is the idea of fetching resources early and storing them in memory so that they can be accessed quickly when the data is eventually needed. It is a prevalent technique in computer processors, which pull data from slow storage into fast-access storage before the data is required. A standard pattern is to prefetch while the application is in the background. One example of prefetching is making network calls in advance and storing the results locally until needed. Prefetching, of course, needs to be balanced. Consider an application that relies on prefetching data to provide a smooth scrolling experience. If you prefetch too little, it is not going to be very useful, since the application will still spend a lot of time waiting on network calls. However, prefetch too much, and you run the risk of making your users wait and potentially draining the battery.

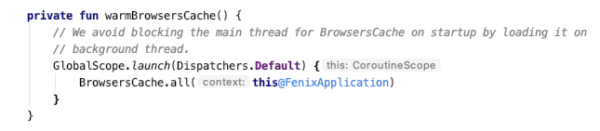

An example of prefetching in the Fenix codebase is warming up the BrowsersCache inside FenixApplication (our main Application class).

Getting all the browser information in advance since it’s used all over the place.
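
The scrolling trade-off described earlier can be sketched with a small feed that loads the next page before the user reaches the end of what is already in memory. This is an illustrative sketch only: `PrefetchingFeed`, `prefetchDistance`, and the `loadPage` callback are hypothetical names, and the load happens synchronously here, whereas a real app would do it on a background thread.

```kotlin
// Hypothetical feed that fetches ahead of the scroll position.
class PrefetchingFeed(
    private val pageSize: Int,
    private val prefetchDistance: Int,
    private val loadPage: (offset: Int, limit: Int) -> List<String>
) {
    private val items = mutableListOf<String>()

    // Number of pages fetched so far, exposed so the effect is observable.
    var pagesLoaded = 0
        private set

    // Called whenever the item at [position] becomes visible.
    fun onItemVisible(position: Int): String? {
        // Fetch ahead while the user is within prefetchDistance of the loaded end.
        while (position + prefetchDistance >= items.size) {
            val page = loadPage(items.size, pageSize)
            if (page.isEmpty()) break // no more data to prefetch
            items += page
            pagesLoaded++
        }
        return items.getOrNull(position)
    }
}

fun main() {
    // Fake data source standing in for a network call.
    val feed = PrefetchingFeed(pageSize = 10, prefetchDistance = 3) { offset, limit ->
        List(limit) { i -> "item ${offset + i}" }
    }
    feed.onItemVisible(0)
    println(feed.pagesLoaded) // 1: the first page is loaded on demand
    feed.onItemVisible(6)
    println(feed.pagesLoaded) // 1: still more than 3 items from the end
    feed.onItemVisible(7)
    println(feed.pagesLoaded) // 2: within 3 items of the end, so the next page is fetched
}
```

Tuning `prefetchDistance` and `pageSize` is where the balance lies: small values mean the user catches up with the loader, and large values mean fetching data that may never be shown.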

Batching

Batching is the idea of grouping tasks together so that they can be executed sequentially without paying the setup overhead for each one. For example, in the Android database library Room, you can insert a list of objects in a single transaction (batching), which will be faster than inserting the items one by one. You can also batch network calls to save precious network resources and, in the process, battery. In Volley, an HTTP library for Android, you can add multiple web requests to a single RequestQueue instance.

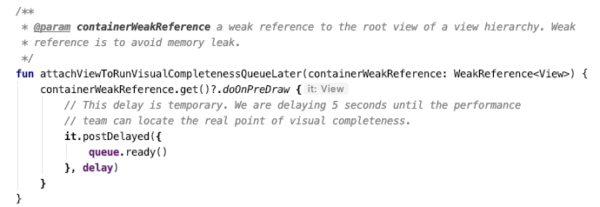

An example of batching in the Fenix codebase is the VisualCompletenessQueue, which stores the tasks that need to run after the first screen is visible to the user. These tasks include warming up the history storage, initializing the account manager, etc.

Attaching a VisualCompletenessQueue to the view to execute once the screen is visible.
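
The general idea of deferring tasks until the first screen is visible can be sketched as follows. This is a simplified, hypothetical queue written for illustration, not the Fenix implementation; the names `DeferredTaskQueue`, `runWhenReady`, and `markReady` are made up.

```kotlin
// Hypothetical, simplified deferred-task queue (not the Fenix implementation).
class DeferredTaskQueue {
    private val pending = ArrayDeque<() -> Unit>()
    private var ready = false

    // Queues the task, or runs it right away if the screen is already visible.
    fun runWhenReady(task: () -> Unit) {
        if (ready) task() else pending.addLast(task)
    }

    // Call once the first screen has been drawn: runs the queued tasks in order.
    fun markReady() {
        ready = true
        while (pending.isNotEmpty()) pending.removeFirst().invoke()
    }
}

fun main() {
    val queue = DeferredTaskQueue()
    queue.runWhenReady { println("warm up history storage") }
    queue.runWhenReady { println("initialize account manager") }
    println("first screen drawn")
    queue.markReady() // the two queued tasks run now, as one batch
}
```

Collecting the tasks and running them together keeps startup work out of the way until the user can already see the first screen.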

XML Layouts

Let’s talk about the importance of improving the XML layout. If the frame rate is 30 FPS, we have roughly 33 milliseconds to draw each frame. If the drawing is not complete in the given time, we consider the frame to be dropped. Dropped frames are what make a UI feel laggy and unreliable: the more frames are dropped, the more unstable the UI feels. Poorly optimized XML layouts can lead to a choppy-looking UI. In general, these issues fall into two categories: a heavily nested view hierarchy (a CPU problem) and overdrawing (a GPU problem).
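
The frame-budget arithmetic above can be written out as a short sketch. The function names and the sample draw times are made up for illustration.

```kotlin
// Time available to draw one frame at the given frame rate, in milliseconds.
fun frameBudgetMs(fps: Int): Double = 1000.0 / fps

// Counts the frames whose draw time exceeded the budget for the given frame rate.
fun countDroppedFrames(drawTimesMs: List<Double>, fps: Int): Int {
    val budget = frameBudgetMs(fps)
    return drawTimesMs.count { it > budget }
}

fun main() {
    val drawTimes = listOf(12.0, 35.5, 20.1, 40.0, 16.0) // made-up samples, in ms
    println(frameBudgetMs(30))                 // about 33.3 ms per frame
    println(countDroppedFrames(drawTimes, 30)) // 2
    println(countDroppedFrames(drawTimes, 60)) // 3
}
```

The same set of frames drops more often at a higher target frame rate, because the budget per frame shrinks as the frame rate goes up.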

Heavily nested view hierarchies can be reasonably simple to flatten. The tricky part is avoiding overdraw. If a UI component is fully hidden by other components, it is unnecessary to waste GPU power drawing it in the background. For instance, it is wasteful to draw a background for a layout that is entirely covered by a RecyclerView. Android has tools such as the Layout Inspector to help you improve the UI. Additionally, the developer options on Android phones include many features for debugging the UI, such as showing GPU overdraw on the screen.

Conclusion

Paying attention to the application through the lens of parallelism, prefetching, batching, and improving the XML layout will help the application perform better. These fundamentals are often overlooked. Sometimes, developers seem not to care about memory and rely entirely on garbage collection for memory cleanup and optimizations. However, not many developers realize that the more often garbage collection runs, the worse the user experience will be. Since garbage collection can pause the application’s main thread while it runs, frames may not be drawn in time, creating a laggy UI. Hence, using the four pillars of performance as a guide, we can avoid many performance issues before they appear.
